Does A Greedy Intel Driver Improve Performance?

Starting with QGears2 using the X Render extension, the gears test performed best when greedy migration heuristics were used with EXA on the xf86-video-intel 2.6.3 driver. In the default mode the test averaged 10.22 FPS, but in greedy mode that jumped to 15.48 FPS. By comparison, the xf86-video-intel 2.4.1 driver with EXA ran at 15.22 FPS. While UXA is designed to be faster than EXA and to use the GEM memory manager, not everything is optimized. In this test, UXA was actually slower than EXA.
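To put the gears numbers in relative terms, here is a minimal sketch of the arithmetic. The `percent_change` helper and the variable names are ours for illustration only; the FPS values are the ones quoted above.

```python
def percent_change(baseline: float, value: float) -> float:
    """Return the relative change of value against baseline, in percent."""
    return (value - baseline) / baseline * 100.0


# FPS figures quoted in the text for the QGears2 gears test (X Render)
exa_default = 10.22  # xf86-video-intel 2.6.3, EXA, default migration heuristic
exa_greedy = 15.48   # xf86-video-intel 2.6.3, EXA, greedy migration heuristic
exa_old = 15.22      # xf86-video-intel 2.4.1, EXA

greedy_gain = percent_change(exa_default, exa_greedy)
vs_old = percent_change(exa_old, exa_greedy)

print(f"greedy vs. default (2.6.3): {greedy_gain:+.1f}%")
print(f"greedy 2.6.3 vs. 2.4.1:     {vs_old:+.1f}%")
```

By this calculation, greedy mode is roughly 51% faster than the 2.6.3 default in this test, but less than 2% ahead of the older 2.4.1 driver.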

UXA jumped out of last place in the text test, where it delivered the best performance. UXA (the UMA Acceleration Architecture) averaged 9.74 FPS while the EXA default was at 7.21 FPS. Enabling greedy migration heuristics actually caused EXA's performance to drop to just under five FPS. The older xf86-video-intel 2.4.1 driver ran at 6.44 FPS.

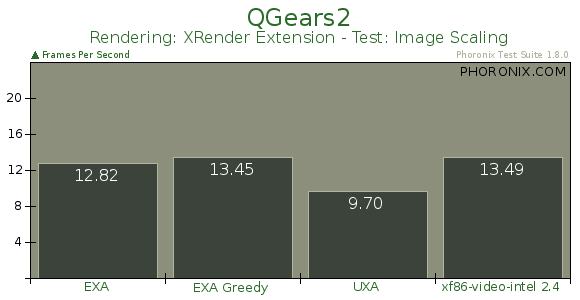

UXA regressed again when it came to X Render image scaling through QGears2. EXA averaged 12.82 FPS, while UXA dropped to 9.70 FPS. The EXA greedy configuration performed better, but it remained slower than the xf86-video-intel 2.4.1 driver.

Both a greedy Intel driver and one bearing UXA led to slower 2D performance in our first GtkPerf run. UXA was twice as slow as the other test settings, while greedy slowed performance by only a percent or two. The older 2.4 Intel driver delivered the best performance.