Llamafile 0.8 Releases With LLaMA3 & Grok Support, Faster F16 Performance

Llamafile 0.8 is an exciting release that adds support for LLaMA3, Grok, and Mixtral 8x22b.

Mixture of Experts (MoE) models like Mixtral and Grok now also run 2-5x faster on CPUs following a refactoring of the tinyBLAS CPU code. F16 performance is also around 20% faster on the Raspberry Pi 5, around 30% faster on Intel Skylake, and around 60% faster on an Apple M2.

Llamafile 0.8 also brings improved CPU feature detection and other enhancements:

- Support for LLaMA3 is now available

- Support for Grok has been introduced

- Support for Mixtral 8x22b has been introduced

- Support for Command-R models has been introduced

- MoE models (e.g. Mixtral, Grok) now go 2-5x faster on CPU

- F16 is now 20% faster on Raspberry Pi 5 (TinyLLaMA 1.1b prompt eval improved 62 -> 75 tok/sec)

- F16 is now 30% faster on Skylake (TinyLLaMA 1.1b prompt eval improved 171 -> 219 tok/sec)

- F16 is now 60% faster on Apple M2 (Mistral 7b prompt eval improved 79 -> 128 tok/sec)

- The chat template can now be overridden in the web GUI when creating llamafiles

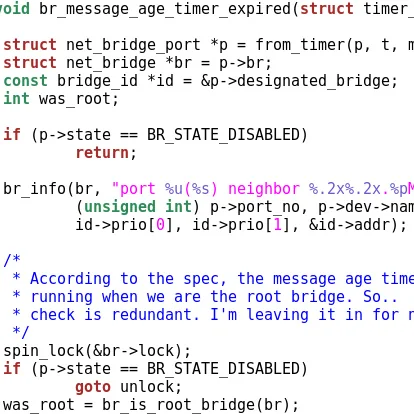

- Markdown and syntax highlighting in the server have been improved

- CPU feature detection has been improved
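
As a quick sanity check on the F16 figures above, the rounded percentages can be recomputed from the quoted prompt-eval throughput numbers. The snippet below is only an illustrative sketch: the `benchmarks` dictionary and the `speedup_percent` helper are names made up for this example and are not part of Llamafile.

```python
# Prompt-eval throughput in tokens/sec (before, after), as quoted in the list above.
# The dictionary layout and helper name are illustrative, not part of Llamafile.
benchmarks = {
    "Raspberry Pi 5 (TinyLLaMA 1.1b)": (62, 75),
    "Intel Skylake (TinyLLaMA 1.1b)": (171, 219),
    "Apple M2 (Mistral 7b)": (79, 128),
}


def speedup_percent(before, after):
    """Return the relative throughput gain in percent."""
    return (after - before) / before * 100.0


# Round to whole percentages for comparison with the headline figures.
results = {
    name: round(speedup_percent(before, after))
    for name, (before, after) in benchmarks.items()
}

for name, pct in results.items():
    print(f"{name}: ~{pct}% faster")
```

This works out to roughly 21%, 28%, and 62%, in line with the rounded 20%, 30%, and 60% quoted for the Raspberry Pi 5, Skylake, and Apple M2 respectively.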

Llamafile 0.8 is available for download via GitHub. I'll be working on new Llamafile benchmarks soon.