Originally posted by Blademasterz



Rust-Written Coreutils 0.0.25 With Improved GNU Compatibility


Are we comparing apples with apples? If we compare separate executables vs. a single binary, with no other differences (i.e. both builds use dynamic linking against system libs), then yes, the single binary is more size-efficient. Otherwise it isn't guaranteed at all.

Originally posted by Quackdoc

This isn't true. While, yes, you can save some space with dynamic linking, it isn't as much as you save by building a single binary with LTO.
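The single-binary layout being debated here is the BusyBox-style "multicall" binary: one executable that picks which utility to run from the name it was invoked as (usually through symlinks) or from its first argument. The sketch below is a hypothetical toy, not uutils' actual dispatcher; the binary name `multicall` and the applets `echo`, `true` and `false` are stand-ins. It only illustrates where the savings come from: every applet lives in one file, so the statically linked Rust standard library and any shared helper code are stored once instead of once per executable.

```rust
use std::path::Path;

/// Every applet shares this signature: arguments in, exit status out.
type Applet = fn(&[String]) -> i32;

// Hypothetical stand-in applets; a real multicall binary links in full implementations.
fn echo(args: &[String]) -> i32 {
    println!("{}", args.join(" "));
    0
}

fn true_applet(_args: &[String]) -> i32 {
    0
}

fn false_applet(_args: &[String]) -> i32 {
    1
}

/// Map an applet name to its entry point.
fn lookup(name: &str) -> Option<Applet> {
    match name {
        "echo" => Some(echo as Applet),
        "true" => Some(true_applet as Applet),
        "false" => Some(false_applet as Applet),
        _ => None,
    }
}

/// Resolve argv the way multicall binaries typically do: first by the file name
/// the binary was invoked as (e.g. a symlink `echo -> multicall`), then by
/// treating argv[1] as the applet name (`multicall echo hi`).
fn dispatch(argv: &[String]) -> Option<(Applet, &[String])> {
    let invoked = argv
        .first()
        .and_then(|p| Path::new(p).file_name())
        .and_then(|n| n.to_str())?;
    if let Some(applet) = lookup(invoked) {
        return Some((applet, &argv[1..]));
    }
    let name = argv.get(1)?;
    lookup(name).map(|applet| (applet, &argv[2..]))
}

fn main() {
    let argv: Vec<String> = std::env::args().collect();
    let code = match dispatch(&argv) {
        Some((applet, args)) => applet(args),
        None => {
            eprintln!("usage: multicall <applet> [args...]");
            127 // conventional "command not found" status
        }
    };
    std::process::exit(code);
}
```

Installed as `multicall` with a symlink such as `echo -> multicall`, running `echo hi` and `multicall echo hi` would go through the same code path.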


Thank you, that's very informative. And a bit worrying. If LLVM has a problem generating code for Rust, that's not something the LLVM guys were willing to fix in a timely fashion in the past.

Originally posted by jacob

Last week's This Week in Rust has an interesting blog post about this:

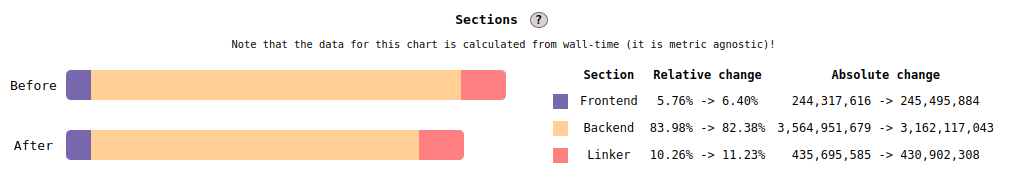

Rust compilation times are an ongoing topic that generates many discussions online¹. Most people don’t really care about what exactly takes time when compiling Rust programs; they just want it to be faster in general. But sometimes, you can see people pointing fingers at specific culprits, like LLVM or the borrow checker. In this post, I’ll try to examine who is the culprit in various situations, based on data gathered from a fun little experiment.

¹ Probably also offline.


Contrary to a popular misconception, it's apparently not the borrow checker (or the other static checks) that takes so much time; it's the codegen.

Last edited by bug77; 25 March 2024, 12:05 PM.


I think that now that Rust has gained a lot of traction, either the LLVM team will put a higher priority on addressing it, or the big players who rely on Rust, like Google, MS, etc., will do something about it and push it upstream. But it's not only an LLVM problem either. It's well known that the LLVM IR generated by rustc is incredibly complex, and the LLVM toolchain then spends a lot of time processing and optimising it. Since the introduction of MIR, the rustc team has been working on that as well.

Originally posted by bug77

Thank you, that's very informative. And a bit worrying. If LLVM has a problem generating code for Rust, that's not something the LLVM guys were willing to fix in a timely fashion in the past.
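One frequently cited reason rustc hands LLVM so much IR is monomorphization: every concrete type a generic function is used with gets its own compiled copy. A common mitigation, used inside the standard library itself (for example in `std::fs::read`), is to keep the generic function a thin shim around a non-generic inner function. The sketch below illustrates that pattern; `line_count` and the scratch file name are hypothetical, and whether this is the complexity the poster had in mind is an assumption.

```rust
use std::fs;
use std::io;
use std::path::Path;

/// Count the lines in a file (hypothetical example function).
///
/// Only this thin generic shim is instantiated once per argument type
/// (`&PathBuf`, `&Path`, `PathBuf`, ...); the real work lives in `inner`,
/// which is not generic and is therefore compiled just once.
pub fn line_count<P: AsRef<Path>>(path: P) -> io::Result<usize> {
    fn inner(path: &Path) -> io::Result<usize> {
        let text = fs::read_to_string(path)?;
        Ok(text.lines().count())
    }
    inner(path.as_ref())
}

fn main() -> io::Result<()> {
    // Hypothetical scratch file in the system temp directory.
    let path = std::env::temp_dir().join("line_count_demo.txt");
    fs::write(&path, "a\nb\nc\n")?;

    // Three argument types: three tiny shim instantiations, one shared `inner`.
    let by_ref_pathbuf = line_count(&path)?;
    let by_path = line_count(path.as_path())?;
    let by_pathbuf = line_count(path.clone())?;
    println!("{by_ref_pathbuf} {by_path} {by_pathbuf}"); // prints "3 3 3"

    fs::remove_file(&path)?;
    Ok(())
}
```

Without the inner function, the whole body (file reading, UTF-8 validation, line splitting) would be duplicated in the IR for each `P`, which is the kind of work that then shows up as codegen time.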


Do you mean lesser-quality contributions (that is, the same number of contributions, but each of lower quality), or fewer (quality) contributions (that is, not as many contributions, but of the same quality)?

Originally posted by Alexmitter

That's a downside. It just means that overall it will get less quality contributions.

Gooder English makes things smaller difficult to belowstand.


Yeah OK, but how many people use it? Do the 10000 commits cover cports and the rest of the base system? I don't even see Linux news websites talking about it anymore. Also, is it no longer alpha quality? Because that is the important part at the end of the day!

Originally posted by q66_

What would count as "having success"? Are ~10000 commits per year by ~40 authors, packaging close to 2000 different pieces of software on 5 CPU architectures (including all major web browsers and other tricky stuff), all while still in the alpha phase, not enough?



Yes, because, as I stated before, my use case is embedded and other low(er)-storage environments. Not so low that it is necessary to use BusyBox over a Rust alternative, but low enough that tooling like GNU coreutils, findutils, etc. becomes a pain.

Originally posted by imaami

Are we comparing apples with apples? If we compare separate executables vs. a single binary, with no other differences (i.e. both builds use dynamic linking against system libs), then yes, the single binary is more size-efficient. Otherwise it isn't guaranteed at all.


If you spend the bulk of your time linking, then either you're doing LTO or your linker is too slow.

Originally posted by swoorup

Not sure where you got the "Rust compilation is slower than C++" from. At best, they are both on par, since Rust has incremental compilation. However, the bulk of the time is spent in linking.


You have fd-find, which is more featureful and faster.

Originally posted by Quackdoc

coreutils is one thing, findutils is another, etc. BusyBox's utilities span a lot of "utils/tools" suites, from coreutils to net-tools, procps, and many more. Even if you were to combine all of the uutils projects into a single binary, it wouldn't be a complete BusyBox replacement, but it would be closer.

It is not directly compatible with findutils, though.
