AMD Arcturus Might Be The Codename Succeeding Navi


-

Originally posted by dungeon:
AMD made Fiji at 596 mm² before, and Vega 64 at 486 mm², and the latter is still faster than the former... If they had made Vega 64 the size of Fiji, it would be faster than nVidia's 10 series, so what? What is the point of that, when users would need to pay much more for it, it would draw more unneeded power, and so on and so forth, just to gain 5% of the whole market from less than 1% of people?

Thing is, Vega has 3.6 billion more transistors than Fury X. It only takes up less die space because it is manufactured on a better fabrication process. Both Vega 64 and Fury X have 64 CUs.

Vega achieved its higher performance via higher clock speeds. Once you clock it the same as Fury X, there is almost no performance difference between the two, even with Fury X's VRAM handicap; go through the benchmarks in the article below.

Vega was very hyped up in terms of architectural improvements that AMD completely failed to translate into real-world performance.
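For readers following the clock-for-clock argument, here is a back-of-the-envelope sketch of theoretical peak FP32 throughput. It assumes the usual GCN layout of 64 stream processors per CU, each retiring one fused multiply-add (2 FLOPs) per clock, and it uses reference clocks that are not stated in the thread (Fury X at 1050 MHz, air-cooled Vega 64 at its 1546 MHz boost clock). With both chips at 64 CUs, the whole theoretical gap comes from clock speed; it says nothing about real game performance, which is what the benchmarks measure.

```python
def peak_fp32_tflops(compute_units: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 rate for a GCN-style GPU (assumed layout).

    Assumes 64 stream processors per CU, each doing one fused
    multiply-add (2 floating-point operations) per clock.
    """
    stream_processors = compute_units * 64
    flops_per_second = stream_processors * 2 * clock_mhz * 1e6
    return flops_per_second / 1e12


FURY_X_MHZ = 1050   # reference clock (assumption, not from the thread)
VEGA_64_MHZ = 1546  # air-cooled boost clock (assumption, not from the thread)

fury_x = peak_fp32_tflops(64, FURY_X_MHZ)
vega_64 = peak_fp32_tflops(64, VEGA_64_MHZ)
vega_64_at_fury_clock = peak_fp32_tflops(64, FURY_X_MHZ)

print(f"Fury X:              {fury_x:.2f} TFLOPS")
print(f"Vega 64:             {vega_64:.2f} TFLOPS")
print(f"Vega 64 @ 1050 MHz:  {vega_64_at_fury_clock:.2f} TFLOPS")
print(f"Vega/Fury ratio:     {vega_64 / fury_x:.2f}x")
print(f"Clock ratio:         {VEGA_64_MHZ / FURY_X_MHZ:.2f}x")
```

Both ratios come out the same, so on paper a Vega 64 at Fury X clocks is a Fury X; any per-clock gains from the architecture would have to show up on top of that, which is what a clock-matched benchmark tests.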


-

Originally posted by humbug:
Thing is, Vega has 3.6 billion more transistors than Fury X. It only takes up less die space because it is manufactured on a better fabrication process. Both Vega 64 and Fury X have 64 CUs.

Yes, and Vega 64 was launched at a lower price than Fury X, which is correct; did you notice that? The launch price of Vega 64 was lower than any Fury, even the non-X... You know, if you pay less for more, that is already a sign of improvement.

While on the nVidia high end (which I call the crazy-end, as that is more proper), you pay more and more for more instead. If it is true that you are a customer of that crazy-end, then that means you are willing to pay higher and higher consumer taxes into growing bigger and bigger chips. Customers of Titan V also pay much, much more for not much more.

Originally posted by humbug:
Vega was very hyped up in terms of architectural improvements that AMD completely failed to translate into real-world performance.

Vega as an architecture raised various limits, improved existing engines and added new ones, etc... why do you expect more than that? Vega didn't fail at all; AMD just didn't make a chip of the same size and price as Fury, so that you could spot the difference more clearly. If the fastest chip of a series isn't faster than the previous, bigger chips, that does not mean there are no improvements.

You should really exclude bigger and expensive chips like Fury from architectural comparisons; these are exclusives, so exceptions.

Last edited by dungeon; 30 September 2018, 08:25 AM.


-

Originally posted by dungeon:
Yes, and Vega 64 was launched at a lower price than Fury X, which is correct; did you notice that? The launch price of Vega 64 was lower than any Fury, even the non-X... You know, if you pay less for more, that is already a sign of improvement.

I was talking about whether the product reached the engineering/technical/performance objectives that they set out to achieve. Obviously AMD will never tell us that, but it looks fairly certain that it didn't. They launched their architecture so much later than the Nvidia competition and still had to clock Vega 64 far outside the optimal range just to reach performance parity with the GTX 1080, which was not even Nvidia's flagship.

I was not talking about the product positioning or pricing. Anyway, it didn't matter, because nobody could buy it at MSRP.

Originally posted by dungeon:
While on the nVidia high end (which I call the crazy-end, as that is more proper), you pay more and more for more instead. If it is true that you are a customer of that crazy-end, then that means you are willing to pay higher and higher consumer taxes into growing bigger and bigger chips.

I agree. And I think Nvidia is doing a lot of damage to the PC gaming ecosystem by making it more and more expensive and elitist to be a PC gamer. This must change, or else in the long term it will push people away from PC and towards consoles, and that means fewer PC games. They are poisoning their own market.

I am an AMD GPU user, by the way, and have no intention of switching to Nvidia, because AMD just works better since I use both Windows and Linux. My graphics card (a Sea Islands R9 290) has served me very well on both Windows and Linux, and driver support is good. I just want to see RTG do a lot better than Vega. I look forward to Navi.

Originally posted by dungeon:
Vega as an architecture raised various limits, improved existing engines and added new ones, etc... why do you expect more than that? Vega didn't fail at all; AMD just didn't make a chip of the same size and price as Fury, so that you could spot the difference. If the fastest chip of a series isn't faster than the previous, bigger chips, that does not mean there are no improvements.

You should really exclude bigger and expensive chips like Fury from architectural comparisons; these are exclusives, so exceptions.

Vega clocked higher and had better performance per watt than Fiji. But per clock cycle, all the theoretical performance improvements failed to show up in real-world applications. AMD got lucky that the mining boom hit and they were able to sell these GPUs.

See below:

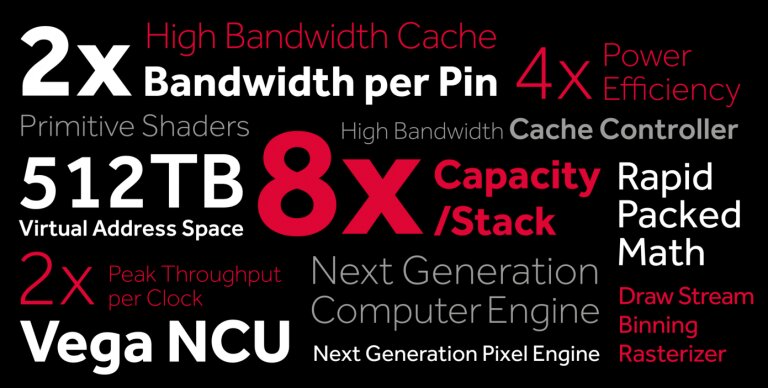

From the official AMD whitepaper on Vega, one of the first lines reads: "Although this unit count may be familiar from prior Radeon GPUs, the combination of higher clock speeds and improvements in the "Vega" architecture can improve instruction throughput substantially." From this statement alone we’d expect the instruction throughput to be better.

The whitepaper also goes on to state: "For instance, the fixed-function geometry pipeline is capable of four primitives per clock of throughput, but "Vega’s" next-generation geometry path has much higher potential capacity." Where’s that capacity?

Vega has new primitive shader support that should enable more than four times the peak primitive cull rate per clock cycle. Vega allows fast paths through the hardware by avoiding unnecessary processing. There is even more, including an intelligent workload distributor which adjusts pipeline settings based on the characteristics of the draw calls it receives, to maximize utilization. This is all part of the next-generation geometry engine.

Vega has a revised pixel engine introducing the Draw-Stream Binning Rasterizer (DSBR). This reduces unnecessary processing and data transfer on the GPU, which helps to boost performance and to reduce power consumption. Think of it as tiled rendering with the benefits of immediate-mode rendering. The whitepaper even says: "In the case of "Vega" 10, we observed up to 10% higher frame rates and memory bandwidth reductions of up to 33% when the DSBR is enabled for existing game applications, with no increase in power consumption." Where is that?
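The thread doesn't describe how DSBR works internally, and nothing here should be read as AMD's implementation. As a rough illustration of the general idea behind binning ("tiled") rasterizers only, here is a minimal sketch of the first step: sorting triangles into screen-space tiles by bounding box, so that each tile can later be rasterized with a small working set that can stay on-chip instead of going out to memory. The function name `bin_triangles`, the tile size and the screen size are hypothetical choices for illustration.

```python
import math
from collections import defaultdict


def bin_triangles(triangles, tile_size, width, height):
    """Map each screen tile (tx, ty) to the indices of triangles that may cover it.

    Uses each triangle's axis-aligned bounding box, clamped to the screen.
    This is a conservative test: a triangle can land in a tile that its
    edges never actually touch.
    """
    max_tx = (width - 1) // tile_size
    max_ty = (height - 1) // tile_size
    bins = defaultdict(list)
    for index, triangle in enumerate(triangles):
        xs = [x for x, _ in triangle]
        ys = [y for _, y in triangle]
        # Convert the bounding box to tile coordinates, clamped to the screen.
        tx0 = max(0, math.floor(min(xs)) // tile_size)
        tx1 = min(max_tx, math.floor(max(xs)) // tile_size)
        ty0 = max(0, math.floor(min(ys)) // tile_size)
        ty1 = min(max_ty, math.floor(max(ys)) // tile_size)
        # Off-screen triangles produce empty ranges and are dropped here.
        for ty in range(ty0, ty1 + 1):
            for tx in range(tx0, tx1 + 1):
                bins[(tx, ty)].append(index)
    return dict(bins)


triangles = [
    ((2, 2), (10, 2), (2, 10)),            # fits inside tile (0, 0)
    ((20, 4), (40, 4), (30, 12)),          # spans tiles (1, 0) and (2, 0)
    ((-50, -50), (-40, -50), (-45, -40)),  # entirely off-screen
]
print(bin_triangles(triangles, tile_size=16, width=64, height=64))
# {(0, 0): [0], (1, 0): [1], (2, 0): [1]}
```

Each bin then holds only the triangles relevant to one tile, which is where a binning rasterizer gets its chance to skip hidden work and save memory bandwidth; how much that saves in practice depends on the scene.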

L2 cache sizes are larger on Vega than on previous GPUs. The L2 cache is 4MB, twice the size of the L2 in previous high-end AMD GPUs.

Then there are features like the High-Bandwidth Cache Controller (not working) and Rapid Packed Math (no games out that use it yet.)

When you look at all the above architecture changes and benefits over previous high-end AMD GPUs, you have to ask yourself where they are and why they aren't making a bigger difference. Either the features are broken, turned off, or not working, or their advantages were simply over-marketed. As it is right now, the end result is that the clock speed advantage of Vega is what is holding it up, and actually holding it up quite well. Clock speed has long been king in the world of gaming, and that seems to still be an incredibly important factor today.

https://m.hardocp.com/article/2017/0...x_clock_for/15

Last edited by humbug; 30 September 2018, 08:33 AM.


-

Originally posted by humbug:
I am an AMD GPU user, by the way, and have no intention of switching to Nvidia, because AMD just works better since I use both Windows and Linux. My graphics card (a Sea Islands R9 290) has served me very well on both Windows and Linux, and driver support is good. I just want to see RTG do a lot better than Vega. I look forward to Navi.

So why do you care about what bigger, inflated chips do, then? If you want the same price: that R9 290 had a launch price of $399, which puts it in the class of Vega 56, since that also had the same launch price. You would see a lot of improvements between these two.

Or, if you are interested in Navi, just wait for a Navi at the same price 5 years later and you will be satisfied too, I guess. Or if you want a much better Navi, then you would need to pay more for a higher class; there is no other way.

Last edited by dungeon; 30 September 2018, 09:39 AM.


-

Originally posted by dungeon:
So why do you care about what bigger, inflated chips do, then? If you want the same price: that R9 290 had a launch price of $399, which puts it in the class of Vega 56, since that also had the same launch price. You would see a lot of improvements between these two.

Or, if you are interested in Navi, just wait for a Navi at the same price 5 years later and you will be satisfied too, I guess. Or if you want a much better Navi, then you would need to pay more for a higher class; there is no other way.

I want to see RTG doing well in the market. Not just for my personal needs, but for the good of the whole ecosystem. That's why I care.

Read my previous post again.


-

Originally posted by humbug:
Read my previous post again.

I read it already, but I don't know what else to answer other than to guess like this:

Originally posted by humbug:
Vega has a revised pixel engine introducing the Draw-Stream Binning Rasterizer (DSBR). This reduces unnecessary processing and data transfer on the GPU, which helps to boost performance and to reduce power consumption. Think of it as tiled rendering with the benefits of immediate-mode rendering. The whitepaper even says: "In the case of "Vega" 10, we observed up to 10% higher frame rates and memory bandwidth reductions of up to 33% when the DSBR is enabled for existing game applications, with no increase in power consumption." Where is that?

It's a driver thing, and since they used their "up to" vocabulary, that also means that for some apps it might be nothing or even worse, so it is probably enabled for some apps via profiles. I guess in those cases which are clearly bottlenecked by bandwidth.

I don't know the details, so I might even be wrong here, but especially if the engine is in a revised state, it might also still have some regressions somewhere (worse performance, some glitches, etc.), and since this is not a pure tile-based renderer, they don't enable it by default everywhere, but case by case via profiles.

Certainly 10% and 33% do not mean it is that much faster or that it saves that much everywhere, since the keyword "up to" was used.

Last edited by dungeon; 30 September 2018, 11:54 AM.


-

Originally posted by debianxfce:
Mainline kernels and Mesa are buggy. Bug fixes and the latest features are in the AMD drm-next-4.20-wip kernel and Mesa git. If you still have bugs after trying the latest software code, make a bug report. My distribution uses the latest code. https://www.youtube.com/watch?v=fKJ-IatUfis

Debian Testing with Ubuntu PPAs and kernels from source... really? That's asking for trouble.

Why not suggest Antergos or Arch with the mesa-git and llvm-svn repos and a single AUR package for the kernel? That's what I do, minus the mesa-git and llvm-svn repos (I run amd-staging-drm-next-git; I prefer stable release packages and stable repositories, and mesa-git and llvm-svn require the testing repositories, so I rarely use them).

Edit one file (pacman.conf) to add the llvm/mesa repos and the testing repos, run sudo pacman -Syu, then pacaur -S amd-staging-drm-next-git (or use pamac and its GUI for every step), reboot, done.

IMHO, that's a lot safer than mixing packages from different distros with manual compiles and kernel installs. Everything this way is all from the same OS/package tree and handled with package managers (Antergos directly uses Arch repos with an Antergos repo that has some easy-mode packages).

I ran Debian Testing for years and updates would break it every month or two. I would not recommend that setup to anyone, ever. Especially so since it requires mixing in Ubuntu packages.

Antergos, OTOH, has been running flawlessly for 3 years now with both XFCE and Plasma desktops, and my only issue has been the ZFS module building before SPL. I'm running ZFS 0.8.0, so that isn't a problem any more.

My distribution uses even latester code...if that's something to brag about...


-

Originally posted by pal666:
lol, you missed the last month or two? https://www.amazon.com/Radeon-Dual-F...70_&dpSrc=srch

I live in Europe, so we get squeezed for every euro cent we have...

No way we can get the card at that price here...

Only when US citizens stop buying those cards will the leftovers bring the price down in Europe...
