Originally posted by blackiwid

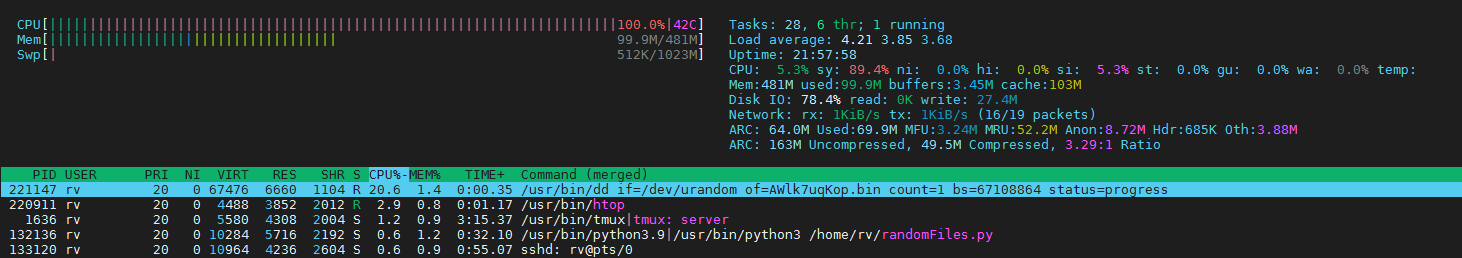

Also, ARC improving performance by caching data in RAM you aren't using is a good thing. If the kernel needs that memory for something else, it will take it back.
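
If you want to see how much RAM the ARC is holding right now, here is a rough sketch. It assumes OpenZFS on Linux, where the counters are exposed at `/proc/spl/kstat/zfs/arcstats` as `name type data` rows. The helper names `parse_arcstats` and `arc_summary` are made up for this example, not part of any ZFS tooling.

```python
from pathlib import Path

# Assumed location of the ARC counters for OpenZFS on Linux.
ARCSTATS_PATH = Path("/proc/spl/kstat/zfs/arcstats")


def parse_arcstats(text):
    """Parse kstat-style 'name type data' rows into a {name: int} dict."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        # Data rows have exactly three fields and an integer value;
        # the two header lines do not match and are skipped.
        if len(parts) == 3 and parts[2].isdigit():
            stats[parts[0]] = int(parts[2])
    return stats


def arc_summary(stats):
    """Return current ARC size and its ceiling in GiB (hypothetical helper)."""
    gib = 1024 ** 3
    return {"size_gib": stats["size"] / gib, "c_max_gib": stats["c_max"] / gib}


if __name__ == "__main__":
    if ARCSTATS_PATH.exists():
        print(arc_summary(parse_arcstats(ARCSTATS_PATH.read_text())))
    else:
        print("arcstats not found; is the ZFS module loaded?")
```

Run it before and after starting something memory-hungry. If the claim above holds on your box, `size_gib` should drop as other workloads ask for RAM, while `c_max_gib` stays at the configured ceiling.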
