Originally posted by sophisticles

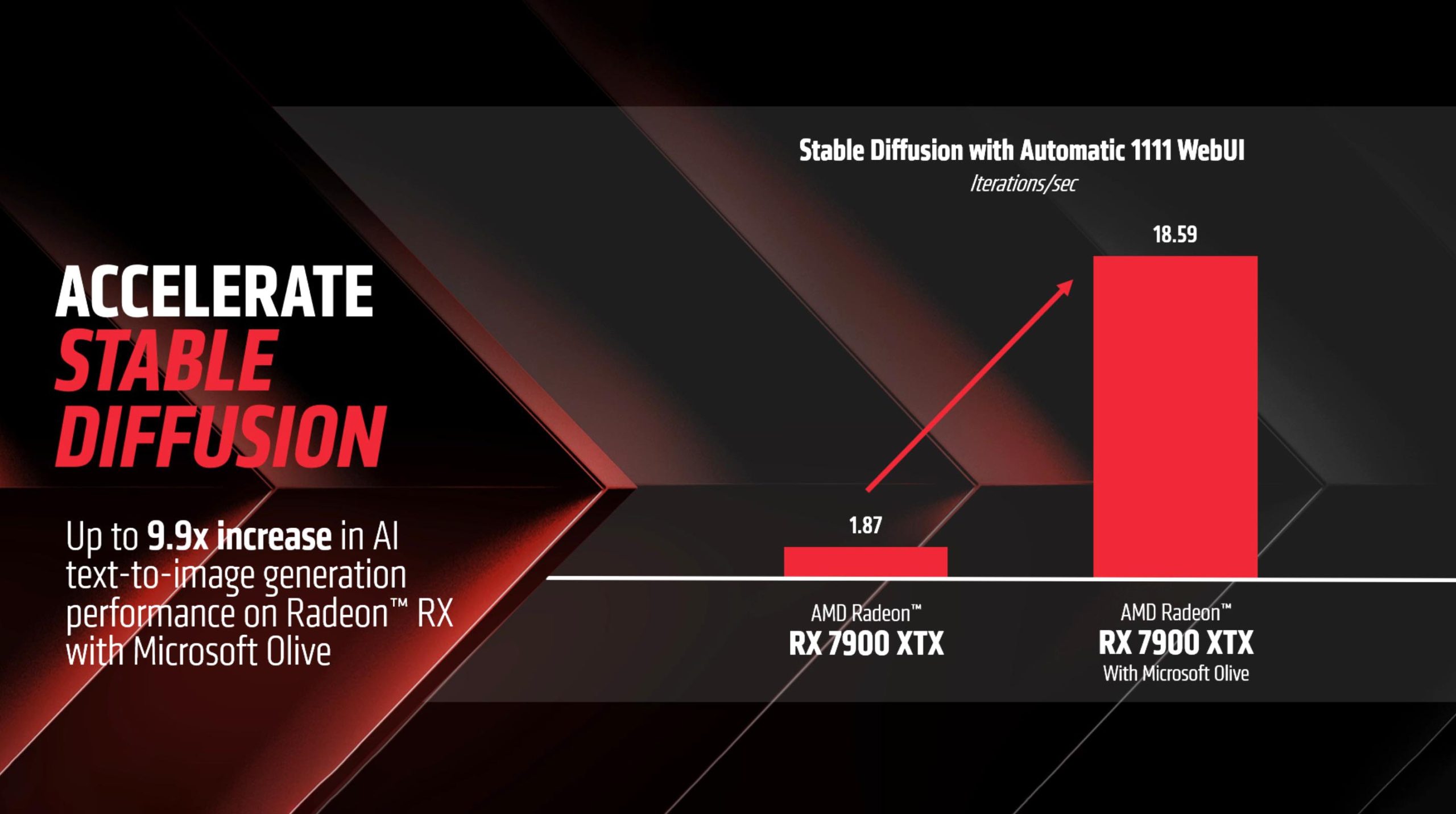

Open-sourcing everything is foolish; AMD alone has to fix the bugs in its own software.

Libraries, drivers, and frameworks need to be rock solid.
