
AMD Certifies PRO W7800 & RX 7900 GRE For ROCm, Officially Adds ONNX Runtime


-

Originally posted by sobrus:

"I know, but ROCm 5 added official support for RDNA2. And where is it now? Where is support for gfx1030 that used to work fine for years?

"It's rather obvious that ROCm 7 will drop official RDNA3 support. As soon as AMD gets tensor cores (real, not imagined), it will come to the conclusion that only the newest cards are good enough to have any form of support. Because they are THE cards.

"So many generations of AMD GPUs never saw any real support, and AMD is so committed to bringing support to all cards, and it improves ROCm so much, that ... nothing really changes. Empty promises, unusable software. Gaming is all that matters.

"So much for AMD, I'm waiting for Intel now. Time to get a real card that can do something more than gaming."

What do you mean imagined? They have AI accelerators (parallel matrix math units); we just haven't seen any proper benchmarks actually utilizing those AI accelerators. I do agree they should have official support for the entire RX 7000 series, not just their high-end.

AMD's RDNA3 graphics IP is just around the corner, and we are hearing more information about the upcoming architecture. Historically, as GPUs advance, it is not unusual for companies to add dedicated hardware blocks to accelerate a specific task. Today, AMD engineers have updated the backend of the ...


The Radeon RX 7900 XT GPU features the same RDNA™ 3 GPU architecture, comes with 20GB of fast on-board memory, and includes 168 AI accelerators, making it another great solution to accelerate workflows for ML training and inference on a local desktop.

Last edited by WannaBeOCer; 15 February 2024, 06:13 PM.


-

Originally posted by WannaBeOCer:
"What do you mean imagined? They have AI accelerators (parallel matrix math units)"

I know what AMD said, but for now, the only working WMMA implementation is on the CDNA architecture. AMD said somewhere that RDNA3 has AI units, but:

- to date I've seen no benchmark showing that they are enabled

- the max theoretical FP performance of RDNA3 is exactly 2*RDNA2 (because RDNA3 is dual issue). I bet they would be happy to quote a higher value to compete with NVIDIA's 1321 TOPS (~10x faster than the 7900 XTX), but apparently they can't.

Look what AMD is saying in the last link you've shared:

"AMD Radeon™ RX 7000 Series graphics cards are designed to accelerate advanced AI experiences. With over 2x stronger AI performance than the previous generation"

Well, my RX6800XT is also accelerating AI: PyTorch runs just fine. So I may say it's "designed to accelerate AI" too (note they didn't say it's got any sort of tensor cores, nor does the specs table say so; it lists just compute and ray units, like RDNA2).

And RX7000 is up to twice as fast because it is dual issue, not because it's got WMMA. If RX7000 had WMMA working, it would run circles around RX6000. This AMD sentence is misleading: technically they didn't lie, but they let your imagination tell you it's got tensor cores, while it only means it's dual-issue RDNA2.

RX7800XT and RX6800XT are more or less equal (and the older card is even faster in math-heavy tasks). The new card has 3840 cores, the old one has 4608.

Clock speeds are a bit higher on RX7000. It means that the real-life improvement over RDNA2 is around 20% or even less. Technically, it's "up to 2x", so AMD is correct again.
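The arithmetic behind the "up to 2x" figure can be sketched as follows. This is a rough illustration, not vendor data: the shader counts come from the post above, while the boost clocks (about 2.43 GHz for the RX 7800 XT and 2.25 GHz for the RX 6800 XT) and the helper name `peak_fp32_tflops` are assumptions added for the example. Peak FP32 throughput is taken as shaders × 2 FLOPs per fused multiply-add × clock, doubled when dual issue is fully exploited.

```python
def peak_fp32_tflops(shaders: int, clock_ghz: float, dual_issue: bool) -> float:
    """Theoretical peak FP32 throughput in TFLOPS (hypothetical helper)."""
    flops_per_clock = 2  # one fused multiply-add counts as 2 FLOPs
    issue_factor = 2 if dual_issue else 1
    return shaders * flops_per_clock * issue_factor * clock_ghz / 1000.0

# Shader counts from the post; boost clocks are assumed, approximate values.
rx6800xt = peak_fp32_tflops(4608, 2.25, dual_issue=False)
rx7800xt_best = peak_fp32_tflops(3840, 2.43, dual_issue=True)
rx7800xt_worst = peak_fp32_tflops(3840, 2.43, dual_issue=False)

print(f"RX 6800 XT: {rx6800xt:.1f} TFLOPS")
print(f"RX 7800 XT, dual issue fully used: {rx7800xt_best:.1f} TFLOPS "
      f"({rx7800xt_best / rx6800xt:.2f}x)")
print(f"RX 7800 XT, dual issue never used: {rx7800xt_worst:.1f} TFLOPS "
      f"({rx7800xt_worst / rx6800xt:.2f}x)")
```

Under these assumptions the paper ratio lands between roughly 0.9x and 1.8x, depending on how often instructions can actually be paired for dual issue.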

Don't buy promises, especially from AMD.

edit:

I've found specs table confirming RX7000 has some sort of "AI units":

But this doesn't change what I've written above, nor the fact that AMD says it's only "up to 2x" faster than previous generation.

So either the AI units are imagined, or bugged, or they just need some more years to enable them, maybe in ROCm 10 (supporting only Radeon RXT3950XTXT).

Last edited by sobrus; 16 February 2024, 04:01 AM.


-

Replying to sobrus's post above:

My Titan RTX is 2.5x faster using tensor cores compared to my Radeon VII when using FP16. Benchmarks I’ve seen of the RX 6900 XT showed similar FP16 performance to my Radeon VII. So their claims seem accurate, especially since their RX 7900 XTX only has 192 of the AI accelerators while my Titan RTX has 576 Tensor cores.

Here's a resnet50 benchmark I ran a while back on both my Radeon VII and Titan RTX using FP16.

Radeon VII

Step Img/sec total_loss

1 images/sec: 436.0 +/- 0.0 (jitter = 0.0) 7.875

10 images/sec: 435.7 +/- 0.2 (jitter = 0.6) 7.952

20 images/sec: 435.7 +/- 0.2 (jitter = 0.9) 7.956

30 images/sec: 435.3 +/- 0.2 (jitter = 0.9) 7.947

40 images/sec: 435.2 +/- 0.2 (jitter = 1.0) 7.958

50 images/sec: 435.2 +/- 0.2 (jitter = 0.8) 7.709

60 images/sec: 435.2 +/- 0.2 (jitter = 0.9) 7.898

70 images/sec: 435.2 +/- 0.1 (jitter = 0.8) 7.846

80 images/sec: 435.1 +/- 0.1 (jitter = 0.9) 7.977

90 images/sec: 435.2 +/- 0.1 (jitter = 0.8) 7.801

100 images/sec: 435.1 +/- 0.1 (jitter = 0.9) 7.782

----------------------------------------------------------------

total images/sec: 434.93

----------------------------------------------------------------

Titan RTX

Step Img/sec total_loss

1 images/sec: 1127.1 +/- 0.0 (jitter = 0.0) 7.788

10 images/sec: 1119.9 +/- 3.7 (jitter = 7.1) 7.741

20 images/sec: 1122.1 +/- 2.6 (jitter = 9.3) 7.826

30 images/sec: 1121.3 +/- 2.1 (jitter = 5.0) 7.962

40 images/sec: 1121.6 +/- 1.9 (jitter = 5.6) 7.885

50 images/sec: 1119.3 +/- 1.7 (jitter = 8.4) 7.795

60 images/sec: 1117.8 +/- 1.6 (jitter = 9.5) 8.012

70 images/sec: 1116.1 +/- 1.6 (jitter = 12.6) 7.874

80 images/sec: 1115.2 +/- 1.4 (jitter = 13.9) 7.929

90 images/sec: 1114.7 +/- 1.5 (jitter = 13.8) 7.739

100 images/sec: 1114.1 +/- 1.4 (jitter = 14.1) 8.000

----------------------------------------------------------------

total images/sec: 1112.65

----------------------------------------------------------------

Last edited by WannaBeOCer; 16 February 2024, 04:22 AM.
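As a quick check on the "2.5x" figure, the ratio can be computed directly from the two `total images/sec` lines above. This is just arithmetic on the posted numbers; the variable names are made up for the example.

```python
# Totals copied from the two resnet50 FP16 runs posted above.
radeon_vii_img_per_sec = 434.93
titan_rtx_img_per_sec = 1112.65

speedup = titan_rtx_img_per_sec / radeon_vii_img_per_sec
print(f"Titan RTX vs Radeon VII: {speedup:.2f}x")
```

The result is a little above 2.5x, in line with the claim in the post.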


-

But what are the AI accelerators for, if they don't provide any significant performance boost? Why are they usually not even mentioned in GPU specs?

One may say that RDNA2 had AI accelerators too. My card has 72 CUs, so maybe it's got 144 AI units? Or rather 72?...

They do accelerate AI, despite not being tensor cores, right?

Tensor cores are independent; they can process data in parallel to the CUs. Again, this doesn't seem to be the case with RDNA3.

If RDNA3 had such a capability, wouldn't it be a nice idea to make use of it, let's say in FSR3? It doesn't seem to perform much better than RDNA2.

Why isn't AMD making use of all these sweet features?

What is more, RDNA2, at least some GPUs, has limited precision modes (as far as I remember, Microsoft confirmed it for series X GPU):

So, with INT4, my card should be 8x faster than with FP32, reaching 160TOPS. But in reality I've never seen anything faster than FP16, never ever.
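The 8x figure follows from how many narrow values fit into one 32-bit lane. A minimal sketch of that arithmetic, assuming throughput scales linearly with packing density (an idealization) and taking roughly 20 TFLOPS as the FP32 baseline implied by the post; `packed_speedup` is a hypothetical helper:

```python
def packed_speedup(bits: int, lane_bits: int = 32) -> int:
    """How many values of the given width fit into one lane."""
    return lane_bits // bits

fp32_tflops = 20.0  # assumed baseline, implied by 160 TOPS / 8
for name, bits in [("FP16", 16), ("INT8", 8), ("INT4", 4)]:
    factor = packed_speedup(bits)
    print(f"{name}: {factor}x -> {fp32_tflops * factor:.0f} T(FL)OPS peak")
```

These are paper numbers only; whether a framework ever emits the packed instructions is exactly the complaint being made here.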

Where are all the bells and whistles of AMD GPUs?

Same goes for ray tracing. AMD says it's vastly improved, but benchmark results don't seem to show any significant progress vs RDNA2. Of course, the higher-end RX7000 cards are fast, faster than any RDNA2 card, but they still lag behind Nvidia.

So, to sum up, AMD claims various nice things about their GPUs, but you just can't use all this power. Not even under Windows. In reality, RDNA3 performs just as well as a dual-issue RDNA2 would. Maybe even worse.

Last edited by sobrus; 16 February 2024, 04:49 AM.


-

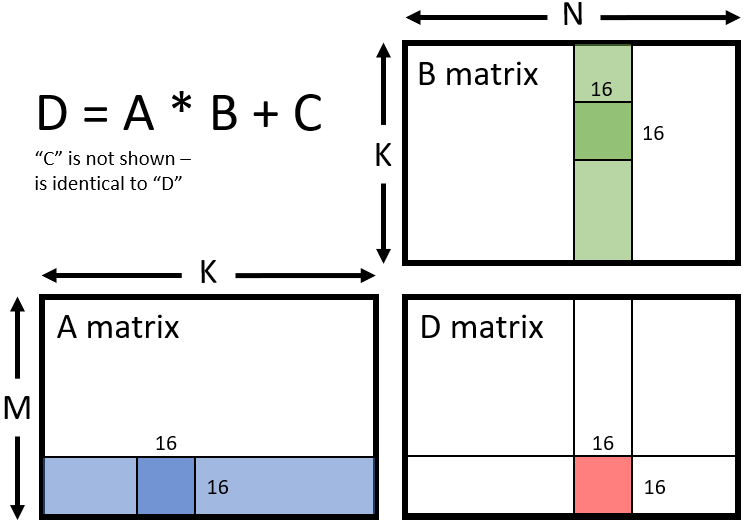

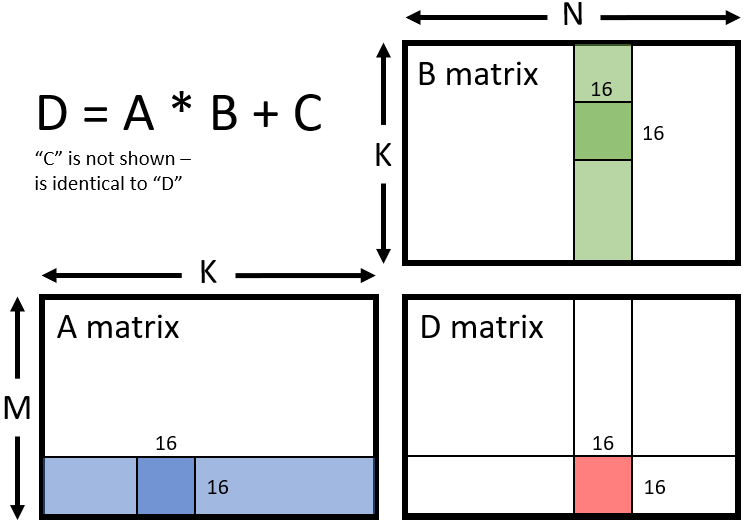

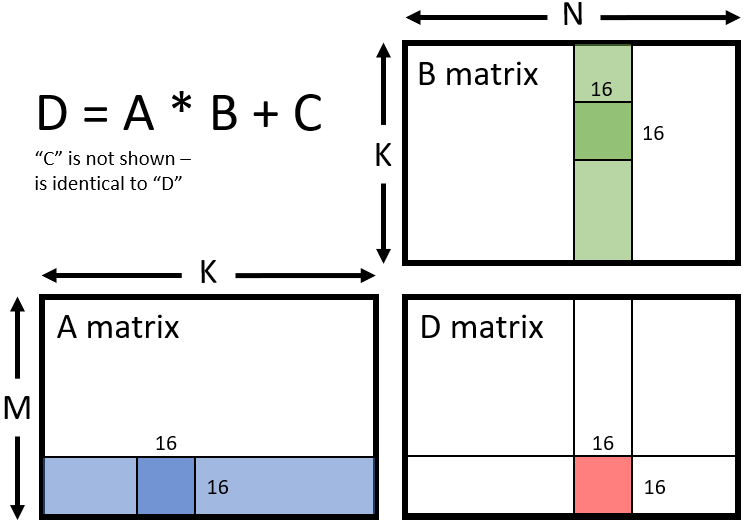

Replying to sobrus's post above:

They're for machine learning and inference, just like Nvidia's Tensor cores. As I mentioned earlier, AI accelerators are parallel matrix math units, just like Tensor cores. They just have a different name because of branding.

Researchers and developers working with Machine Learning (ML) models and algorithms using PyTorch can now use AMD ROCm 5.7 on Ubuntu® Linux® to tap into the parallel computing power of the Radeon™ RX 7900 XTX and the Radeon™ PRO W7900 graphics cards which are based on the AMD RDNA™ 3 GPU architectur...

ACCELERATE MACHINE LEARNING ON YOUR DESKTOP- With today’s models easily exceeding the capabilities of standard hardware and software not designed for AI, ML engineers are looking for cost-effective solutions to develop and train their ML-powered applications. A local PC or workstation system with a Radeon 7900 series GPU presents a capable, yet affordable solution to address these growing workflow challenges thanks to large GPU memory sizes of 24GB and even 48GB.

- Radeon™ 7900 series GPUs built on the RDNA™ 3 GPU architecture now come with up to 192 AI accelerators and feature more than 2x higher AI performance per Compute Unit (CU) compared to the previous generation.


-

Originally posted by WannaBeOCer:
"They're for machine learning and inference just like Nvidia's Tensor cores. As I mentioned earlier, AI accelerators are parallel matrix math units just like Tensor cores. Just have a different name because branding."
https://community.amd.com/t5/ai/amd-...on/ba-p/637756

This doesn't change anything.

"Radeon™ 7900 series GPUs built on the RDNA™ 3 GPU architecture now come with up to 192 AI accelerators and feature more than 2x higher AI performance per Compute Unit (CU) compared to the previous generation."

Again, RDNA3 CU is theoretically 2x faster than RDNA2 CU, because it is dual issue (same story as RTX2000 vs RTX3000).

It implies that there is no matrix unit at all.

Everything AMD says about accelerating using RDNA3 may as well apply to RDNA2. The only difference is that for AMD, AI Optimized GPU is always the newest one ONLY. Always only the newest and most expensive is the truest AI accelerator.

RDNA2 was said to have advanced AI acceleration too. See DirectML section here:

[Editor’s Note: Updated on 10/21 at 11AM to ensure it is now reflective of the capabilities across both of our next-gen Xbox consoles following the unveil of Xbox Series S.] As we enter a new generation of console gaming with Xbox Series X and Xbox Series S, we’ve made a number of technology advancements across […]


The problem is that these mythical AI capabilities are not exposed by any framework, and are dropped/forgotten once a newer AMD GPU is released.

edit:

OK I stand corrected

RDNA3 indeed seems to have WMMA:

This blog is a quick how-to guide for using the WMMA feature with our RDNA 3 GPU architecture using a Hello World example.


And I've even found a real-life application that exceeds AMD's own performance claims:

RDNA3 may not be as bad as it seemed.
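For readers unfamiliar with the term, WMMA (wave matrix multiply-accumulate) computes D = A × B + C on small matrix tiles. The pure-Python reference below only illustrates that math on a 16×16×16 tile; it is not AMD's intrinsic or the code from the linked blog, and `wmma_reference` is a name invented for this sketch.

```python
TILE = 16  # RDNA 3 WMMA instructions operate on 16x16x16 tiles

def wmma_reference(a, b, c):
    """Return D = A x B + C for TILE x TILE matrices given as lists of rows."""
    return [
        [
            c[i][j] + sum(a[i][k] * b[k][j] for k in range(TILE))
            for j in range(TILE)
        ]
        for i in range(TILE)
    ]

# Example: A is the identity, so D should equal B + C.
identity = [[1.0 if i == j else 0.0 for j in range(TILE)] for i in range(TILE)]
b = [[float(i * TILE + j) for j in range(TILE)] for i in range(TILE)]
c = [[0.5] * TILE for _ in range(TILE)]
d = wmma_reference(identity, b, c)
print(d[0][:4])
```

A hardware matrix unit handles a whole tile update like this per instruction rather than element by element, which is why working WMMA support would be expected to outpace plain dual-issue vector math.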

Last edited by sobrus; 16 February 2024, 08:00 AM.


-

Replying to sobrus's post above:

The 2x performance claim is for machine learning. Stable diffusion is an inference workload.

The Machine Intelligence Shader Autogen (MISA) library will soon release WMMA support to accelerate models like Resnet50 for a performance uplift of roughly 2x from RDNA 2


-

Replying to sobrus's post above:

I am pretty sure the smallest integer width in use in AI workloads is INT8, not INT4... I do not know of any inference implementation using INT4...

So it is nonsense to demand INT4 acceleration... What is in use are minifloats like FP4, FP6 and FP8, but of course my Vega64 only supports FP16 in hardware, not any smaller format. Also keep in mind that INT8 is not in use on GPUs; INT8 is only used to accelerate inference on CPUs.

The only implementation that uses any 4-bit data formats (INT4/FP4) is Nvidia's DLSS, to calculate things whose precision is not critical.
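To make the formats under discussion concrete, here is a minimal sketch of symmetric integer quantization at a chosen bit width. It is an illustration only, not taken from any framework mentioned in this thread; the function names and the per-tensor scaling scheme are assumptions made for the example.

```python
def quantize(values, bits):
    """Map floats to signed integers of the given width (symmetric, per-tensor)."""
    qmax = 2 ** (bits - 1) - 1          # 127 for INT8, 7 for INT4
    peak = max(abs(v) for v in values)
    scale = peak / qmax if peak > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    """Recover approximate floats from the quantized integers."""
    return [x * scale for x in q]

weights = [0.82, -0.41, 0.05, -1.27, 0.33]
for bits in (8, 4):
    q, scale = quantize(weights, bits)
    restored = dequantize(q, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"INT{bits}: q={q} scale={scale:.4f} max error={worst:.4f}")
```

Halving the bit width doubles how many values fit in a register but coarsens the grid, so the worst-case rounding error grows as the width shrinks.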

By the way, RDNA3 has 17% higher ray tracing performance than RDNA2... so please stop spreading lies.

Phantom circuit Sequence Reducer Dyslexia

Comment

-

Replying to sobrus's edit above:

Man, you are a genius in your negativity... really.
