Originally posted by Panix

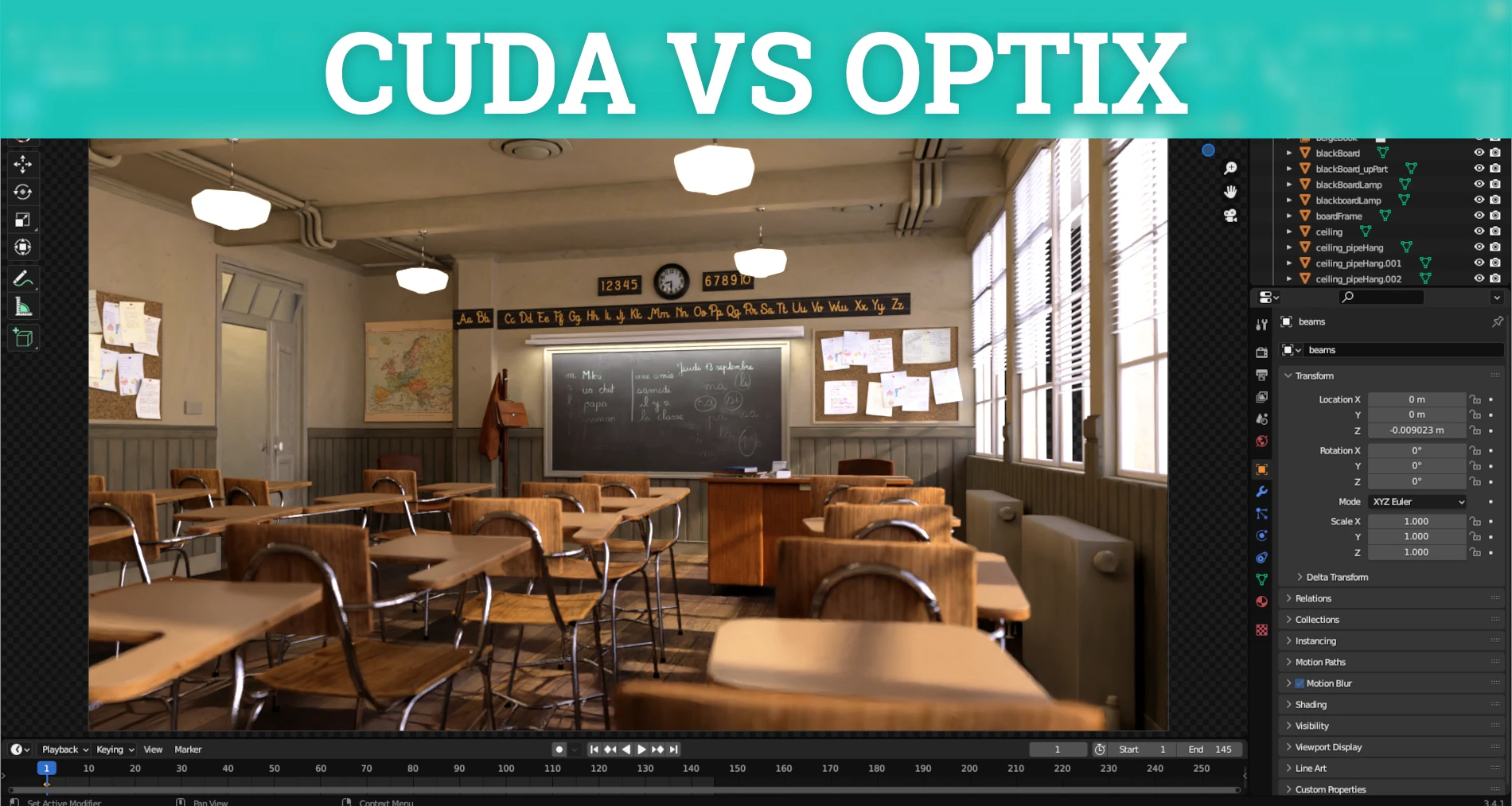

You can ignore the fact that Renders with Optix look like ass all you want.

You do -NOT- get better performance; what you actually get is much worse quality.

EDIT: And that has pretty much always been nVidia's go-to modus operandi. On pretty much every generation of every card in every product line on every API, nVidia hardware produces worse quality output. It -ALWAYS- has. Optix takes that modus operandi to an extreme... Again...

EDIT: When nVidia can't win they cheat...
