Originally posted by juno

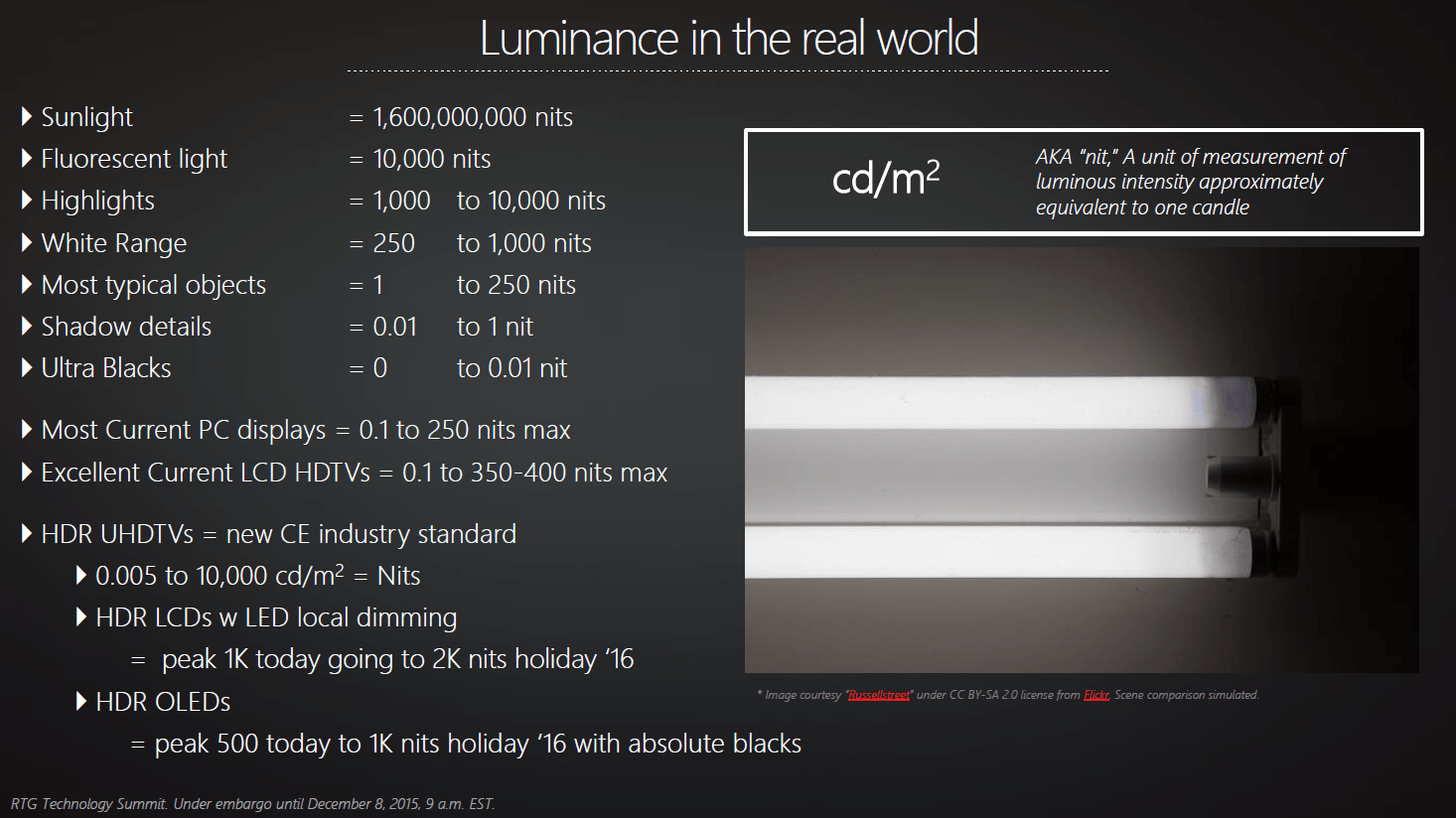

And sorry, HDR monitors are not emerging. They have been around for a long time, and they are characterized by their 10-bit color space.

It's like audio: when we talk about dynamic range there, we talk about 16-bit versus higher bit precisions. The actual volume the speakers can play is completely irrelevant. Audio doesn't become more "HDR" just because your speakers can play at stadium levels.
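To put rough numbers on the audio side of that comparison, here is a quick sketch. It assumes ideal linear PCM, where the theoretical dynamic range is 20·log10(2^N) for N bits (roughly 6 dB per bit). `dynamic_range_db` is just a name I made up for illustration, not a function from any library.

```python
import math

def dynamic_range_db(bits: int) -> float:
    """Theoretical dynamic range in dB of ideal linear PCM at a given bit depth.

    Assumption: ratio of the largest to the smallest representable step,
    ignoring dither and noise shaping.
    """
    return 20 * math.log10(2 ** bits)

for bits in (16, 24):
    print(f"{bits}-bit: {dynamic_range_db(bits):.1f} dB")
# 16-bit: 96.3 dB
# 24-bit: 144.5 dB
```

Nothing in that calculation depends on how loud the speakers can go; only the bit depth enters into it.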
