Phoronix: Intel Xeon Max Performance Delivers A Powerful Combination With AMX + HBM2e

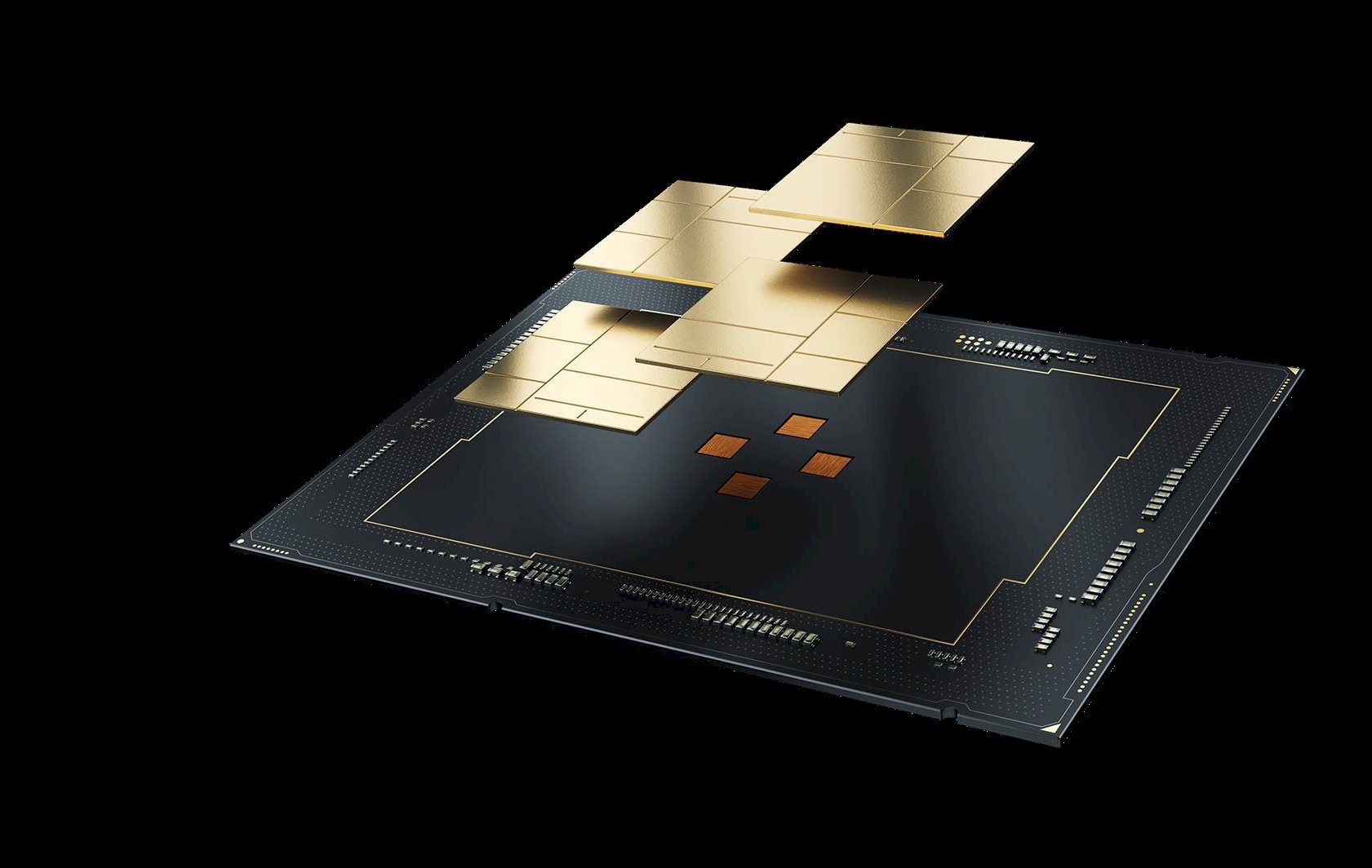

The Intel Xeon Max 9480, the flagship Sapphire Rapids CPU with HBM2e memory, tops out at 56 cores / 112 threads, so how can it compete with the latest AMD EPYC processors hitting 96 cores for Genoa (or 128 cores with the forthcoming Bergamo)? Besides the on-package HBM2e that is unique to the Xeon Max family, the other ace that Xeon Max shares with the rest of the Sapphire Rapids line-up is support for the Advanced Matrix Extensions (AMX). Today's benchmarks of the Intel Xeon Max show precisely how HBM2e and AMX allow it to compete with, and outperform, AMD's EPYC 9554 and 9654 processors in AI workloads when software effectively leverages AMX and the on-package HBM2e memory.
