
Intel Xeon Max 9480/9468 Show Significant Uplift In HPC & AI Workloads With HBM2e


But will you actually be able to rent one? Much less buy one?Originally posted by ms178 View Post

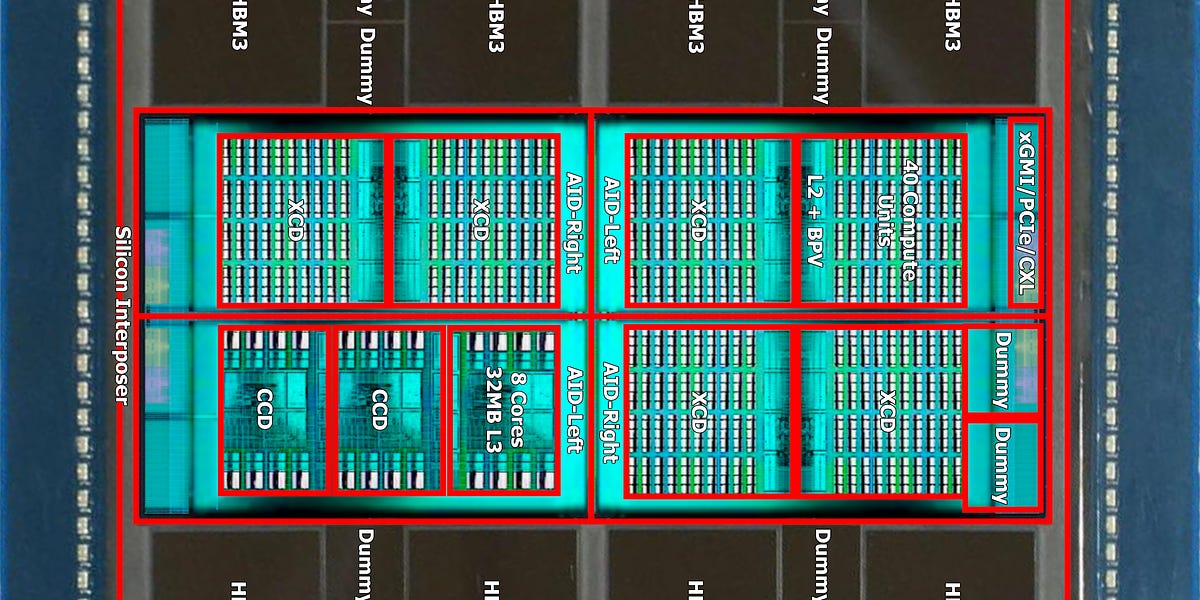

Well, back in 2012, I hoped for a big server APU with HBM and it is interesting in itself that the idea took so long to materialize in hardware (and that Intel is beating AMD to market with that concept). I am also thrilled what AMD has to offer with the MI300A and which one is better product in the end. If I remember correctly IBM was lobbying the industry to move into a different direction with their memory architecture that also supports GDDR and non-volatile sticks used as DIMMs. But I am not following the HPC/AI market that closely.

It seems the MI300A in particular is aimed at mega HPC clusters, and that cloud providers will be buying the GPU only variant... Or possibly a smaller PCIe variant:

AMD MI300 – Taming The Hype – AI Performance, Volume Ramp, Customers, Cost, IO, Networking, Software. Amazing engineering, but what of the path to market?


Originally posted by brucethemoose:

> But will you actually be able to rent one? Much less buy one?
>
> It seems the MI300A in particular is aimed at mega HPC clusters, and that cloud providers will be buying the GPU-only variant... or possibly a smaller PCIe variant:
>
> https://www.semianalysis.com/p/amd-m...ai-performance

Thanks for the link! I also briefly watched the AI podcast with Dr. Ian Cutress talking about some of the contenders. I also doubt we will see an analog to the MI300A in the consumer space (even for enthusiasts) anytime soon. But the concept might work for HEDT products later on if its costs can be brought down further.


Originally posted by ms178:

> I also briefly watched the AI podcast with Dr. Ian Cutress talking about some of the contenders.

AnandTech went to shit without him & Andrei. No more SPEC2017, no more server CPU reviews, and no more phone SoC deep dives. Now, even when AnandTech manages to do a CPU review, we don't get the same quality or coverage as before: no P-core vs. E-core performance or efficiency comparisons on Raptor Lake, for instance.

I can't deal with YouTube, though. It's a shame print journalism no longer seems to pay the bills.

Originally posted by ms178:

> I also doubt we will see an analog to the MI300A in the consumer space (even for enthusiasts) anytime soon.

At least RDNA3 has matrix cores and a 384-bit memory bus, not to mention around 5.2 TB/s from its L3 cache chiplets. Its main bottleneck is just too few matrix cores.

I also think we could see the return of HBM to consumer GPUs before too long. Prices need to stabilize first.
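For context on the bus-width point versus HBM: theoretical peak DRAM bandwidth is bus width (bits) times per-pin data rate (Gbit/s), divided by 8 bits per byte. The minimal Python sketch that follows illustrates that arithmetic. The per-pin rates are assumptions not stated in the thread: 20 Gbps GDDR6 on a 384-bit bus (as on the Radeon RX 7900 XTX) and 2 Gbps HBM2 across four 1024-bit stacks (as on the Radeon VII). These are paper peaks, not measured throughput, and they ignore on-die cache bandwidth such as the ~5.2 TB/s cache figure mentioned above.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak DRAM bandwidth in GB/s.

    bus_width_bits: total memory interface width in bits
    data_rate_gbps: per-pin data rate in Gbit/s
    Dividing by 8 converts bits to bytes.
    """
    return bus_width_bits * data_rate_gbps / 8


# Assumed configurations (not from the thread):
# a narrow-but-fast GDDR6 bus vs. a wide-but-slow HBM2 interface.
gddr6 = peak_bandwidth_gb_s(384, 20.0)      # 384-bit GDDR6 at 20 Gbps per pin
hbm2 = peak_bandwidth_gb_s(4 * 1024, 2.0)   # 4 HBM2 stacks x 1024 bits at 2 Gbps per pin

print(f"384-bit GDDR6 @ 20 Gbps: {gddr6:.0f} GB/s")  # 384-bit GDDR6 @ 20 Gbps: 960 GB/s
print(f"4096-bit HBM2 @ 2 Gbps: {hbm2:.0f} GB/s")    # 4096-bit HBM2 @ 2 Gbps: 1024 GB/s
```

The contrast is the design trade-off HBM makes: a very wide interface at a low per-pin rate, which is what requires the interposer-style packaging discussed later in the thread.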


Originally posted by coder:

> AnandTech went to shit without him & Andrei. No more SPEC2017, no more server CPU reviews, and no more phone SoC deep dives. Now, even when AnandTech manages to do a CPU review, we don't get the same quality or coverage as before: no P-core vs. E-core performance or efficiency comparisons on Raptor Lake, for instance.
>
> I can't deal with YouTube, though. It's a shame print journalism no longer seems to pay the bills.
>
> At least RDNA3 has matrix cores and a 384-bit memory bus, not to mention around 5.2 TB/s from its L3 cache chiplets. Its main bottleneck is just too few matrix cores.
>
> I also think we could see the return of HBM to consumer GPUs before too long. Prices need to stabilize first.

No way. HBM is going to be sucked up by server AI chips like they are vacuums unless production volume changes drastically.

Besides, GDDRX gives more capacity per dollar, which is really the key to desktop AI capability these days. I guarantee Arc A770s would be flying off the shelves if they had a 32 GB variant.

Last edited by brucethemoose; 03 July 2023, 11:43 PM.


Originally posted by brucethemoose:

> No way. HBM is going to be sucked up by server AI chips like they are vacuums unless production volume changes drastically.

I think the main difference is that HBM requires an additional packaging step. TSMC just announced it's building another facility to do that, due to the demand from AI chips.

As for the HBM dies themselves, I don't see why they're any different. It's just DRAM. You can fab it on the same production lines as other types.

Originally posted by brucethemoose:

> Besides, GDDRX gives more capacity/$, which is really the key to desktop AI capability these days.

That's only due to the current supply/demand situation. Back in 2019, the Radeon VII shipped with 16 GiB of HBM2 for just $700, and it was also the first consumer GPU on TSMC N7.
