Originally posted by stormcrow

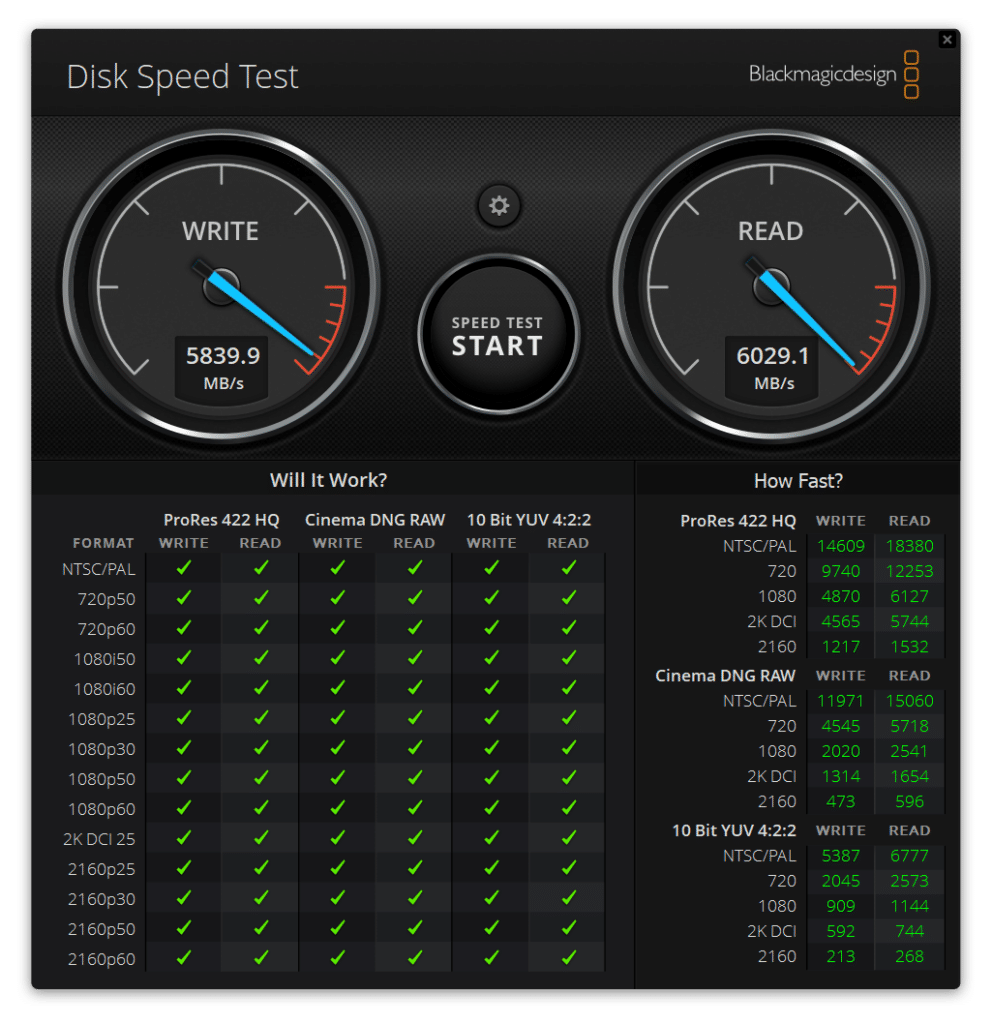

Blackmagic Design provides a Speed Test tool that you can use to determine whether your SSD is fast enough for various formats and resolutions.

I think it's included in their "Desktop Video" download package, which is available for Mac, Windows, or Linux:

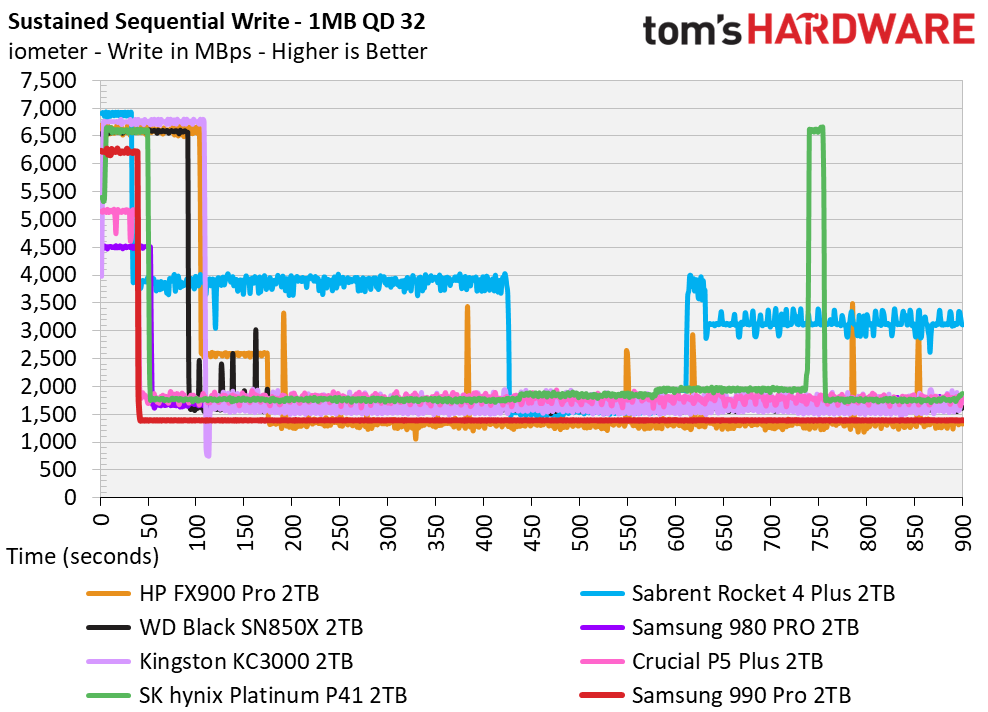

Here's the result that Storage Review got for the P44, on Windows 11:

https://www.storagereview.com/review...ssd-review-2tb

Ah, it's a pain with archival and backups sometimes.