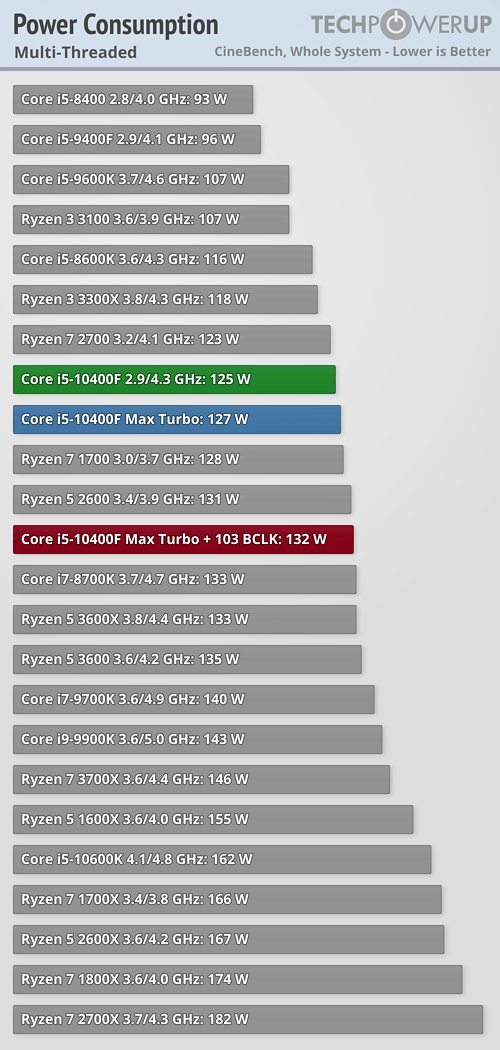

Is an Intel fanboy actually trying to argue that Intel's chips are better at power use?

Wow, somebody has lost their mind.

Stick to arguing that single-threaded performance is all that matters if you want anyone to take you seriously.

I care about value for the money and my use cases.