Originally posted by andre30correia

Not for the model.

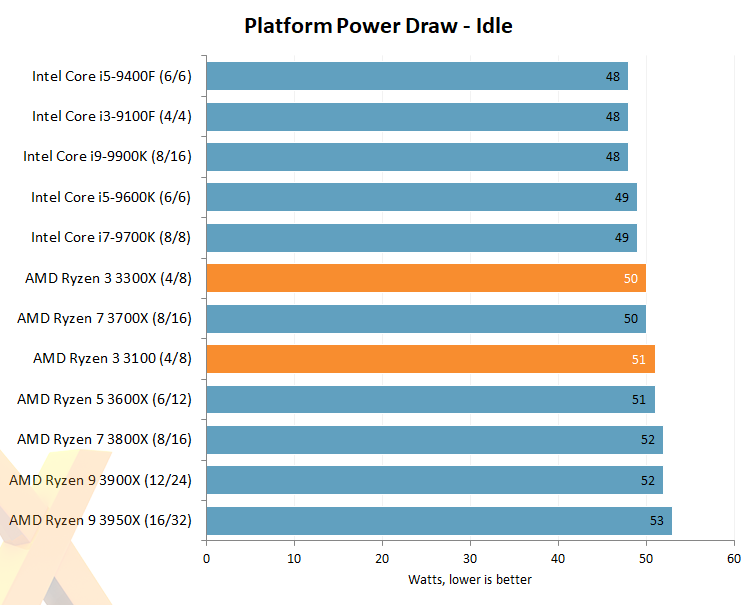

You can see the difference between the 3300X and the 3600X is around 10%, maybe 15%. The 3600 might only be 5-7% more powerful than the 3300X. That doesn't justify a 50€ difference in my eyes (it will probably be more like 135-140€ in Europe). I'd rather spend that money on better RAM or a better AM4 motherboard instead. I could probably get a CPU + 16 GB DDR4-3200 RAM + B450M motherboard for around 250-270€ with the Ryzen 3300X. That is an unbeatable price for that quality, and for roughly 3x the performance of my current setup (which is close to the A10-7870K in the test).
