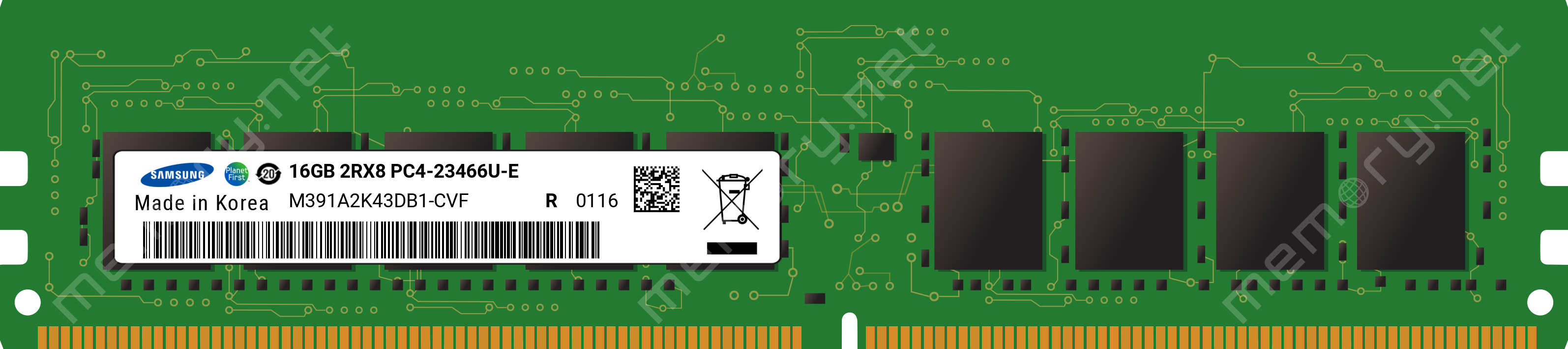

This is the fastest I have found so far :

Depending on the final setup I will go for and what goes after the 7th... I may (try to) get those.


The ECC DDR4 RAM Overclocking Potential With AMD Threadripper On Linux


-

Happy to take part in any conspiracy to get faster ECC! I want 3200MHz+ for a Ryzen rig.


-

Originally posted by Weasel:
> Cosmic Rays and a million other quantum effects that can flip bits.
>
> ECC was not intended for fixing faulty hardware / RAM. But to correct random errors. Like it or not, random errors happen, and it has nothing to do with the quality of the RAM module, but with external factors (cosmic rays) or quantum effects.

Actually, sometimes even having the ATX power cable too close to the RAM modules can greatly increase the probability of memory errors.

It may not have been the intent, but ECC is pretty much the only thing that can save you in time from a gradually dying memory stick. I've had this problem before and it corrupted hundreds of gigs of data for me. The thing is that the failure is initially completely invisible, and by the time it becomes evident that there is a problem, you can have days or even weeks of data corruption. In my case there was this single application that was randomly freezing, and nothing else. At first I thought the app had data corruption in its binaries, but the crashes persisted even after reinstallation. Then I thought it was a bug in the application, as again, nothing else in the system showed any evidence of a hardware problem.

Eventually, after about a week or so, the system got to a BSOD, then I ran memtest and found a slew of errors: one of the 4 modules had failed. Then it was a huge pain to figure out how much and what data was affected, so it could be reproduced accurately.

And it was then that I said to myself "no more non-ECC memory for anything remotely important". I've had run-ins with single-bit errors before, which are more common than people expect, but those were minor nuisances compared to gradually dying RAM modules.

Also, keep in mind that now that DRAM cells have gotten even smaller, cosmic rays are gonna wreak havoc on them, as high-energy particles will affect more adjacent memory cells, so impact events will result in more than 1 corrupted bit most of the time. Newer-generation ECC RAM is almost powerless against most of the cosmic ray flavors, and it will take some design changes to work around that, like say on-module redundancy similar to RAID 1, or a memory layout in which physically adjacent cells are not logically adjacent, both with significant overheads that will inevitably mean a hefty price premium and reduced performance or efficiency.


-

Originally posted by schmidtbag:
> Yes... and where do you think those errors come from?

Cosmic Rays and a million other quantum effects that can flip bits.

ECC was not intended for fixing faulty hardware / RAM. But to correct random errors. Like it or not, random errors happen, and it has nothing to do with the quality of the RAM module, but with external factors (cosmic rays) or quantum effects.

Originally posted by schmidtbag:
> If it's due to a programmer error

Technically, to the hardware, a "programmer error" is a "feature" (quite literally) so it would never be able to correct this.


-

Originally posted by Weasel:
> I don't see what that has to do with anything. If it crashes due to overclock, reduce the overclock in the first place, or be at ease knowing it wasn't a silent data corruption instead of the crash, which is much worse.

Yeah.... that's not how multi-million (or billion) dollar companies work. Such a company risking such an experiment would be idiotic, not to mention it would completely cripple their support with the hardware manufacturers.

There's a reason why overclocking is so limited (or usually, non-existent) on servers.

When it comes to high-end workstations, companies prefer things done correctly the first time around rather than slightly faster with a higher chance of problems (that's not necessarily specific to hardware issues, just problems in general).

> No, these crashes I refer to are done on purpose by the kernel when they detect such an error, and crash the respective application having that page (or just mark the page invalid, crash when accessed, depends really). It can also shut down the PC, it really depends.

Yes... and where do you think those errors come from? If it's due to a programmer error, that's completely irrelevant to this discussion, because it'll happen regardless of overclocking. Therefore, it's due to hardware. Therefore, since the context here is RAM overclocking, if you have freezing issues (and assuming RAM is the only thing that's different vs a stable system), it's because the RAM's data integrity is compromised.

> It's no different than your motherboard shutting the computer down when it detects an anomaly. You really would NOT want to have it keep running in that state, so a crash is the right thing to do. A crash on purpose.

Such anomalies more often than not are from people meddling with the hardware in ways they're not supposed to. See the pattern here?

> Note that non-ECC memory can still detect single-bit errors (but not correct them) which leads to the good crash-on-purpose safety.

No crashing is good crashing. I agree and take your point that crashing on purpose is objectively better than silent corruption, but crashing (especially due to hardware issues) is not as trivial as you're making it out to be. It doesn't matter that much for the average desktop PC user, which is why we don't tend to use ECC RAM. Unless a company has a fully redundant server room (which is obviously desirable but woefully expensive), any downtime can be a major financial loss.

Last edited by schmidtbag; 28 December 2018, 10:27 AM.


-

Originally posted by schmidtbag:
> Tell that to mainframe admins.

I don't see what that has to do with anything. If it crashes due to overclock, reduce the overclock in the first place, or be at ease knowing it wasn't a silent data corruption instead of the crash, which is much worse.

Originally posted by schmidtbag:
> Do you not realize that crashes are often caused by poor data integrity?

No, these crashes I refer to are done on purpose by the kernel when they detect such an error, and crash the respective application having that page (or just mark the page invalid, crash when accessed, depends really). It can also shut down the PC, it really depends.

It's no different than your motherboard shutting the computer down when it detects an anomaly. You really would NOT want to have it keep running in that state, so a crash is the right thing to do. A crash on purpose.

Note that non-ECC memory can still detect single-bit errors (but not correct them) which leads to the good crash-on-purpose safety.
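For anyone who wants to see whether the kernel has actually logged memory errors on an ECC system: Linux exposes per-memory-controller counters through the EDAC sysfs interface (`ce_count` for corrected errors, `ue_count` for uncorrected ones). The following is only a minimal sketch, assuming an EDAC driver for the platform is loaded; the helper name `read_edac_counts` is made up for illustration, and on a machine without EDAC support it simply returns an empty result.

```python
from pathlib import Path


def read_edac_counts(root="/sys/devices/system/edac/mc"):
    """Return {controller: {"ce_count": int|None, "ue_count": int|None}}.

    `root` defaults to the usual EDAC sysfs location; it is a parameter
    so the function can be pointed at a different directory.
    """
    counts = {}
    for mc in sorted(Path(root).glob("mc[0-9]*")):
        entry = {}
        for name in ("ce_count", "ue_count"):
            try:
                entry[name] = int((mc / name).read_text().strip())
            except (OSError, ValueError):
                # Counter missing or unreadable: report it as unknown.
                entry[name] = None
        counts[mc.name] = entry
    return counts


if __name__ == "__main__":
    result = read_edac_counts()
    if not result:
        print("No EDAC memory controllers found (driver not loaded?)")
    for mc, entry in result.items():
        print(mc, "corrected:", entry["ce_count"], "uncorrected:", entry["ue_count"])
```

A rising corrected-error count after raising the memory clock is a hint that the overclock is marginal, even if nothing has crashed yet.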


-

Originally posted by Weasel:
> Legit nobody cares about crashes. You have quite an obsession with them. They're trivial.

Tell that to mainframe admins.

> The real issue is data integrity and silent data corruption. ECC can detect two-bit errors, and that's the most important thing, they would be completely silent on a non-ECC system.

Do you not realize that crashes are often caused by poor data integrity?


-

Originally posted by schmidtbag:
> Since you have no proof of silicon quality at purchase, the ECC module could very easily end up getting enough errors to cause an occasional system crash on a non-ECC module.

Legit nobody cares about crashes. You have quite an obsession with them. They're trivial.

The real issue is data integrity and silent data corruption. ECC can detect two-bit errors, and that's the most important thing, they would be completely silent on a non-ECC system.

Overclocking or not, the ability for it to detect these is the most important, by far, even if the system crashes. Crashing on a two bit error is much better than silent corruption. And that's just as important when you overclock anything.
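To make the "corrects one bit, detects two" behaviour concrete, here is a toy sketch of a SECDED (single error correction, double error detection) code. It is an extended Hamming(8,4) code over 4 data bits, chosen only because it is small enough to read; real ECC DIMMs protect 64 data bits with 8 check bits, and the exact code is up to the memory controller. The function names `encode` and `decode` are illustrative, not from any library.

```python
def encode(nibble):
    """Encode 4 data bits as an 8-bit extended Hamming codeword (list of bits).

    Index 0 holds the overall parity bit; indices 1..7 are the classic
    Hamming(7,4) positions, with parity bits at 1, 2 and 4.
    """
    code = [0] * 8
    code[3] = (nibble >> 0) & 1
    code[5] = (nibble >> 1) & 1
    code[6] = (nibble >> 2) & 1
    code[7] = (nibble >> 3) & 1
    code[1] = code[3] ^ code[5] ^ code[7]
    code[2] = code[3] ^ code[6] ^ code[7]
    code[4] = code[5] ^ code[6] ^ code[7]
    code[0] = sum(code[1:]) % 2  # makes the parity of the whole word even
    return code


def decode(code):
    """Return (status, nibble); status is 'ok', 'corrected' or 'uncorrectable'."""
    code = list(code)
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos          # XOR of the positions of all set bits
    odd_parity = sum(code) % 2       # 1 if an odd number of bits flipped

    if syndrome == 0 and not odd_parity:
        status = "ok"
    elif odd_parity:
        # One bit flipped: the syndrome is its position (0 = overall parity bit).
        code[syndrome] ^= 1
        status = "corrected"
    else:
        # Nonzero syndrome with even parity: two bits flipped. Detected, not fixable.
        return "uncorrectable", None

    nibble = code[3] | (code[5] << 1) | (code[6] << 2) | (code[7] << 3)
    return status, nibble


if __name__ == "__main__":
    word = encode(0b1011)
    one_flip = list(word)
    one_flip[5] ^= 1
    two_flips = list(one_flip)
    two_flips[2] ^= 1
    print(decode(word), decode(one_flip), decode(two_flips))
```

The "uncorrectable" branch is the case being argued about in this thread: the hardware knows the word is bad but cannot repair it, so the system can stop on purpose instead of silently using corrupt data. Three or more flips in one word can be miscorrected or missed, which is the limitation raised earlier about multi-bit upsets.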


-

It's true, that would have been better. The only 2666 MHz B-Die ECC DIMMs I found were 8 gigs each, which wasn't enough. I want four slots free for future expansion. We do what we can. I think you're seeing my point now though. It's a fairly niche situation I'm in, but I think I made the right call.


-

Originally posted by MaxToTheMax:
> ECC @ 2933 is less risky than non-ECC @ 2933 because it can correct errors. The stock clocks aren't magic.

I agree, but you can't reliably say the same of ECC @ 3.2 overclocked vs non-ECC @ 3.2 stock. ECC isn't magic.

> If you look at my performance results, 2133 MHz RAM was strangling my CPU, and 2933 MHz is a whole lot faster. So the performance is good enough, and the stability is superior to non-ECC at an equivalent or higher (or lower) clock. So I achieved both of my goals, albeit at significant cost. As opposed to no protection against bit errors, which will lead to instability on a system with this much RAM. On a high-uptime 24/7 system with this much memory, ECC is pretty much mandatory, but 2133 MHz RAM is unacceptable from a performance standpoint. The solution is overclocking.

I can totally see how 2.1 would be insufficient, and in a scenario where data integrity is important, I would agree that ECC overclocked to 3.0 would be a better option than 3GHz non-ECC, especially with timing adjustments. Though, you have significantly increased your risks by doing a roughly 0.9GHz jump. Statistically, 2.6 to 3.0 would've yielded more reliable results.
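For a rough sense of what the 2133 to 2933 jump is worth on paper, theoretical peak bandwidth is just transfer rate times bus width times channel count. The sketch below assumes a 64-bit (8-byte) data path per channel and four channels, which is an assumption about the Threadripper setup discussed here, not something stated in the thread; the ECC check bits are carried on extra lines and do not add to usable bandwidth. Real-world throughput also depends on timings and will be lower.

```python
def peak_bandwidth_gb_s(mega_transfers_per_s, channels=4, bus_bytes=8):
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes).

    channels=4 and bus_bytes=8 are assumed defaults for a quad-channel
    DDR4 platform; adjust them for other configurations.
    """
    return mega_transfers_per_s * 1e6 * bus_bytes * channels / 1e9


if __name__ == "__main__":
    stock = peak_bandwidth_gb_s(2133)
    overclocked = peak_bandwidth_gb_s(2933)
    print(f"DDR4-2133: {stock:.1f} GB/s")
    print(f"DDR4-2933: {overclocked:.1f} GB/s")
    print(f"Increase:  {100 * (overclocked / stock - 1):.1f} %")
```

Under those assumptions the overclock is worth a bit under 40 % more peak bandwidth, before any gain from tighter timings.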
