Llamafile 0.8.14 Introduces New CLI Chatbot Interface

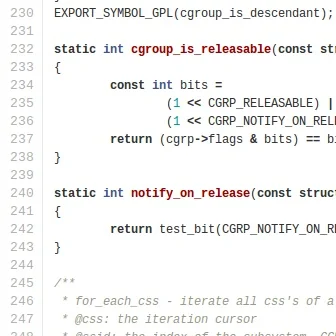

Llamafile 0.8.14 was released overnight. Llamafile is open-source code that eases large language model deployments, and this release introduces a new CLI chatbot interface. The interface supports multi-line input, syntax highlighting for Python, C, C++, Java, and JavaScript code, and a variety of other features. It is now the default mode of operation when running a llamafile without any alternative arguments. The chatbot was inspired by Ollama.

Other Llamafile 0.8.14 changes include using a BF16 KV cache for faster performance, always favoring FP16 arithmetic within tinyBLAS, GPU support in llamafile-bench, and more.

Downloads and more details on the Llamafile 0.8.14 release are available via GitHub.
