Phoronix: Gneural Network: GNU Gets Into Programmable Neural Networks

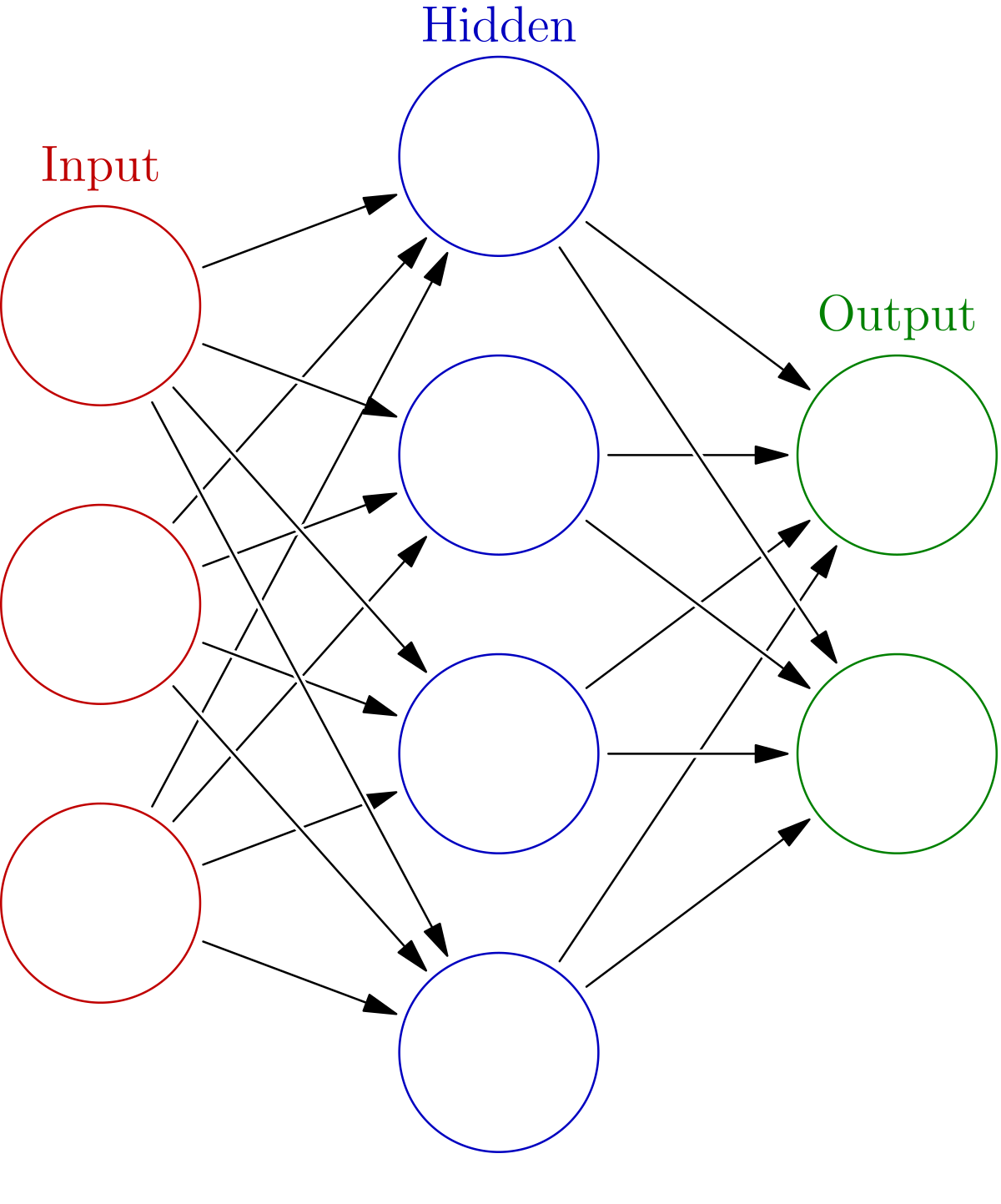

The inaugural release of Gneural Network is now available, a new GNU Project to implement programmable neural networks...
