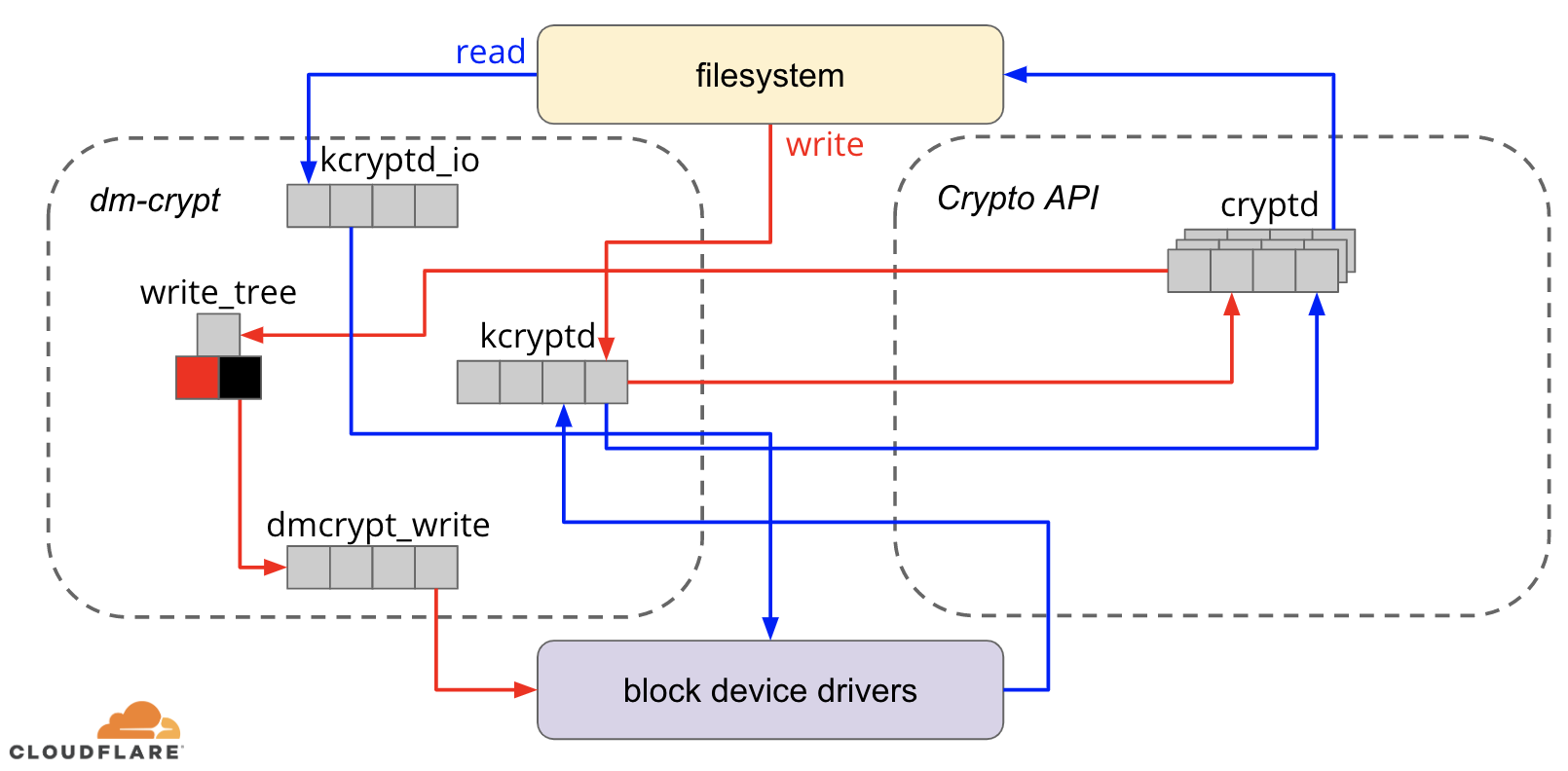

What I was hoping to see in the Cloudflare blog post, but which they seem to have skipped, was the effect of reducing, but not necessarily eliminating, the number of async queuing layers. The dm-crypt queuing code was written when the Crypto API was still synchronous. The Crypto API has since been made asynchronous by adding its own internal queuing.

How much of the benefit would be realized by removing the queuing only in the dm-crypt code, instead of removing it in both layers?
