Originally posted by Serafean



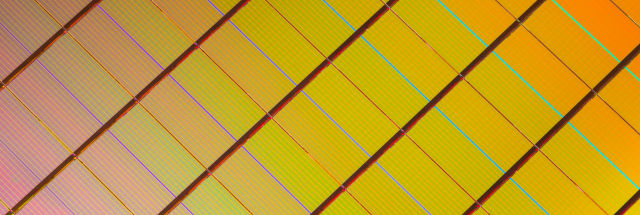

Intel Optane Memory Now Available


Originally posted by tuke81
> Well, latency is down compared to SSDs, and those 4K results are great. But yeah, Optane Memory is quite uninteresting; maybe one could try it in place of the usual tmpfs tweaks, if it fares well against them. But really, I'm just waiting for that third product stack from Intel (Intel® Optane™ Solid State Drives for Consumers).

Maybe it removes or bypasses the proprietary wear-levelling nonsense. Would be nice if we could get raw SSDs without implicit wear-levelling.


Originally posted by edgmnt
> Would be nice if we could get raw SSDs without implicit wear-levelling.

It would save so much on complex firmware, and likely improve performance as well. Currently you've got a mini-OS in the SSD controller (the flash translation layer, or FTL) making it look just like a hard drive, so that it can be accessed by hard-drive-centric generic OS* drivers that implement a hard-drive-centric filesystem on top. Linux already includes filesystems that were designed from the ground up to work directly with the vagaries of SSDs, without the help of such crutches. But we don't get much chance to use them.

*Well, Windows drivers, anyway.
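The "mini-OS" that makes flash look like a hard drive boils down to remapping. A minimal, hypothetical Python sketch of the core idea, out-of-place writes behind a logical-to-physical map, assuming a single flat array of pages and ignoring garbage collection and wear-levelling (`ToyFTL` and all its members are invented for illustration, not any real firmware's design):

```python
from typing import Dict, List, Optional

# Hypothetical toy flash translation layer (FTL), heavily simplified.
# Flash pages cannot be overwritten in place, so each write goes to a fresh
# physical page and the logical-to-physical map is updated; the old page is
# only marked stale. Garbage collection and wear-levelling are not modeled.

class ToyFTL:
    def __init__(self, num_physical_pages: int) -> None:
        self.flash: List[Optional[bytes]] = [None] * num_physical_pages  # page contents
        self.stale: List[bool] = [False] * num_physical_pages  # pages holding outdated data
        self.l2p: Dict[int, int] = {}  # logical block address -> physical page
        self.next_free = 0             # naive append-only page allocator

    def write(self, lba: int, data: bytes) -> None:
        if self.next_free >= len(self.flash):
            raise RuntimeError("out of free pages (garbage collection not modeled)")
        old = self.l2p.get(lba)
        if old is not None:
            self.stale[old] = True     # previous copy becomes garbage
        page = self.next_free
        self.flash[page] = data        # program a fresh, never-written page
        self.l2p[lba] = page           # the host still sees the same LBA
        self.next_free += 1

    def read(self, lba: int) -> Optional[bytes]:
        page = self.l2p.get(lba)
        return None if page is None else self.flash[page]


ftl = ToyFTL(num_physical_pages=8)
ftl.write(0, b"hello")
ftl.write(0, b"world")  # same LBA, different physical page
print(ftl.read(0))      # b'world'
print(ftl.l2p[0])       # 1
print(ftl.stale[:2])    # [True, False]
```

From the OS side, LBA 0 simply changed contents; only the FTL knows the data moved. That hidden remapping is what lets a generic block driver and a hard-drive-centric filesystem run unchanged on flash.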


Originally posted by ldo17
> Linux already includes filesystems that were designed from the ground up to work directly with the vagaries of SSDs, without the help of such crutches.

Well, not exactly. Sure, there are SSD-friendly filesystems which reduce wear, but I don't think they completely replace wear-levelling. As far as I'm aware, there's no block device or filesystem in Linux which tracks wear or attempts to remap worn blocks. Even with something like NILFS, the lack of wear-levelling will eventually result in silent data loss or corruption.


Originally posted by edgmnt
> Well, not exactly. Sure, there are SSD-friendly filesystems which reduce wear, but I don't think they completely replace wear-levelling. As far as I'm aware, there's no block device or filesystem in Linux which tracks wear or attempts to remap worn blocks. Even with something like NILFS, the lack of wear-levelling will eventually result in silent data loss or corruption.

Linux has filesystems suitable for raw flash, like JFFS2 (older) and UBI/UBIFS (newer; UBI is actually a container for filesystems like UBIFS, or for raw data, that still provides bad-block management), which do wear-leveling and can deal with dying or dead flash cells in a chip. They have been in production in embedded devices for a long time.

Raw flash is NOT a block device and has no smarts of its own, since there is no controller: the CPU talks directly to the NAND or NOR chips, programming and erasing flash at specific addresses. So it's the CPU that must run a filesystem that tracks wear on each erase block and can deal with blocks that eventually die and get marked as bad.
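To make that CPU-side bookkeeping concrete, here is a minimal, hypothetical Python sketch of dynamic wear-leveling with bad-block retirement: the allocator always hands out the free, good erase block with the lowest erase count, and a block that fails to erase is retired permanently. The `ENDURANCE` constant, the `RawFlash` class, and the rule that an erase "fails" once endurance is exceeded are assumptions for illustration only; real NAND reports failures through status bits, and JFFS2/UBI do considerably more, such as garbage collection and relocating long-lived data.

```python
from typing import List, Set

# Hypothetical endurance: program/erase cycles before a block wears out.
ENDURANCE = 3

class RawFlash:
    """Toy model of host-managed raw flash (not any real kernel API)."""

    def __init__(self, num_blocks: int) -> None:
        self.erase_count: List[int] = [0] * num_blocks
        self.bad: Set[int] = set()               # blocks retired as bad
        self.free: Set[int] = set(range(num_blocks))

    def _erase(self, block: int) -> bool:
        """Erase a block; return False once it exceeds the assumed endurance."""
        self.erase_count[block] += 1
        return self.erase_count[block] <= ENDURANCE

    def allocate(self) -> int:
        """Hand out the least-worn free block (dynamic wear-leveling)."""
        while self.free:
            # Lowest erase count wins; ties broken by block number.
            block = min(self.free, key=lambda b: (self.erase_count[b], b))
            self.free.remove(block)
            if self._erase(block):
                return block
            self.bad.add(block)                  # erase failed: never reuse it
        raise RuntimeError("no usable blocks left")

    def release(self, block: int) -> None:
        """Return a block whose data is no longer needed."""
        if block not in self.bad:
            self.free.add(block)


flash = RawFlash(num_blocks=2)
for _ in range(6):
    flash.release(flash.allocate())
print(flash.erase_count)              # [3, 3]: wear spread evenly
try:
    flash.allocate()                  # both blocks now exceed ENDURANCE
except RuntimeError as e:
    print(e, sorted(flash.bad))       # no usable blocks left [0, 1]
```

Without the "pick the least-worn block" rule, a naive allocator could keep reusing block 0 until it died while block 1 stayed fresh; spreading erases evenly is what delays that first failure.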

Traditional filesystems for raw flash are probably going to perform like total crap on an FTL-less SSD, as they are not designed for multi-chip environments (most embedded devices have ONE, at most TWO, flash chips).

There is an initiative to support "Open-channel SSDs": dumber NVMe SSDs in which the parts requiring the most smarts are handled by the system's CPU, while the controller deals with lesser, more device-specific tasks.

It was merged in kernel 4.4 as LightNVM: https://www.phoronix.com/scan.php?pa...x-4.4-LightNVM
