Thanks for the reply. It's nice to hear your views.

: )

Depends, in part, on how much further you think CPUs can scale SIMD width. Intel got badly burned by embracing AVX-512 in its 14 nm CPUs; just look at the horrible clock throttling it triggers. My team just got burned by some AVX-512 code in a library we're using, which caused the clock speed of a 14 nm Xeon to drop to 1.2 or 1.3 GHz, when its base clock is 2.1 GHz! And because the AVX-512 code only ran for a small fraction of the time, the speedup it provided wasn't nearly enough to offset the loss in clock speed. Compiling out the AVX-512 code was a pure win for us. After that, the CPU stayed at or above 2.1 GHz (often boosting to 2.4 GHz) and delivered much more throughput on our workload!
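
To see why a small amount of AVX-512 code can still be a net loss, here's a crude back-of-the-envelope model in Python. It assumes, pessimistically, that the core stays at the throttled clock for the whole run and that only the vectorized share of the work gets any speedup. The function names and parameters are made up for illustration, and real frequency-license transitions on Intel parts are more nuanced than this.

```python
def relative_throughput(base_ghz: float, throttled_ghz: float,
                        vec_fraction: float, vec_speedup: float) -> float:
    """Throughput with AVX-512 enabled, relative to a build without it.

    Crude model (an assumption, not a measurement):
      - vec_fraction is the share of runtime (at base clock, without AVX-512)
        spent in code that AVX-512 accelerates by vec_speedup (> 1);
      - with AVX-512 enabled, the core runs at throttled_ghz for the whole run.
    """
    # Time per unit of work without AVX-512, at the base clock.
    t_plain = 1.0 / base_ghz
    # With AVX-512: the vectorized share shrinks, but every cycle is slower.
    t_avx = ((1.0 - vec_fraction) + vec_fraction / vec_speedup) / throttled_ghz
    return t_plain / t_avx


def break_even_fraction(base_ghz: float, throttled_ghz: float,
                        vec_speedup: float) -> float:
    """Share of runtime that must be AVX-512 code just to break even.

    A result above 1.0 means no workload can break even under this model.
    """
    clock_ratio = throttled_ghz / base_ghz
    return (1.0 - clock_ratio) / (1.0 - 1.0 / vec_speedup)


# Clocks from the anecdote above; the 10% share and 2x speedup are hypothetical.
print(f"{relative_throughput(2.1, 1.25, 0.10, 2.0):.2f}x")
print(f"{break_even_fraction(2.1, 1.25, 2.0):.2f} of runtime needed to break even")
```

With those numbers the model prints about 0.63x and 0.81: roughly 80% of the runtime would have to be AVX-512 code at a 2x speedup just to match the unthrottled build.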

At 10 nm, it supposedly throttles a lot less, and is therefore less of a pitfall. But that means we shouldn't expect AVX-1024, or a 1024-bit (or 2x 512-bit) implementation of ARM SVE, in any CPU with a decent clock speed any time soon. Fujitsu's 7 nm A64FX didn't even go that wide, and it clocks at around 2 GHz, which, interestingly enough, is actually GPU territory.

The other question is how much further CPU core counts can meaningfully scale. We're already seeing it become increasingly popular to run them in NUMA mode.

Oh dear. I'd encourage you to spend some quality time looking over the specs of the PS4, PS5, Xbox One (original and X flavors), and Xbox Series X (yeah, MS is unparalleled in its knack for bad/confusing product names). It should make for some interesting reading. You can find a lot of it on Wikipedia, if you're allergic to gamer sites.

To cut to the heart of the matter, the only one of these to use bog-standard DDR memory (in this case, DDR3) was the original Xbox One. However, it compensated by including 32 MB of embedded SRAM (ESRAM) for its GPU, much as Microsoft used embedded DRAM in the Xbox 360. All of the rest of these consoles & iterations used GDDR-type memory, most with a much wider data bus than mainstream desktop PCs employ.
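
The bus-width point is easy to quantify: peak bandwidth is just bus width (in bytes) times transfer rate. Here's a quick sketch using commonly cited configurations; treat them as nominal peaks worth double-checking against the spec sheets, not measured figures. (Series X only gets its full 320-bit width on 10 GB of its RAM.)

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_mtps: int) -> float:
    """Nominal peak bandwidth in GB/s: bytes per transfer * million transfers/s."""
    return bus_width_bits / 8 * data_rate_mtps / 1000


# Commonly cited configurations: (bus width in bits, data rate in MT/s).
configs = {
    "Desktop, dual-channel DDR4-3200 (128-bit)": (128, 3200),
    "Xbox One, DDR3-2133 (256-bit)": (256, 2133),
    "PS4, GDDR5 @ 5.5 Gbps (256-bit)": (256, 5500),
    "PS5, GDDR6 @ 14 Gbps (256-bit)": (256, 14000),
    "Xbox Series X, GDDR6 @ 14 Gbps (320-bit)": (320, 14000),
}

for name, (width, rate) in configs.items():
    print(f"{name}: {peak_bandwidth_gbs(width, rate):.1f} GB/s")
```

That works out to roughly 51 GB/s for the desktop versus 176 to 560 GB/s for the GDDR-based consoles.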

Thanks for the data point. Is that worst-case? What sort of filtering & texture format are you assuming?

Are you talking about AVX2 gather instructions? I guess you mean coalescing of the memory operations?
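
Conceptually, a gather is just a per-lane indexed load, and how well it performs depends heavily on how many distinct cache lines the lanes hit. Here's a scalar Python model of both ideas, assuming 32-bit elements and 64-byte cache lines (AVX2's `vpgatherdd` on a 256-bit register has eight 32-bit lanes). The helper names are hypothetical, and whether real hardware merges lanes that share a line is microarchitecture-specific.

```python
def gather(table, indices):
    """Scalar model of a SIMD gather: lane i loads table[indices[i]]."""
    return [table[i] for i in indices]


def cache_lines_touched(indices, elem_bytes=4, line_bytes=64):
    """Number of distinct cache lines the gather's lanes fall into.

    Lanes that share a line could, in principle, be served by one access
    ("coalesced"); fewer distinct lines means less memory traffic.
    """
    return len({(i * elem_bytes) // line_bytes for i in indices})


# Eight 32-bit lanes, as in a 256-bit AVX2 gather.
contiguous = list(range(8))                # 32 bytes total: one line
strided = [i * 16 for i in range(8)]       # 64 bytes apart: a line per lane
print(cache_lines_touched(contiguous), cache_lines_touched(strided))
```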

Sounds fun, though. I recently vectorized some area-sampling code, which was a hoot! ...once I'd finally worked out a good approach (no scatter/gather!).
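
As an illustration of gather-free area sampling, here's a NumPy sketch. It's not necessarily the approach described above, and it's limited to integer downscale factors: reshaping exposes each f x f block as two extra axes, so the averaging becomes a plain reduction over regularly strided memory, with no per-pixel index tables.

```python
import numpy as np


def area_downsample(img: np.ndarray, f: int) -> np.ndarray:
    """Area (box) downsample a 2D image by an integer factor f.

    Each output pixel is the mean of an f x f block of input pixels.
    Rows/columns beyond the last full block are cropped.
    """
    h, w = img.shape
    h, w = h - h % f, w - w % f  # crop to a multiple of f
    # Shape (out_rows, f, out_cols, f): axes 1 and 3 index within a block.
    blocks = img[:h, :w].reshape(h // f, f, w // f, f)
    return blocks.mean(axis=(1, 3))


img = np.arange(16, dtype=float).reshape(4, 4)
print(area_downsample(img, 2))
```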

A long time ago, I learned that jobs usually don't pay enough for this sort of thing. You've got to just do it for the love of the work, sometimes. I like to think of it in terms of craftsmanship.

SMT is more energy-efficient, if not also more area-efficient. The main reason CPUs don't do more of it is probably the lack of concurrency in their workloads, along with the heavy emphasis on single-thread performance.

...because they're designed for server workloads. However, I question whether that's a good move for high core-count CPUs, since integer benchmarks (which benefit most from SMT) seem to show the benefit of SMT on both Intel and AMD tapering off as core counts continue to rise. And SMT is basically a wash for floating-point performance on recent CPUs. Maybe not if you're doing lots of random memory accesses, though.

It'll be interesting to see if ARM utilizes SMT in their own server core designs. So far, their server chips seem to be fairly competitive without it, and that's also with a few-years-old micro-architecture (Neoverse N1 is just an amped-up A76 core).

That's dangerous thinking. AFAIK, the CPU can't optimize away those spills (though Zen 2 can short-circuit the reload), since I don't think it can rule out that the spilled value might be used to communicate with another thread, or be read again later (unless it sees that stack slot get overwritten inside the reorder window?). All of that burns power. Touching cache burns power. Spilling adds contention on the memory ports, which can block other operations. No matter how much you can optimize a spill, it's never as good as not having to spill in the first place.

Huh? I'm sure GPUs use a stack, in some form and to some degree.

GPUs use SMT because it's simply a more efficient way of hiding latency than out-of-order execution, speculation, and prediction. If your workload has enough concurrency, then SMT lets you build simple in-order cores, which you can then scale up in greater numbers. It's a simple formula. Of the big three GPU vendors, Intel is straying from it the most.
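
The latency-hiding argument can be made concrete with Little's law. This is a sketch under a deliberately simple, hypothetical model: each hardware thread does `work_cycles` of issue-slot work, then stalls for `latency_cycles` on a memory access, and an in-order core simply switches to another ready thread (no out-of-order execution at all).

```python
import math


def threads_to_hide_latency(latency_cycles: int, work_cycles: int) -> int:
    """Hardware threads an in-order core needs to keep its issue slots full.

    Each thread is busy for work_cycles out of every
    (work_cycles + latency_cycles), so N threads cover the stall once
    N * work_cycles >= work_cycles + latency_cycles.
    """
    return math.ceil((work_cycles + latency_cycles) / work_cycles)


def utilization(threads: int, latency_cycles: int, work_cycles: int) -> float:
    """Fraction of cycles the core issues something, under the same model."""
    return min(1.0, threads * work_cycles / (work_cycles + latency_cycles))


# Hypothetical numbers: a 400-cycle memory stall after every 20 cycles of work.
print(threads_to_hide_latency(400, 20))   # 21
print(f"{utilization(4, 400, 20):.0%}")   # 19%
```

Under this model, a core needs on the order of 20 resident threads to stay busy, which is why GPUs keep many warps/wavefronts in flight, while a 2-way SMT CPU core has to lean on caches, prefetching, and out-of-order execution instead.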

Well, GPUs have a lot of leverage over us poor graphics programmers! Because we're addicted to the performance, we have to jump through whatever hoops they place in our way!

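I think memory consistency hurts CPUs in the following ways:

- constrains instruction re-ordering

- forces more memory bus transactions, which has cache-coherency overhead and burns additional power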

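For a concrete feel for how a memory model constrains reordering, here's the classic store-buffering litmus test, modeled in Python. It's a toy sketch, not a real memory-model checker: it enumerates every sequentially consistent (SC) interleaving, then hand-runs one execution that a store buffer makes possible. x86's TSO model permits that store-then-load reordering, and code that needs the SC result has to pay for a fence such as `MFENCE`; weaker models permit even more reordering.

```python
from itertools import combinations

# Store-buffering (SB) litmus test; x and y both start at 0.
#   Thread 0:  x = 1;  r1 = y
#   Thread 1:  y = 1;  r2 = x
T0 = [("store", "x"), ("load", "y", "r1")]
T1 = [("store", "y"), ("load", "x", "r2")]


def sc_outcomes() -> set:
    """Every (r1, r2) result allowed by sequential consistency.

    Under SC, some single interleaving of both threads' operations, with each
    thread's program order preserved, must explain the result.
    """
    results = set()
    n = len(T0) + len(T1)
    # Choose which global time slots Thread 0's operations occupy.
    for t0_slots in combinations(range(n), len(T0)):
        it0, it1 = iter(T0), iter(T1)
        schedule = [next(it0) if i in t0_slots else next(it1) for i in range(n)]
        mem, regs = {"x": 0, "y": 0}, {}
        for op in schedule:
            if op[0] == "store":
                mem[op[1]] = 1
            else:
                regs[op[2]] = mem[op[1]]
        results.add((regs["r1"], regs["r2"]))
    return results


def tso_store_buffer_run() -> tuple:
    """One execution that x86-TSO permits but SC forbids.

    Each store lands in its thread's private store buffer; a load checks its
    own buffer first, then memory. Both loads run before either buffer drains.
    """
    mem = {"x": 0, "y": 0}
    buf0, buf1 = {"x": 1}, {"y": 1}   # buffered stores, not yet globally visible
    r1 = buf0.get("y", mem["y"])      # Thread 0 reads y from memory: 0
    r2 = buf1.get("x", mem["x"])      # Thread 1 reads x from memory: 0
    mem.update(buf0)                  # the buffers drain afterwards
    mem.update(buf1)
    return r1, r2


print(sorted(sc_outcomes()))   # (0, 0) never appears
print(tso_store_buffer_run())  # (0, 0)
```
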
GPUs have another advantage, which is the ability to make arbitrary, incompatible changes to their ISA! This means you don't need an internal micro-op format, further shortening pipelines and simplifying their cores.

CPUs could do this, and some have even tried. Probably the latest example is Nvidia's Denver cores in their Tegra X2 SoC.

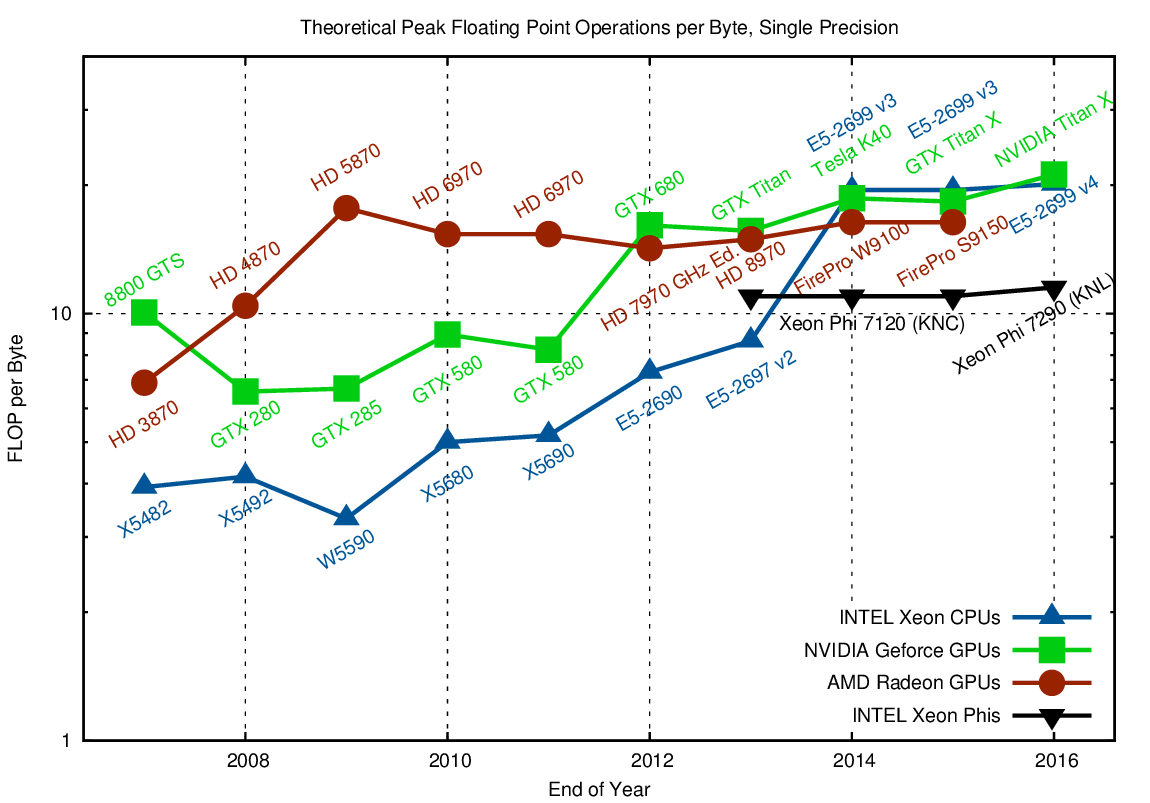

Not sure about that. I need to look for something more current, but here's some food for thought:

He did a nice comparison of GPUs, but that's even more out-of-date:

I'm not. I'm trying to think openly about the benefits and drawbacks of each architectural approach, as well as the best kind of architecture for each class of problem.

Computer architecture has been a casual interest of mine since I started reading about supercomputers as a kid. That was before anyone coined the term "GPU", but SGIs & OpenGL were all the rage. I got my first job writing graphics code for floating-point DSPs connected in a mesh. "SIMD" meant one of them actually broadcast an instruction stream over a special bus, and all of the other DSPs literally ran in lockstep!

: )
