GeForce GTX 1080 Pascal Cards Go Up For Sale Today

> Originally posted by atomsymbol:
>
> I would like to ask about 4K resolutions: Can the human eye recognize some differences in the rendered image in today's most advanced games (irrespective of OS) when comparing 2160p with 4xMSAA 1080p?

Totally agree with blackout23 here, the advantage is absolutely noticeable.

But speaking of games, it has an enormous impact on performance, of course, as does 4xMSAA, btw. Thus, in really modern games, multi- or supersampling is no longer common. They use temporal AA methods, essentially combining post-processing AA (blurring) with motion blur and maybe MSAA with a small factor (2x?). These techniques are really improving and I am really stunned by the TAA that Crytek used in Ryse, for example. It has close to zero performance impact (~2 FPS for me) and looks great, smoothing out the picture without blurring too much or losing detail. More good examples are Star Wars Battlefront or Uncharted 4. This really helps consoles a lot when they cannot manage native 1080p, so the same obviously applies to upscaling to higher resolutions.

If the game is not that demanding, you could still use the native resolution or even more with downsampling (basically supersampling), of course.

> Originally posted by eydee:
>
> Resolution by itself is nothing. Add screen size and *then* it matters. Get a 14" CRT from 1990 and 640x480 looks beautiful. Get a giant flat screen today and you can see the pixels of 1080p from 2 meters. 4k makes a lot of sense, and it makes a big difference, but only if you have a big screen, where you actually need the high pixel density.

Gosh, stop spreading FUD, please. 640x480 pixels on 14" is a resolution of 57 pixels per inch (ppi) and that looks really awful.

Also, obviously you can't really get anything done with this res today...
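For anyone who wants to check the 57 ppi figure, here is a minimal sketch of the arithmetic. It assumes the full nominal 14" diagonal is viewable (on a real CRT the visible area is a bit smaller, so the true density would be slightly higher). The function name and the 24" comparison panel are made up for illustration.

```python
import math

def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density from a resolution and a screen diagonal in inches."""
    # Number of pixels along the diagonal, via Pythagoras.
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in

# 640x480 on a nominal 14" diagonal (assumes the whole diagonal is visible).
print(round(pixels_per_inch(640, 480, 14)))    # 57

# Hypothetical 24" 1080p flat panel for comparison.
print(round(pixels_per_inch(1920, 1080, 24)))  # 92
```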

> Originally posted by atomsymbol:
>
> That is true.

No, it isn't!

> Originally posted by torsionbar28:
>
> Yeah scaling is faster than native rendering. But it also doesn't gain you anything. A high quality scaler is essentially a 2D version of anti-aliasing. Having more pixels only makes for a better picture if you've got real data to fill them.

Agree mostly on that, but also have to add something.

It depends on the distance from your eyes to the panel (and your eyes' capabilities, of course). When you have a higher resolution, the gaps between the pixels themselves are smaller, and that reduces the screen door effect. You might not have noticed it at all on your previous monitor, but you will still see the difference with an upgrade. Considering that, a 1080p picture on a 2160p screen might in fact look better than on a 1080p screen with the same pixel density and comparable panel quality.

Last edited by juno; 28 May 2016, 05:44 AM.

-

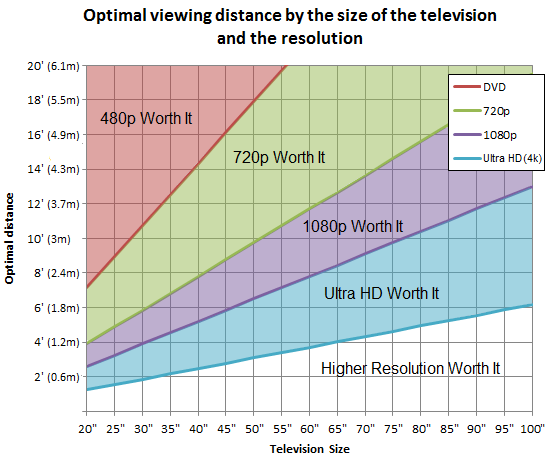

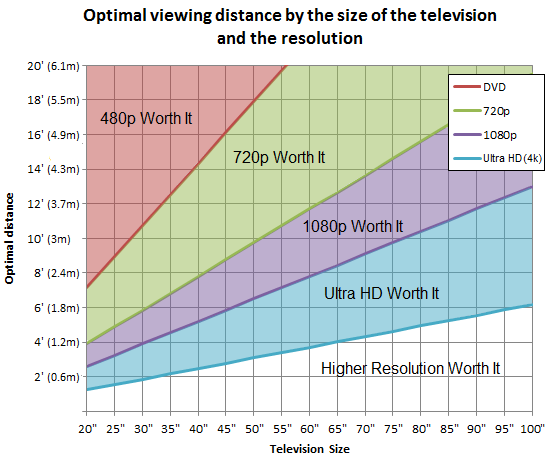

This has been discussed to death in the home theater world and there seems to be general agreement these days on the tradeoffs.

Screen size and viewing distance obviously matter, and the short viewing distances of a typical computer display mean that you can benefit from higher resolution in most cases.

Typical chart:

Last edited by bridgman; 28 May 2016, 07:48 AM.

Test signature

-

Yes, I do, but so what? It does not have even remotely anything to do with "looking beautiful". I'm not hating. I understand the point you made, but this example was just off. If you exaggerated to make your point clear, I did not get that and I'm sorry. If not, I don't really get your reaction now.

-

> Originally posted by eydee:
>
> CRT doesn't have fixed pixel size/position and you know that. Stop hating for the sake of hating.

Huh? Are you saying resolution on CRTs doesn't matter, just because the reconstruction filter is roughly gaussian instead of a 2D boxcar? That's obviously nonsense. Sure, video & properly rendered CG benefits from this, but CG BITD was rendered cheaply and didn't gain much from it.

As for Zan Lynx' point, the shadow mask only really becomes an issue as the displayed resolution approaches that of the dot pitch. Otherwise, you can pretty much disregard it. I once had a cheap 21" monitor with a coarse shadow mask. I could see the dots with a monochrome font (esp. green on black), but it generally wasn't an issue as long as the convergence was good.

bridgman's post is on point, and whatever kind of pixel reconstruction you use doesn't much change things. I'd rather view properly mastered 1080p content on a 4k flat panel w/ decent upscaling than a 1080p CRT, any day. And I'm typing this on a CRT (Sony GDM-FW900 clone), so there.

-

Both pixels and pixels per inch are poor measures anyway. What you need is pixels per angle of view. You can get that by dividing pixels by the angular field of view occupied by the screen, or, as a small-angle approximation, by multiplying ppi by viewing distance (which gives pixels per radian).

bridgman's graph suggests you need approx 4000 pixels per 1 meter of screen, viewed at 1 meter. Go check your setup to see where you stand.
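To check your own setup, a rough sketch of both ways of getting pixels per angle is below. The function names are made up for illustration, and the 60 pixels per degree reference (one pixel per arcminute, the usual figure quoted for 20/20 vision) is an assumption used for comparison, not something read off the chart.

```python
import math

def pixels_per_degree(pixels_across, screen_width, viewing_distance):
    """Average pixels per degree over the whole screen width.

    screen_width and viewing_distance must be in the same unit.
    """
    # Full horizontal field of view occupied by the screen, in degrees.
    fov_deg = math.degrees(2 * math.atan(screen_width / (2 * viewing_distance)))
    return pixels_across / fov_deg

def pixels_per_degree_small_angle(density, viewing_distance):
    """Small-angle version: density (pixels per unit length) times distance
    gives pixels per radian, which is then converted to pixels per degree."""
    return density * viewing_distance * math.pi / 180

# 4000 pixels per meter of screen, viewed from 1 meter.
print(round(pixels_per_degree_small_angle(4000, 1.0), 1))  # 69.8
print(round(pixels_per_degree(4000, 1.0, 1.0), 1))         # 75.3
```

The two numbers differ because the first describes the center of the screen while the second averages over a wide field of view, where the edges are seen at an angle. Either way the result lands somewhat above the assumed 60 pixels per degree.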

-

Just placed an order on the GTX 1080 as the card I was told last week I would have from NVIDIA apparently didn't pan out...

Michael Larabel

https://www.michaellarabel.com/

-

> Originally posted by bridgman:
>
> This has been discussed to death in the home theater world and there seems to be general agreement these days on the tradeoffs.
>
> Screen size and viewing distance obviously matter, and the short viewing distances of a typical computer display mean that you can benefit from higher resolution in most cases.
>
> Typical chart:

That chart is based around 20/20 vision. If you have 20/10 vision, double the distance, so 8' with a 40" screen would make Ultra HD worth it.
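The "double the distance" rule can be sketched as follows. This assumes 20/20 vision resolves about 1 arcminute and 20/10 about 0.5 arcminute, and a 16:9 panel. The function name is hypothetical, and the absolute distances it returns depend on the threshold chosen, so they need not match the chart exactly.

```python
import math

def max_useful_distance(diagonal_in, horizontal_px, acuity_arcmin=1.0, aspect=(16, 9)):
    """Distance in inches at which one pixel subtends `acuity_arcmin` arcminutes.

    Beyond this distance, individual pixels of this resolution can no longer
    be resolved by an eye with the given acuity, so a higher resolution
    would not be visible.
    """
    w, h = aspect
    width_in = diagonal_in * w / math.hypot(w, h)  # physical screen width
    pitch_in = width_in / horizontal_px            # size of one pixel
    return pitch_in / math.tan(math.radians(acuity_arcmin / 60))

# 40" 1080p screen: inside this distance, more than 1080p starts to pay off.
d_2020 = max_useful_distance(40, 1920, acuity_arcmin=1.0)
d_2010 = max_useful_distance(40, 1920, acuity_arcmin=0.5)

print(d_2010 / d_2020)  # very close to 2: halving the angle doubles the distance
```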
