Originally posted by arQon


And the others who download the video had the same bandwidth problem: it takes forever to get it.

AV1 has a solution to all these problems, and it is a server-side/back-end one: if you make an 8K video in AV1, the server can use that file to send 8K to one customer, and it can use the same file to send 5K, 4K, 2K, 1K, 0.5K or even 480p to others.

People who get the video could watch it nearly instantly without downloading the 8K data; instead they get what their bandwidth can handle, maybe only 480p or 2K.

H.264/H.265 and so on are a failure from this perspective: if you have a 4K file you can only send 4K, or you need to re-encode it at a smaller resolution, which gives you a new file that you can then send. AV1 does this without re-encoding at a lower resolution.

"Because roughly 0% of devices can play AV1 efficiently"

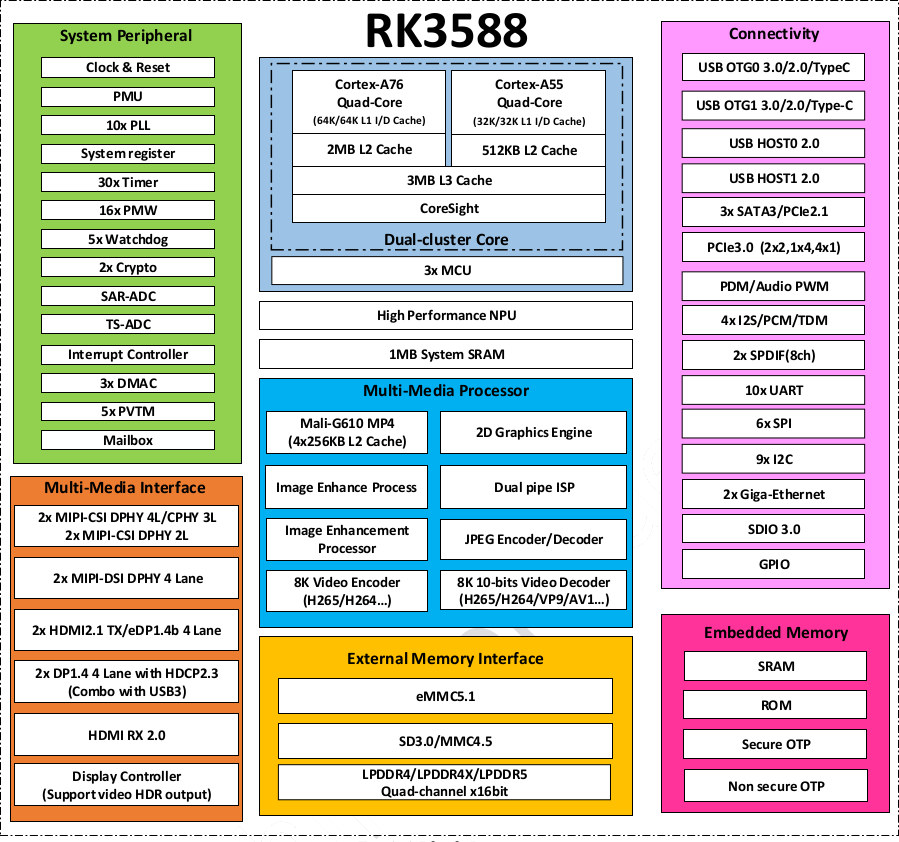

If you have a smart TV this does not matter at all, as long as the ARM SoC is fast enough to decode it in real time.

Efficiency only matters on battery-powered devices like smartphones. But on those devices, low-resolution AV1 such as 480p mostly gives you the quality you need for a small screen, and a higher resolution would not look any better because the display is small.

The devices that need efficiency because of battery life are mostly the same devices that have bandwidth problems.

Just think about this scenario: someone makes a video and wants to stream it in real time to many people.

Your H.264/H.265 is not real-time streaming; instead you make the video, then you upload it, then another person downloads it.

But if you stream in real time, AV1 is superior. Why? One person has a bandwidth problem and automatically gets a lower-resolution stream from exactly the same source file; another person has high bandwidth and gets a higher-resolution version out of the same file. And maybe you use a P2P architecture instead of a server-client architecture, and maybe your bandwidth hits a limit for that P2P architecture; then AV1 is your lifesaver, because everyone automatically gets a lower resolution out of the same source file.
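To make the "everyone gets what their bandwidth can handle" idea concrete, here is a rough sketch of the selection step. It is not how any real AV1 server or player is implemented; the `LAYERS` table, its bandwidth thresholds and the `pick_layer` name are all made up for illustration.

```python
# Hypothetical table of resolutions that could be served from one source,
# sorted from lowest to highest. The Mbit/s thresholds are placeholders,
# not measurements of real AV1 streams.
LAYERS = [
    ("480p", 1.0),
    ("1K", 3.0),
    ("2K", 6.0),
    ("4K", 15.0),
    ("5K", 25.0),
    ("8K", 50.0),
]


def pick_layer(bandwidth_mbps, layers=LAYERS):
    """Return the highest resolution whose threshold fits the bandwidth.

    Falls back to the lowest resolution when even that one does not fit.
    """
    best = layers[0][0]
    for label, needed_mbps in layers:
        if needed_mbps <= bandwidth_mbps:
            best = label
    return best


# Three viewers with different connections, all served from the same source.
for viewer_bandwidth in (0.5, 8.0, 100.0):
    print(viewer_bandwidth, "Mbit/s ->", pick_layer(viewer_bandwidth))
```

The slow viewer falls back to the lowest entry in the table, and the fast one gets the top entry, without the source being touched.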

H.264/H.265 is made in a way that lets you stream your video, but AV1 was made for streaming in the first place.

With H.264/H.265, if you want to stream 8K, 5K, 4K, 2K, 1K and 480p, you need 6 files and have to re-encode it 6 times.

With AV1 you only need the 8K file. This saves a lot of space on the server too.

Companies like Google or Apple could start to avoid paying patent fees for their smartphones, and if someone needs H.264 it gets encoded on the server side.
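The storage argument is just arithmetic, so here is a small sketch of it. The sizes in `RENDITION_GB` are invented placeholders, not real encodes, and the single-file case takes the claim above at face value by counting only the 8K file; a real layered stream carries some overhead that this ignores.

```python
# Hypothetical file sizes in GB for one title at each resolution.
# These are placeholders for illustration only.
RENDITION_GB = {
    "8K": 40.0,
    "5K": 20.0,
    "4K": 12.0,
    "2K": 5.0,
    "1K": 2.0,
    "480p": 0.5,
}


def separate_files_total(sizes):
    """Storage when every resolution is kept as its own encoded file."""
    return sum(sizes.values())


def single_file_total(sizes):
    """Storage when only the largest (top-resolution) file is kept."""
    return max(sizes.values())


separate = separate_files_total(RENDITION_GB)
single = single_file_total(RENDITION_GB)
print("files to encode and store separately:", len(RENDITION_GB))
print("separate files:", separate, "GB")
print("single file:", single, "GB")
print("saved:", separate - single, "GB")
```

With these made-up numbers the six separate files add up to roughly twice the size of the 8K file alone; the real ratio depends entirely on the actual encodes.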
