Originally posted by pinguinpc

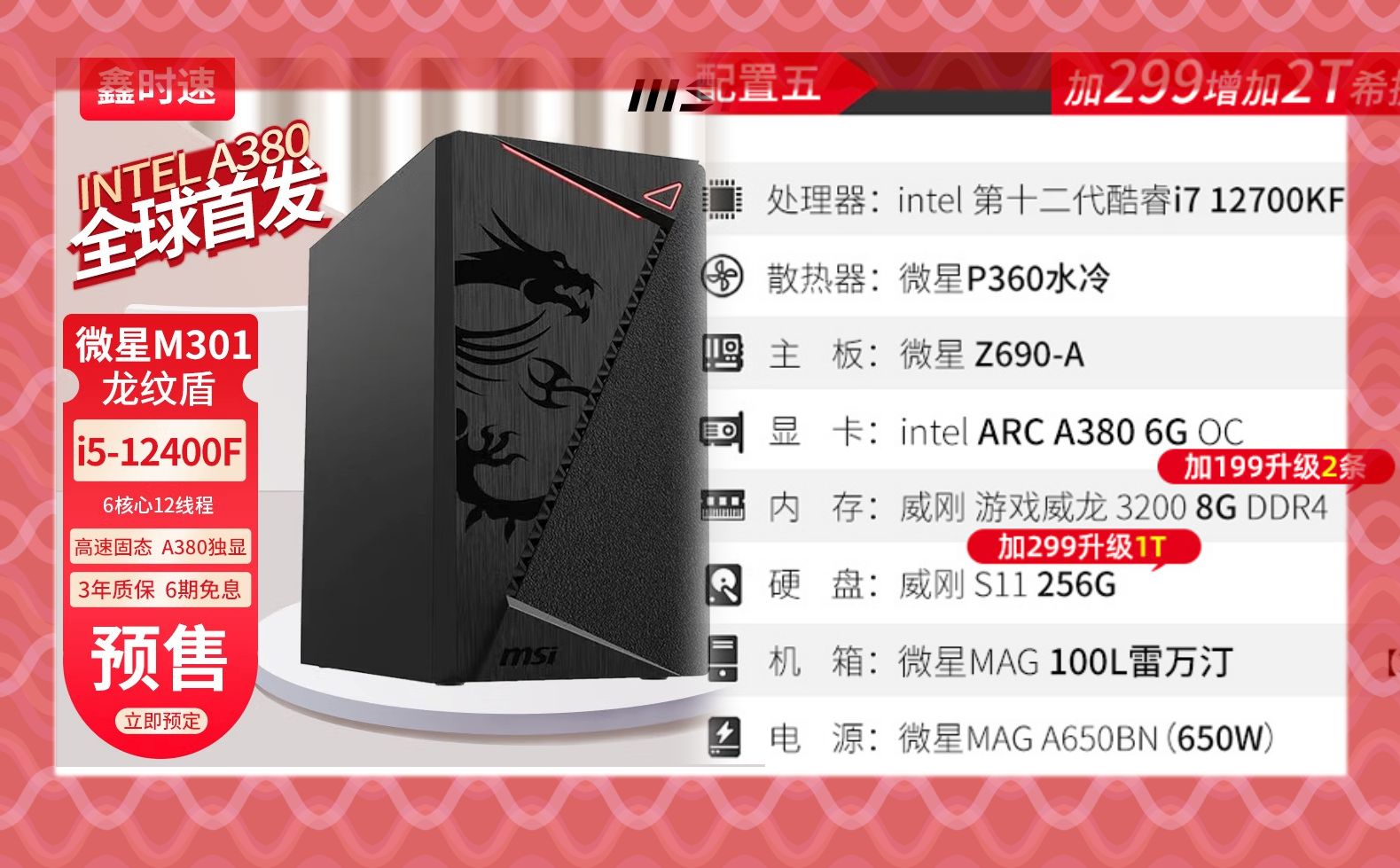

I wanted to buy an RX 6500 XT, but then I saw that AMD cut AV1 decoding from it. Now my plan is to buy the Arc A380 once it's available here in Germany.