Originally posted by atomsymbol

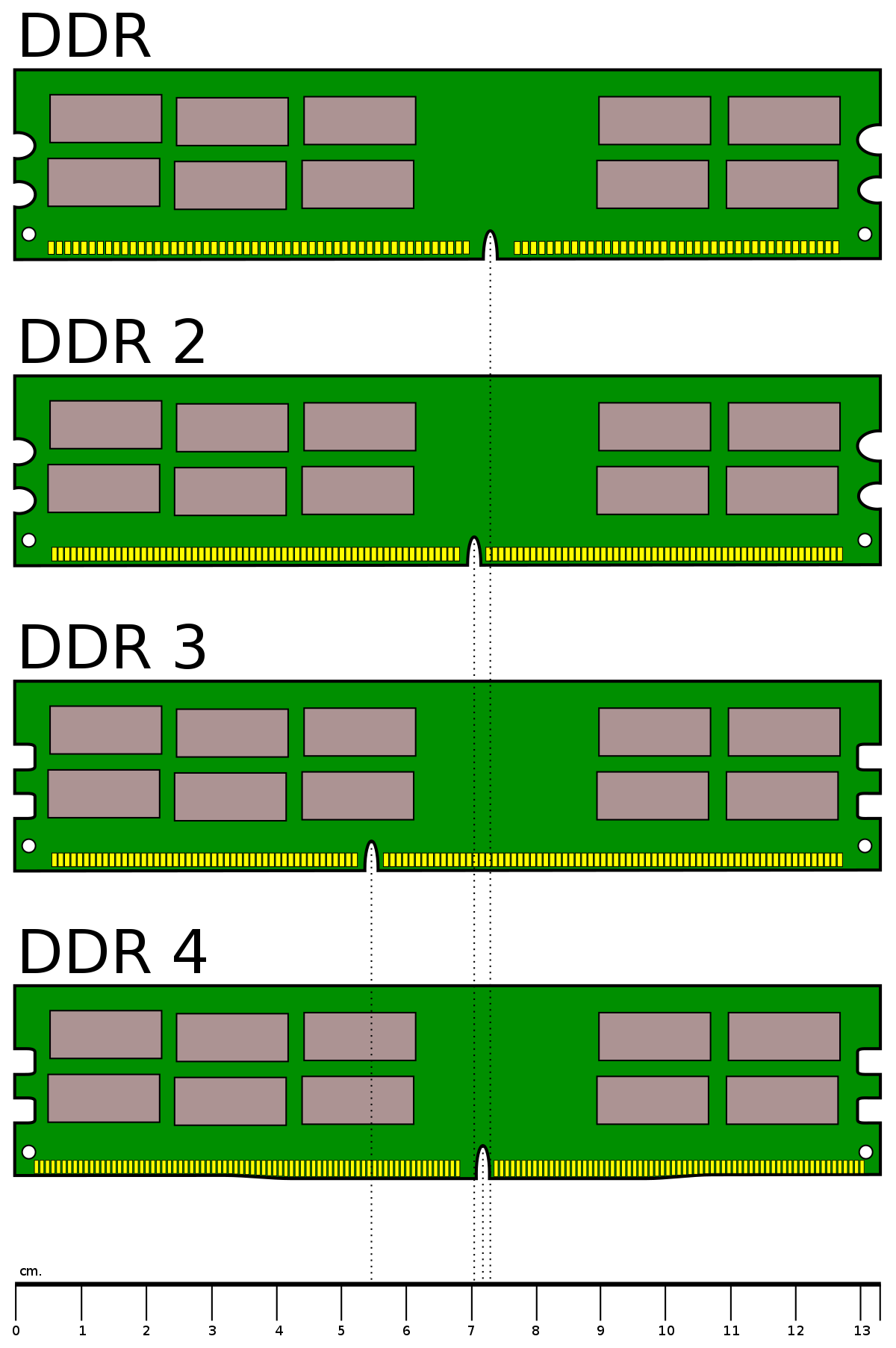

In multi-CPU (multi-socket) servers, each CPU is directly attached to only half, or even a quarter, of the total server RAM. Naturally, threads running on a given CPU would much rather have their memory allocated in that CPU's own RAM banks. They can then use the local memory controller directly, instead of sending every read and write to another CPU over the CPU interconnect bus.
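To put rough numbers on this, here is a back-of-the-envelope model. It assumes a thread's pages are spread evenly over all nodes (no locality-aware placement), so with N CPUs only 1/N of its accesses are local: half of them go remote on a 2-socket box, three quarters on a 4-socket box. The latency figures are made-up placeholders for illustration, not measurements of any real hardware.

```python
def remote_fraction(num_nodes: int) -> float:
    """Fraction of accesses that hit another CPU's RAM when a thread's pages
    are spread evenly over all nodes (i.e. no locality-aware placement)."""
    if num_nodes < 1:
        raise ValueError("num_nodes must be >= 1")
    return 1.0 - 1.0 / num_nodes


def expected_latency_ns(local_ns: float, remote_ns: float, remote_frac: float) -> float:
    """Weighted average latency: local accesses use the CPU's own memory
    controller, remote ones cross the CPU interconnect."""
    return (1.0 - remote_frac) * local_ns + remote_frac * remote_ns


# Hypothetical latencies, chosen only for illustration (not measured values).
LOCAL_NS = 100.0
REMOTE_NS = 150.0

if __name__ == "__main__":
    for nodes in (1, 2, 4):
        f = remote_fraction(nodes)
        spread = expected_latency_ns(LOCAL_NS, REMOTE_NS, f)
        local = expected_latency_ns(LOCAL_NS, REMOTE_NS, 0.0)
        print(f"{nodes} CPU(s): remote share={f:.2f}, "
              f"spread={spread:.1f} ns, all-local={local:.1f} ns")
```

With these placeholder numbers the evenly spread case averages 125.0 ns on two CPUs and 137.5 ns on four, while keeping every page local stays at 100.0 ns regardless of socket count. The gap grows with the number of CPUs because the local share shrinks.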
