Originally posted by coder


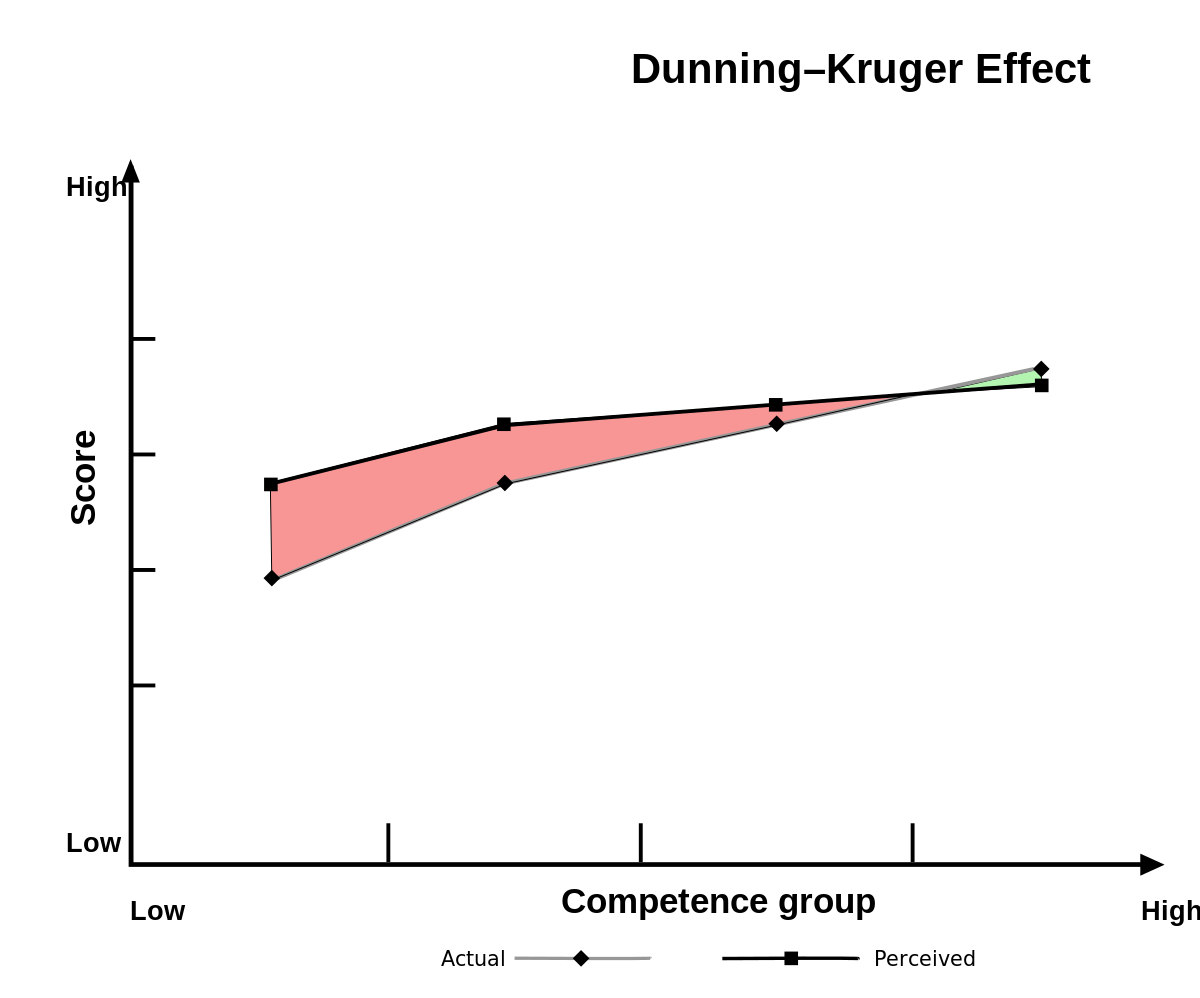

He has complete Dunning-Kruger syndrome...

I confronted him with historical facts like 1000 times, but he is completely resistant to them.

It's really sad that, from my point of view, these kinds of people nearly dominate internet forums all around the world.

And most of the time, the real experts have no time to confront these people with real answers and push real facts.
