Originally posted by Dukenukemx

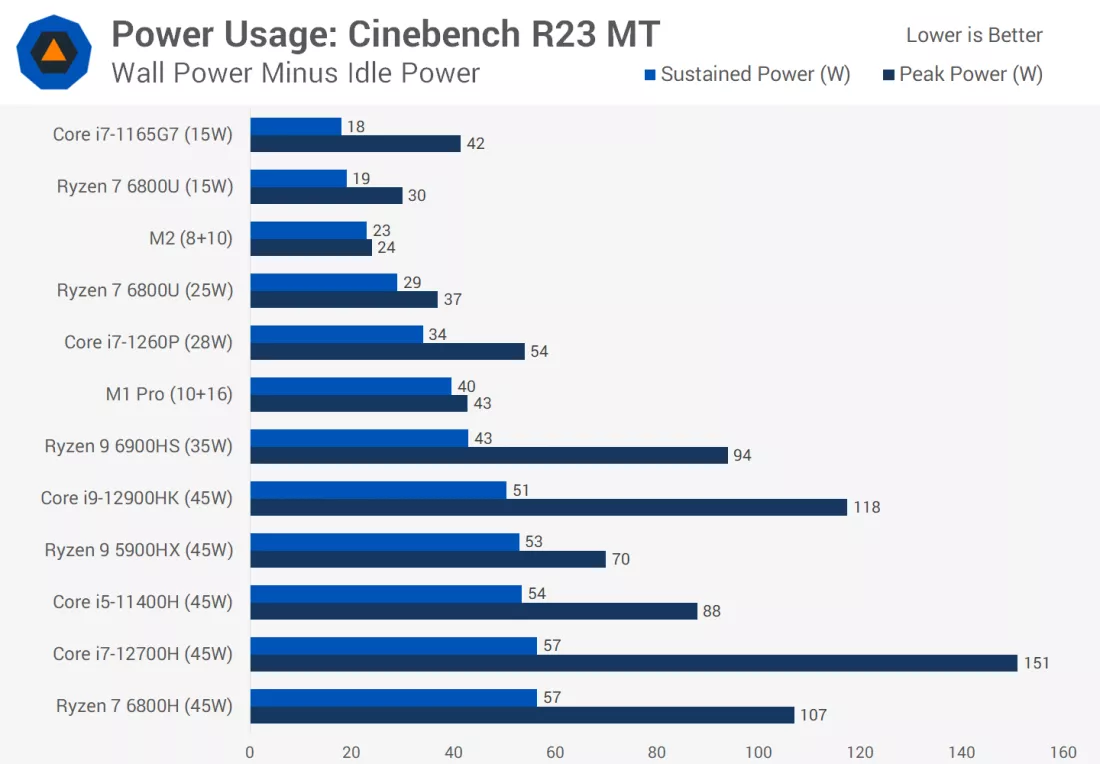

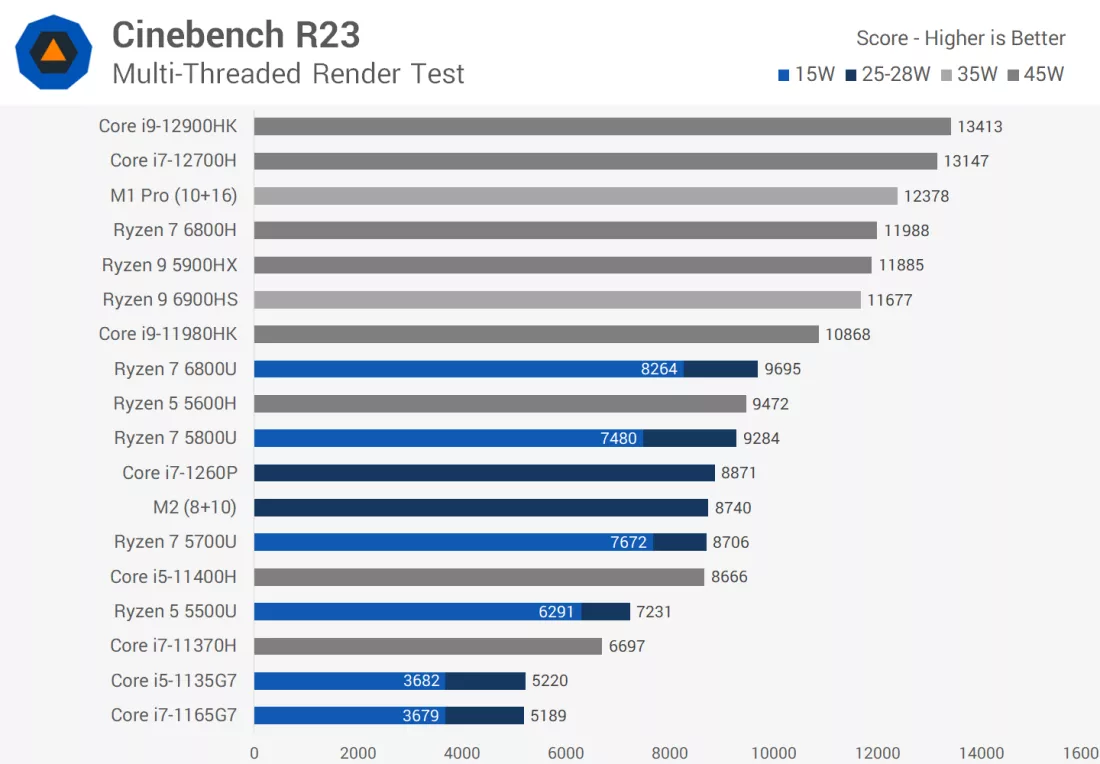

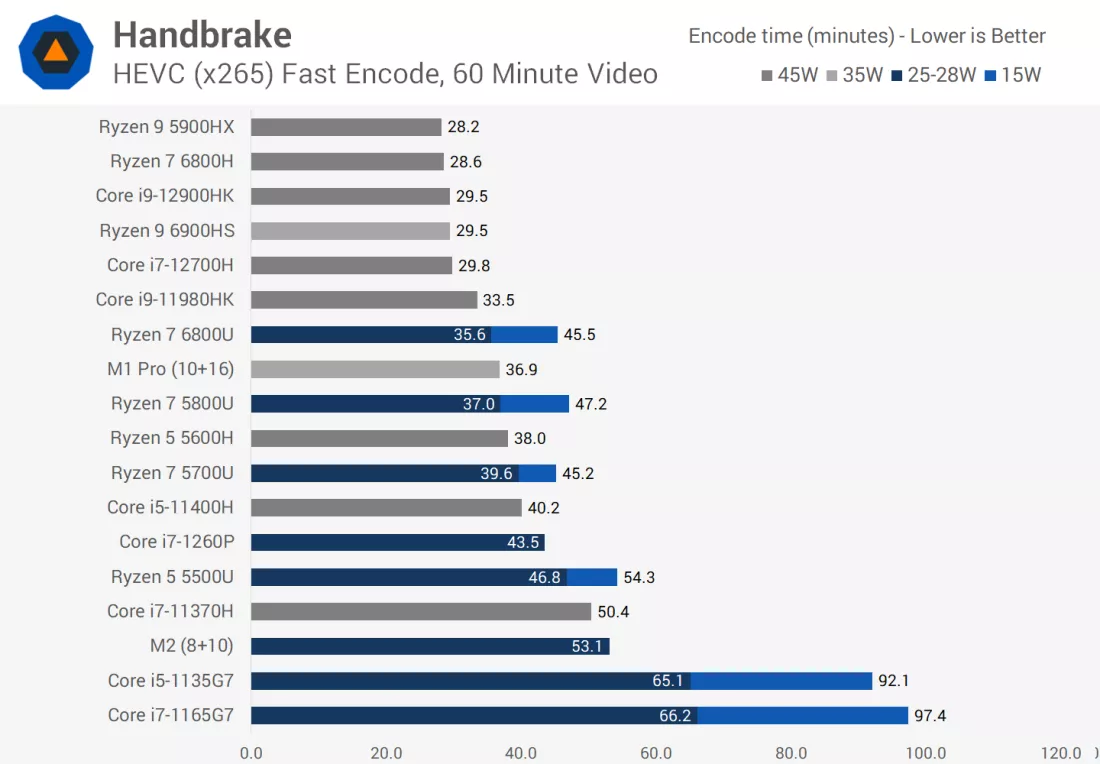

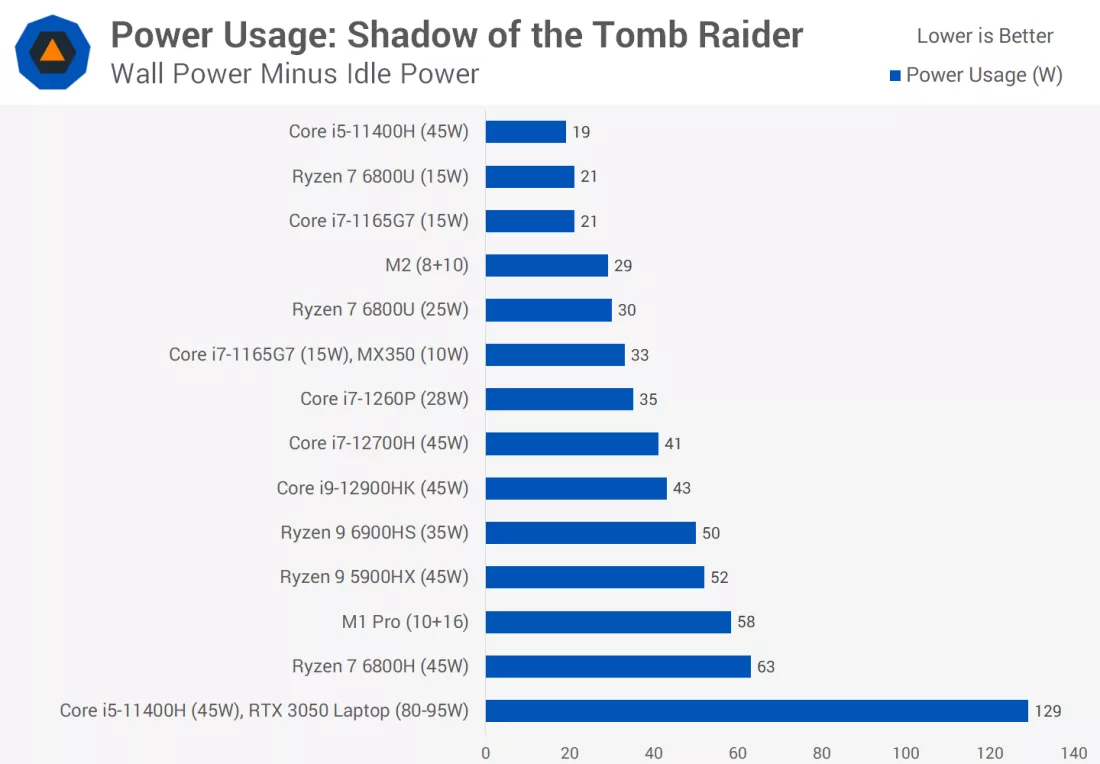

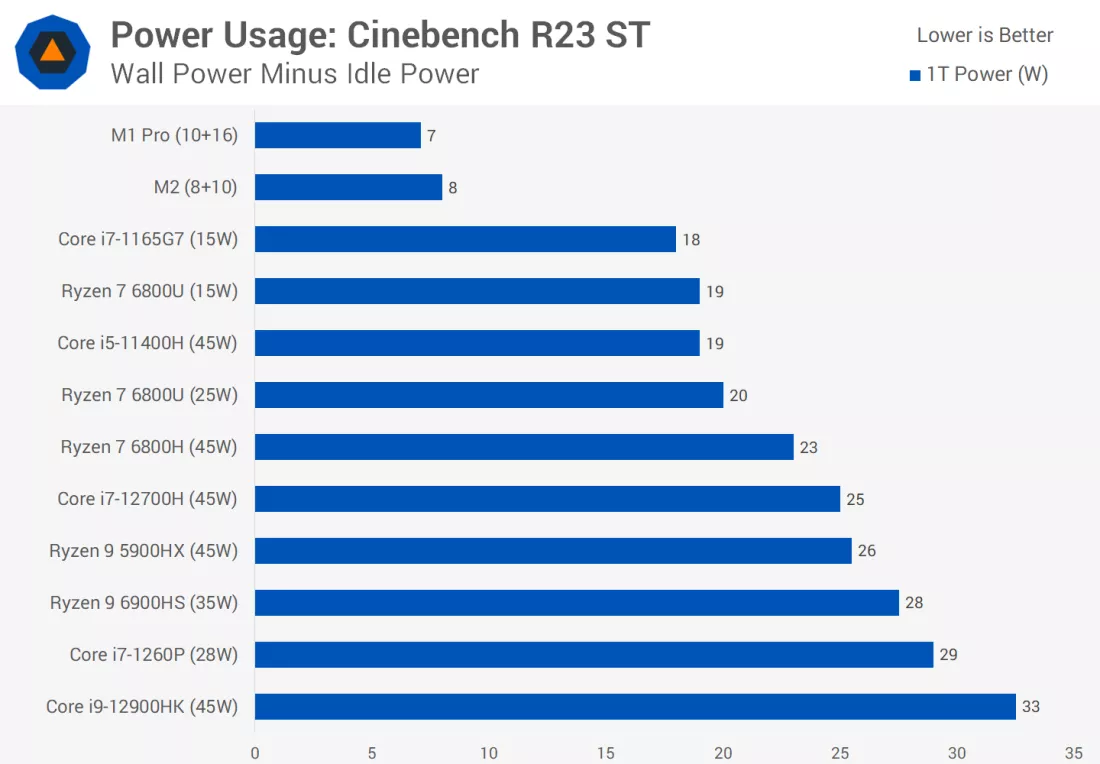

The test there shows an M2 MBP, which has a fan, and the comparison against the 6800U is quite revealing. Configured for a 15 W TDP, the 6800U consumes about as much power as the M2 MBP, but its performance lags behind. Boosted to a 25 W TDP, its performance is mostly on par, but it draws much more power, peaking at 37 W. It also seems that an actively cooled M2 can keep running at full throttle all day long; that's something x86 chips haven't been capable of for years unless you put a heatsink from a nuclear reactor on top of them.
