Originally posted by qarium

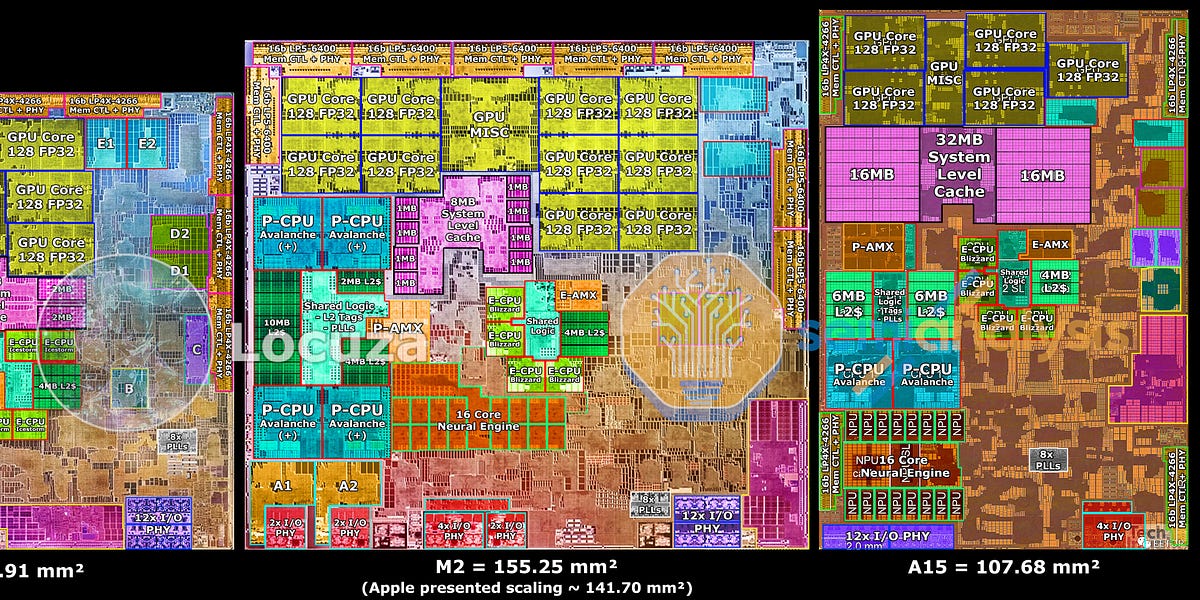

In my opinion, the benefit of ARM over x86 is that ARM general-purpose CPU cores save about 5% of the transistors. (I am not talking about the ASIC parts here, only the general-purpose CPU parts.)

Now you will ask: don't those 5% of transistors consume power? And then you will say there is proof that they don't... Right, I am talking about dead transistors that do nothing but are still there for legacy compatibility reasons.

Intel or AMD could do the same: just cut out the MMX and x87 floating-point units, SSE1 and even SSE2, and make an SSE3/AVX-only CPU... It looks like ARM is simply faster at dropping legacy features to save transistors.

Right... but Apple did not license AMD GPU tech like Intel did. Instead, they licensed Imagination Technologies' PowerVR tile-based rasterization, because it uses less energy than all the other approaches.

Yes, right, it is a wrapper, but a simple one compared to the OpenGL vs. DirectX 11 case.

And your second point is wrong: you claim you cannot use Metal code for an open standard.

That is just plain wrong, because the Metal code is the same as in WebGPU.

Yes, this sounds right and sane, but it is not...

All your babbling is about Metal and Metal only, without knowing that Apple works on WebGPU too.

How does WebGPU fit into your theory? Even Google and Microsoft support it.

All your toxic anti-Apple babbling about how evil Metal is vanishes into thin air once you see WebGPU as the future standard for graphics APIs and GPU compute.

This means Apple is not building a walled garden ("forcing developers to make stuff exclusive to them").

Instead, they force developers to target the best future we can get: WebGPU.

And they need to force it to defeat CUDA, OpenCL, OpenGL, DirectX, and so on.

WebGPU will be the one standard to defeat all of them.

I do not think you lose performance, because in WebGPU the Metal-style high-level shading language is translated to Vulkan SPIR-V... and from that point on it is exactly the same as Vulkan, because native Vulkan does the same thing: its shaders also reach the driver as SPIR-V.
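
To illustrate the "from that point on it is exactly the same as Vulkan" step: a Vulkan driver receives shaders as SPIR-V binaries, and a SPIR-V module has the same layout no matter which compiler produced it. Below is a minimal Python sketch of a hypothetical helper, `parse_spirv` (not part of any WebGPU or Vulkan API), that only reads that standard layout: a 5-word header starting with the magic number 0x07230203, followed by instructions whose first word packs the word count (high 16 bits) and opcode (low 16 bits). It does not perform the shader translation itself; real WebGPU implementations do that with their own shader compilers.

```python
import struct
from typing import NamedTuple

SPIRV_MAGIC = 0x07230203  # first word of every SPIR-V module


class SpirvHeader(NamedTuple):
    endian: str      # struct prefix: "<" little-endian, ">" big-endian
    major: int       # SPIR-V major version
    minor: int       # SPIR-V minor version
    generator: int   # magic number of the tool that produced the module
    bound: int       # every result <id> in the module is < bound


def parse_spirv(blob: bytes) -> tuple[SpirvHeader, list[tuple[int, int]]]:
    """Parse the 5-word header and split the rest into (opcode, word_count) pairs."""
    if len(blob) < 20 or len(blob) % 4 != 0:
        raise ValueError("not a SPIR-V module: need >= 20 bytes, a multiple of 4")

    # The magic number reveals the byte order the words were written in.
    if struct.unpack_from("<I", blob)[0] == SPIRV_MAGIC:
        endian = "<"
    elif struct.unpack_from(">I", blob)[0] == SPIRV_MAGIC:
        endian = ">"
    else:
        raise ValueError("bad SPIR-V magic number")

    words = struct.unpack(f"{endian}{len(blob) // 4}I", blob)
    version = words[1]  # layout: 0 | major | minor | 0
    header = SpirvHeader(
        endian=endian,
        major=(version >> 16) & 0xFF,
        minor=(version >> 8) & 0xFF,
        generator=words[2],
        bound=words[3],
    )

    # Walk the instruction stream that follows the header (word 4 is reserved).
    instructions = []
    i = 5
    while i < len(words):
        word_count = words[i] >> 16
        opcode = words[i] & 0xFFFF
        if word_count == 0 or i + word_count > len(words):
            raise ValueError(f"malformed instruction at word {i}")
        instructions.append((opcode, word_count))
        i += word_count
    return header, instructions
```

For example, a hand-built SPIR-V 1.0 module containing just `OpCapability Shader` (opcode 17) and `OpMemoryModel Logical GLSL450` (opcode 14) parses to the instruction list `[(17, 2), (14, 3)]`, whether its words are stored little- or big-endian.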
