Apple Announces The M1 Pro / M1 Max, Asahi Linux Starts Eyeing Their Bring-Up

-

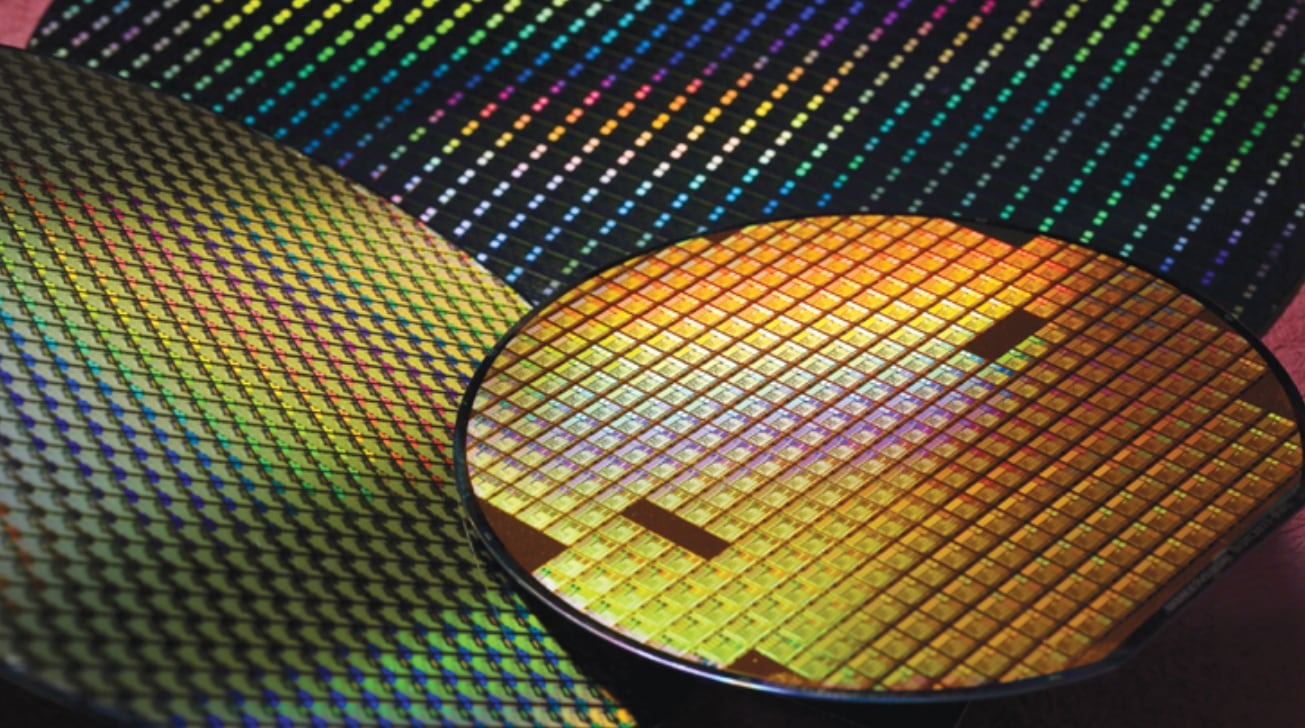

The A64FX is built on 7nm; Apple has already bought up most 3nm production at TSMC.

- Likes 1

-

> Originally posted by sdack:
>
> It is actually not quite true that Apple would be the only company. Fujitsu, with their A64FX design, has also created a "high-performance low-power ARM design". Technically, just about any ARM-based chip falls into such a category, each for its respective market, because delivering high performance at low power consumption is the key feature of the ARM design.
>
> Anyhow, I still think Fujitsu has made a better design, going straight for plenty of cores and HBM. Apple creating a monstrous 50b+ transistor chip by packing an assortment of units onto it and connecting it to LPDDR5 just makes me cringe. It is a practical design, but it is as if nothing had been learned from the issues of CISC designs: we are back at implementing a unit for every occasion, even though most of the time they do not all get used, and we again end up with unused potential. It makes me wonder what happens when one runs an application that does make use of all units. Will the chip run too hot, will it throttle down, will it expose bottlenecks in the design? ...

It is possible that Apple has a lot of redundancy in its chip (in case some of them get damaged), and that the architecture is not efficient at utilising resources, so Apple has over-engineered it just to beat the competition. Anyway, they might improve on this over several iterations.

-

> Originally posted by coder:
>
> "Judge not, lest ye be judged."
>
> What Apple built is an APU. It's functionally equivalent to mainstream Intel CPUs, AMD APUs, and console chips. If you think it's wrong to put the GPU & video blocks on the same die as the CPU cores, then you should level the same complaints at all of them.

I never complained about anything. Your entire post repeats what I said, with a bit more technical jargon thrown in. Nothing new, and no point either. I know what Apple has made. I also understand that it has a well-tailored use case. There are many computing needs that require more than one processor and certainly more than one USB port. Apple is not making anything for them. Hence, Intel, AMD, etc. will keep serving their ever-growing consumer market and giant library of existing software for the next decade. I'd say Fujitsu is doing something substantially innovative in the ARM world.

SoCs cannot be scaled beyond a certain point because of physical limitations, and the computing power in the M1 is impressive, but it is not mind-bogglingly huge. As for the NVMe failing, the RAM can fail as well, and it cannot be replaced. Besides, Apple has made it impossible for anybody to swap out the NVMe for a blank one, if I read a few repair blog posts right. Not the kind of product to rely upon if it fails.

I really appreciate having modular parts from different manufacturers, with individual warranties, that come as a blank slate and are not tied to some company with a hard-coded serial ID. Traditional desktop computing is not going anywhere.

-

> Originally posted by coder:
>
> If the code you run on them is the same

And pigs might fly, but usually they don't. Sure, you can run the same code, but usually you have a Ryzen 7 5800X based gaming desktop and an M1 based MacBook Pro. You won't take your gaming desktop on the go and you won't run it on a battery pack. You won't turn it into an energy-efficient server, and the same holds true for your MacBook Pro, though for different reasons.

When you play a game for two hours on your gaming PC, you don't give a fsck how much power it is drawing unless that becomes a heating problem. The 0.42 cents difference on the electricity bill is negligible compared to the money you spent on the gaming desktop and the video games (or even the MacBook Pro, for that matter).

> Originally posted by coder:
>
> still interesting to see the progression of their sophistication, if purely from a technical perspective.

Yes, that's true, no doubt, but the same progression can be observed in a more useful way by comparing the M1 to some AMD/Intel/whatever MOBILE counterpart.

> Originally posted by tildearrow:
>
> Currently (two machines, one is a dinosaur desktop turned into a server and the other is a more powerful server): ~300 W = 7,200 Wh/day = 216 kWh/month = $37.80/month
>
> M1: ~15 W = 360 Wh/day = 10.8 kWh/month = $1.89/month
>
> I would save $35.91 per month...

Please wake me up when you are actually saving those bucks because you are actually using your MacBook Pro to replace those two servers, thanks.
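For anyone who wants to check the electricity figures quoted from tildearrow, here is a minimal Python sketch of the arithmetic. The 30-day month and the price of $0.175 per kWh are assumptions inferred from the quoted numbers ($37.80 for 216 kWh); the function names are made up for illustration, and the wattages are the poster's rough estimates, not measurements.

```python
HOURS_PER_DAY = 24
DAYS_PER_MONTH = 30        # assumption: the quoted figures imply a 30-day month
PRICE_USD_PER_KWH = 0.175  # assumption: inferred from $37.80 / 216 kWh


def monthly_energy_kwh(avg_power_w: float) -> float:
    """Energy used in one month by a device drawing avg_power_w continuously."""
    return avg_power_w * HOURS_PER_DAY * DAYS_PER_MONTH / 1000


def monthly_cost_usd(avg_power_w: float) -> float:
    """Monthly electricity cost at the assumed flat price per kWh."""
    return monthly_energy_kwh(avg_power_w) * PRICE_USD_PER_KWH


if __name__ == "__main__":
    servers_w = 300  # the two existing machines, per the quoted post
    m1_w = 15        # the quoted estimate for an M1 machine
    for label, watts in [("two servers", servers_w), ("M1", m1_w)]:
        print(f"{label}: {monthly_energy_kwh(watts):.1f} kWh/month, "
              f"${monthly_cost_usd(watts):.2f}/month")
    saving = monthly_cost_usd(servers_w) - monthly_cost_usd(m1_w)
    print(f"saving: ${saving:.2f}/month")
```

Note that the inputs are average power in watts; the energy only becomes Wh or kWh once it is multiplied by time.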

-

> Originally posted by smitty3268:
>
> 57 billion transistors. That's a huge chip.
>
> Some quick math puts the CPU side a bit over 10bn transistors, which lines up very closely with a 5950X CPU.
>
> The GPU is then ~47bn, which is a lot higher than even the high-end discrete AMD/NVIDIA GPUs, which I believe are around 27-28bn transistors. Apple is probably running their hardware at significantly lower clock speeds to get lower power usage.

This has always been the part I don't get. Yes, Apple designs CPUs well. But a decent proportion of their advantage comes from the amount of silicon they use, and from the fact that they use the latest nodes. That lets them make fatter, slower-clocked cores with a higher IPC to get power savings, but if competitors used the same transistor count at the same process node, most of the advantage would probably not stick.

A Ryzen 58XX/59XX mobile APU (8C/16T with caches, IO and an 8 CU GPU) totals 10.7b transistors, while the M1 Pro is 37b and the M1 Max is 57b. AMD could literally triple the core count and GPU size and still have a smaller chip.

Obviously Apple has a lead, but a lot of that is $$ spent on silicon area: in the price vs. performance vs. area trade-off of XPU design, Apple has just said fuck it to the area to win the rest, and absorbs the higher cost of the M1 into the product's price. As a consumer buying the products, that's great, but I don't think the advantage will last, especially as they still have a monolithic chip design.
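The "triple it and still be smaller" claim is easy to sanity-check. The sketch below uses only the transistor counts quoted in this post (10.7b, 37b, 57b); the dictionary and function names are hypothetical. It also assumes that scaling a design multiplies its transistor count linearly, which is a simplification. Transistor count is only a proxy for die area here, since the chips are built on different process nodes.

```python
# Transistor counts in billions, as quoted in the post above.
TRANSISTORS_BN = {
    "Ryzen mobile APU": 10.7,
    "M1 Pro": 37.0,
    "M1 Max": 57.0,
}


def ratio_to_apu(chip: str) -> float:
    """How many times more transistors `chip` has than the Ryzen mobile APU."""
    return TRANSISTORS_BN[chip] / TRANSISTORS_BN["Ryzen mobile APU"]


def scaled_apu_fits(factor: float, chip: str) -> bool:
    """True if the APU scaled by `factor` still has fewer transistors than `chip`.

    Assumes transistor count scales linearly with the factor (a simplification).
    """
    return factor * TRANSISTORS_BN["Ryzen mobile APU"] < TRANSISTORS_BN[chip]


if __name__ == "__main__":
    for chip in ("M1 Pro", "M1 Max"):
        print(f"{chip}: {ratio_to_apu(chip):.2f}x the APU's transistor count")
    print("3x APU under M1 Pro budget:", scaled_apu_fits(3, "M1 Pro"))
    print("5x APU under M1 Max budget:", scaled_apu_fits(5, "M1 Max"))
```

On these numbers a tripled APU comes to 32.1b transistors, which is below both Apple chips.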

- Likes 2

-

> Originally posted by sdack:
>
> Anyhow, I still think Fujitsu has made a better design, going straight for plenty of cores and HBM. Apple creating a monstrous 50b+ transistor chip by packing an assortment of units onto it and connecting it to LPDDR5 just makes me cringe.

Fujitsu's CPU is purpose-built for HPC, while Apple is making highly-integrated solutions for tablets, laptops, and desktop computers that need substantial graphics horsepower. They're solving different problems and optimizing different things.

> Originally posted by sdack:
>
> we are back at implementing a unit for every occasion, even though most of the time they do not all get used

The reason mobile SoCs tend to have dedicated NPUs (neural processing units) and ISPs (image signal processors) is that they're more power-efficient at those tasks than either the CPU or GPU. And when you're selling a premium product, it's worth burning a little more die space to get longer battery life. Remember, Siri is always listening!

> Originally posted by sdack:
>
> It makes me wonder what happens when one runs an application that does make use of all units. Will the chip run too hot, will it throttle down, will it expose bottlenecks in the design? ...

These days, CPUs tend to have both power limits and thermal limits. If your cooling is adequate, you're going to throttle for power utilization before you hit the thermal ceiling. Some tablets and thin laptops will have inadequate cooling, however.
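To illustrate the power-limit versus thermal-limit point, here is a toy steady-state model in Python. It is a sketch under simplifying assumptions, not how any real chip's firmware works: die temperature is modelled as ambient plus power times a fixed thermal resistance, and every number and name (`Chip`, `sustained_power`, the limits) is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    power_limit_w: float               # package power cap (hypothetical value)
    temp_limit_c: float                # temperature ceiling (hypothetical value)
    thermal_resistance_c_per_w: float  # cooler quality: degrees C of rise per watt
    ambient_c: float = 25.0


def sustained_power(chip: Chip, demand_w: float) -> tuple[float, str]:
    """Return (sustained power in W, which limit applies) in steady state."""
    # Highest power the cooler can dissipate without exceeding the temperature ceiling.
    thermal_cap_w = (chip.temp_limit_c - chip.ambient_c) / chip.thermal_resistance_c_per_w
    if demand_w <= min(chip.power_limit_w, thermal_cap_w):
        return demand_w, "unthrottled"
    if chip.power_limit_w <= thermal_cap_w:
        # Adequate cooling: the power cap is reached before the thermal ceiling.
        return chip.power_limit_w, "power-limited"
    # Inadequate cooling: the thermal ceiling is reached first.
    return thermal_cap_w, "thermally-limited"


if __name__ == "__main__":
    well_cooled = Chip(power_limit_w=30, temp_limit_c=100, thermal_resistance_c_per_w=1.0)
    thin_laptop = Chip(power_limit_w=30, temp_limit_c=100, thermal_resistance_c_per_w=3.75)
    for name, chip in [("well cooled", well_cooled), ("thin laptop", thin_laptop)]:
        print(name, sustained_power(chip, demand_w=60))
```

In this model, the same workload ends up power-limited on the well-cooled part and thermally limited on the poorly cooled one, which is the distinction being made above.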

- Likes 1

-

> Originally posted by Developer12:
>
> I don't have to "think" it happened. It's a known part of the M1 microarchitecture.

I was referring to the part where you said "The M1, apple's first effort, ...", implying you thought the M1 contained the first ARM core Apple designed. My comments were pretty clearly addressing that aspect of your statement, but whatever.

- Likes 1

-

> Originally posted by Slartifartblast:
>
> Their buying power stops others from using advanced nodes at TSMC.

It is actually not quite true that Apple would be the only company. Fujitsu, with their A64FX design, has also created a "high-performance low-power ARM design". Technically, just about any ARM-based chip falls into such a category, each for its respective market, because delivering high performance at low power consumption is the key feature of the ARM design.

Anyhow, I still think Fujitsu has made a better design, going straight for plenty of cores and HBM. Apple creating a monstrous 50b+ transistor chip by packing an assortment of units onto it and connecting it to LPDDR5 just makes me cringe. It is a practical design, but it is as if nothing had been learned from the issues of CISC designs: we are back at implementing a unit for every occasion, even though most of the time they do not all get used, and we again end up with unused potential. It makes me wonder what happens when one runs an application that does make use of all units. Will the chip run too hot, will it throttle down, will it expose bottlenecks in the design? ...

- Likes 1

-

> Originally posted by tildearrow:
>
> It's very sad that Apple is the only company with a high-performance low-power ARM design.

Their buying power stops others from using advanced nodes at TSMC.

- Likes 1

-

> Originally posted by nranger:
>
> That's a very good point. Apple supports older iPhones with updates longer, largely because their 30% cut of app-store revenue makes it profitable to do so. It makes sense to make the "ownership marketing" experience consistent across all their products. The more their walled garden keeps consumers in the ecosystem, the more opportunity for Apple to make money selling them a watch, a display, an iPad, a copy of Final Cut, etc, etc.

Apple users have never been much concerned with the details. They have accepted that they have to pay a high price for something they do not want to understand. It is not very surprising when Apple then produces pompous SoCs. Apple users will pay for it and not have an opinion about it. However, to see that it has a dedicated AI unit makes me wonder how often the unit will be used for real-world tasks, how often the user is aware of it, and when it is perhaps used to gather data about the user.
