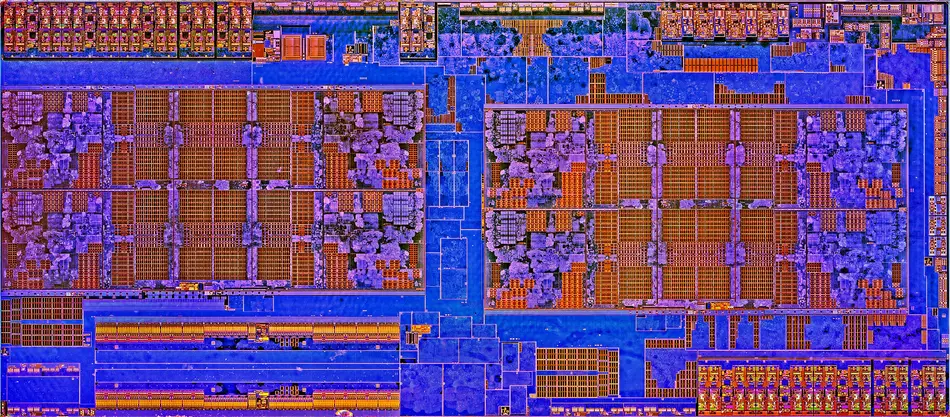

AMD Announces EPYC 7532 + EPYC 7662 As Newest Rome Processors

-

Seen like this, even now with 256MB most of the OS stays in the cache. I could see some benefit for bit math...

-

> Originally posted by tchiwam:
> Could they make a cpu with 4 ccx and 4 cache chiplets?

afaik for Zen the cache is in the CPU chiplet https://www.gearprimer.com/wiki/ccx/

But in the future they may split the cache into its own chiplet, if it keeps increasing like this there isn't much choice.


-

> Originally posted by skeevy420:
> That's literally my plan -- Wait for companies to start selling off their workstations and servers when they upgrade in 4-5 years.

fixed.


-

> Originally posted by starshipeleven:
> And yeah, they will be selling these things left and right, people on servethehome for example all have massive hard-ons about these processors, so expect to get stupid cheap 64-core CPUs in a few years while high end ones get a few hundreds.

That's literally my plan -- Wait for sys-admins to start selling off their workstations and servers when they upgrade in a couple of years.


-

Hopefully, there will also be a 7662P as a "reasonably" priced alternative for people who are discontent with the TR 3990X effective 256 GB memory limit.

> Originally posted by MrEcho:
> I would love to see a Ryzen with 1GB of L3/L4, they have enough room on the socket to do so. With this chiplet design, it opens up options.

I think there will be on-package HBM at some point, 16 GB or more should be no problem.


-

> Originally posted by starshipeleven:
> Ummmmmmm.... I think it was more that modern stuff is very miniaturized so charged particles have an easier time changing component state or punching holes, while affecting larger, heavier and dumber components requires more energy because they are simply bigger.
>
> Older chips are actually very much larger in surface space (at the same relative performance), they are printed at 80nm or even bigger process nodes.

True, but this is implied by higher density: if the die size stays within roughly the same order of magnitude while the density increases by several orders of magnitude over the decades, the structures get smaller and so does the charge-carrier density in the tracks. A charged incident particle can therefore induce enough voltage to switch a state.

For punching holes you need the right kinetic energy and charge state, otherwise there will not be enough ion-matter interaction to dump the thermal energy needed for a hole (Bragg peak). This only applies to charged ions, but they are also the only particles that are "strong" enough and available in high quantity up there. But yes, this is also a critical mechanism which has to be considered for space electronics... good old tubes.

> Originally posted by starshipeleven:
> Yeah. Cache is usually designed as a modular bunch of smaller cells that can be disabled independently so you can minimize the amount of cache lost for every defect.
>
> Otherwise having a single defect in the cache block would mean you have to disable the whole block so you end with some cores that have no attached L1/L2/L3 cache, that's insane, you are trashing that core's performance.

Thanks for the input

Last edited by CochainComplex; 20 February 2020, 07:54 AM.

- Likes 1


-

> Originally posted by phoenix_rizzen:
> Not everything needs a 64-core CPU.

Economies of scale are still a thing. If you sell enough 64-core CPUs you can really drop prices dramatically, and then your 64-core CPUs become so much cheaper than the weaker CPUs that you might want to mount them even on devices that don't need them. This is a completely different strategy than Intel's "milk the suckers for as long as possible lololololol" master plan.

And yeah, they will be selling these things left and right, people on servethehome for example all have massive hard-ons about these processors, so expect to get stupid cheap 64-core CPUs in a few years while high end ones get a few hundreds.

Last edited by starshipeleven; 19 February 2020, 06:39 PM.


-

> Originally posted by wizard69:
> The bigger issue is that disabling cores isn’t a huge impact on overall thermals.

Wat? Yes it has. Frequency is lowered as core count increases, why do you think they do that.

> Disabling cores due to defects is another thing but AMD doesn’t seem to offer a lot of variants due to defective cores. It is probably easier for them to simply sell the defective chiplet as a consumer part than to muddy the Rome lineup. It would be very interesting to know what is actually happening but I get the feeling that yields are very good.

With chiplets it's pretty easy to deal with defects as you can just pick and match all defective ones to make the 32 core variant, and take all the good ones to make the 64 core one, with only the very badly defective ones that are discarded. Afaik they also have a "chipsetlet" aka a dedicated chiplet for pcie and other high speed controllers so the other chiplets are mostly CPU cores and caches, further limiting the waste in case they need to throw away a chiplet.
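
To make the pick-and-match idea above concrete, here is a rough Python sketch of sorting chiplets into bins. It is only an illustration, not AMD's actual process: the `Chiplet` class, the bin names and all three constants (8 cores per chiplet, 8 chiplets per package, 4 working cores as the cut-off for the 32 core variant) are assumptions made up for the example.

```python
from dataclasses import dataclass

# All three constants are illustrative assumptions, not vendor data.
CORES_PER_CHIPLET = 8      # cores on one CPU chiplet
CHIPLETS_PER_PACKAGE = 8   # CPU chiplets mounted on one package
MIN_GOOD_CORES = 4         # fewer working cores than this: discard


@dataclass
class Chiplet:
    """Hypothetical test result for one chiplet."""
    good_cores: int


def bin_chiplets(chiplets):
    """Sort chiplets into fully working, partially working and scrap."""
    bins = {"full": [], "partial": [], "scrap": []}
    for chiplet in chiplets:
        if chiplet.good_cores == CORES_PER_CHIPLET:
            bins["full"].append(chiplet)
        elif chiplet.good_cores >= MIN_GOOD_CORES:
            bins["partial"].append(chiplet)
        else:
            bins["scrap"].append(chiplet)
    return bins


def packages_from(bins):
    """Count how many complete packages each bin can supply."""
    return {
        "64-core": len(bins["full"]) // CHIPLETS_PER_PACKAGE,
        "32-core": len(bins["partial"]) // CHIPLETS_PER_PACKAGE,
    }


if __name__ == "__main__":
    # Made-up batch: 10 perfect chiplets, 8 with some dead cores, 2 very bad ones.
    batch = [Chiplet(n) for n in [8] * 10 + [6, 5, 4, 4, 7, 4, 5, 6] + [2, 0]]
    bins = bin_chiplets(batch)
    print(packages_from(bins), "scrapped:", len(bins["scrap"]))
```

The point of the sketch is that only the chiplets in the scrap bin are wasted, and each of those is a small die rather than a whole 64 core monolithic chip.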


-

> Originally posted by wizard69:
> There is no incentive to hold back. The cores in these processors are not as power hungry as some might imagine. Caches on the other hand are very power hungry and as such are limited in size to keep power in check.
>
> so I’m wondering why do you want fewer cores, it makes no sense to me.

Not every server needs a bunch of CPU resources, but it might need lots of RAM and/or lots of PCIe lanes.

For instance, a ZFS-based storage server that's exporting volumes via iSCSI doesn't need a lot of CPU cores, but it does need a lot of PCIe lanes for disk controllers, and possibly RAM for cache. The 8- and 16-core versions of the EPYC CPU are perfect for this (and if they come out with a 16-core Threadripper 3000, that could work as well).

Same for an NFS server.

A server that does a bunch of number crunching on GPUs would need a lot of PCIe lanes and possibly RAM, but doesn't need a lot of CPU cores to manage the data flow.

Not everything needs a 64-core CPU.

- Likes 2
