To be honest, performance per dollar isn't so bad for Intel. It's worse, but not by a great margin. If it were my wallet, I'd go with AMD, but if it's my company's wallet, I'd go with what's certified for what my company needs.

Ubuntu 20.04 + Linux 5.5: Fresh Benchmarks Of AMD EPYC Rome vs. Intel Xeon Cascade Lake

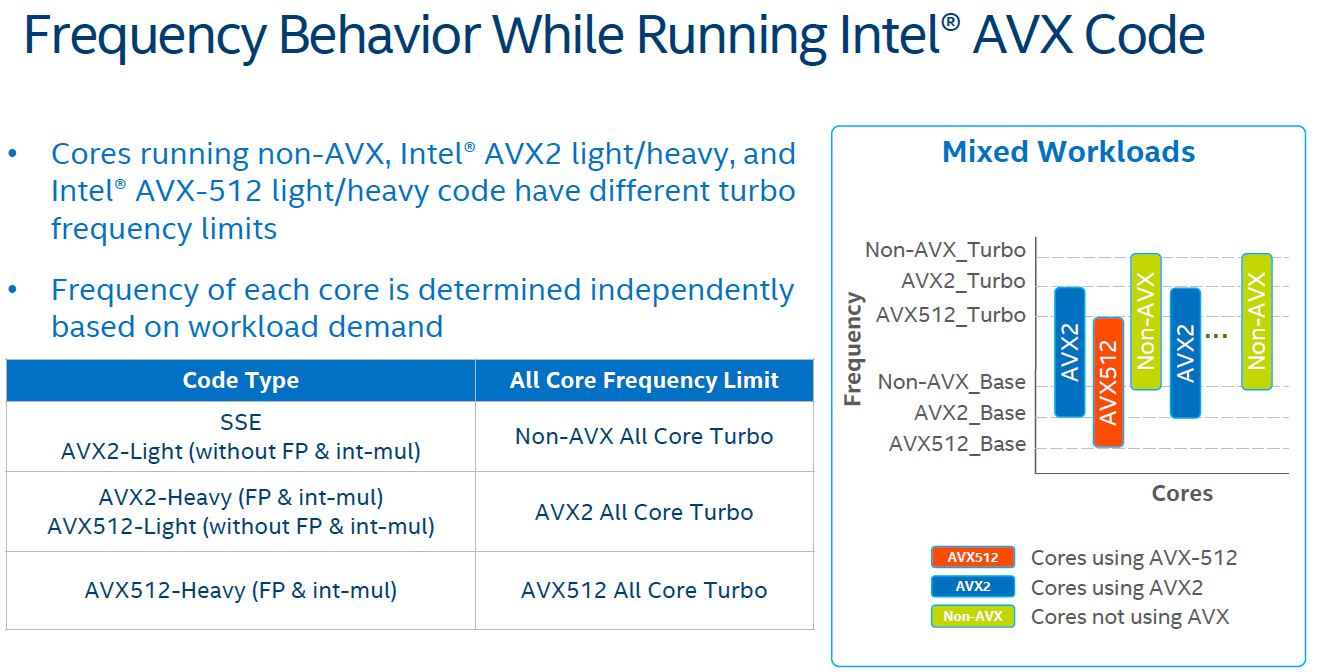

Originally posted by Paradigm Shifter: The only reason I even look at Intel is AVX-512. But that is a very specific use case, and to be honest AVX2 Threadripper isn't that much slower, particularly with newer GCC...

Even AVX-512 is not attractive to me anymore, after I found the official Intel document stating that the AVX-512 part may run at a lower frequency than the BASE frequency.

...

Sorry, I'm having a brain fail... when doing the performance/dollar comparisons, is it CPU cost only, or does it also factor in platform cost (motherboard, maybe RAM)? I imagine for the higher-end chips it really won't make much difference, but what about the lower-end ones?

This is hilarious.

This has been known for some years now. With a full AVX-512 workload, the clock can drop as low as 1.2 GHz. But that's OK, since the overall throughput you get is still much better than with AVX2 alone. The problem is that I've seen this pay off with only a very few special, highly tuned codes. Your average off-the-shelf or home-grown code rarely touches AVX-512, and if you do have a use case for it, it's almost always best to use it through Intel's math libraries.
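Whether a lower AVX-512 clock still pays off comes down to simple arithmetic. Below is a minimal sketch under stated assumptions: per-core peak double-precision throughput is modelled as clock × vector lanes × FMA units × 2, and the clocks and FMA-unit counts in the usage lines are hypothetical inputs, not measured figures for any particular Xeon. Some Xeon SKUs have only one 512-bit FMA unit per core, which halves the AVX-512 side of the comparison.

```python
def peak_gflops(clock_ghz: float, vector_bits: int, fma_units: int,
                element_bits: int = 64) -> float:
    """Theoretical per-core peak GFLOP/s for FMA-bound code.

    Assumes one FMA per unit per cycle, each FMA counted as two
    floating-point operations. Real code rarely gets close to this.
    """
    lanes = vector_bits // element_bits  # e.g. 8 doubles in a 512-bit register
    return clock_ghz * lanes * fma_units * 2


def avx512_wins(avx2_clock_ghz: float, avx512_clock_ghz: float,
                avx2_fma_units: int = 2, avx512_fma_units: int = 2) -> bool:
    """True if AVX-512 peak throughput beats AVX2 at the given (hypothetical) clocks."""
    avx512 = peak_gflops(avx512_clock_ghz, 512, avx512_fma_units)
    avx2 = peak_gflops(avx2_clock_ghz, 256, avx2_fma_units)
    return avx512 > avx2


if __name__ == "__main__":
    # Hypothetical clocks: AVX2 sustained at 2.0 GHz, AVX-512 dropping to 1.2 GHz.
    print(peak_gflops(2.0, 256, 2))  # AVX2, two FMA units
    print(peak_gflops(1.2, 512, 2))  # AVX-512, two FMA units
    print(peak_gflops(1.2, 512, 1))  # AVX-512, one FMA unit
    print(avx512_wins(2.0, 1.2), avx512_wins(2.0, 1.2, avx512_fma_units=1))
```

With these made-up numbers, AVX-512 at 1.2 GHz still comes out ahead of AVX2 at 2.0 GHz when both sides have two FMA units (38.4 vs 32 GFLOP/s), but not when the AVX-512 side has only one (19.2 GFLOP/s).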

Originally posted by Buntolo: if it's my company's wallet, I'd go with what's certified for what my company needs.

This! In my opinion, this is everything that is wrong with the IT industry on the enterprise side. It's why a new player can never break into the market. It's why people still use Citrix XenServer when XCP-ng is vastly superior. It's why HP, Dell, etc. have shitty licensing costs for iLO, iDRAC and firmware updates, yet people still buy them over Gigabyte or ASRock.

It's almost as if the current players trick ill-informed upper managers and directors into thinking they need certifications like this, to maintain the status quo and their fat $$$ markups. No certification is any substitute whatsoever for properly testing your workload yourself!

Having a bunch of nerdy people like us who understand the workload and how the system works actually test it is way better than buying an ancient Xeon because that's what was "certified" 10 years ago, when the monolith of proprietary spaghetti code was written.

Not having a go at you, mate, just venting at the state of the industry, which has been taken over by meetings, contracts and bean counters.

Originally posted by zxy_thf: Even AVX-512 is not attractive to me anymore, after I found the official Intel document stating that the AVX-512 part may run at a lower frequency than the BASE frequency.

This is hilarious.

(from servethehome.com)

You are aware that this is the case even for AVX2 on Intel, right? Only quite recently did they decouple the AVX clock of one core from the other cores; before that, the entire CPU slowed down, not just the cores running AVX code.

Can I make a suggestion for reviewing Performance per Dollar?

We should be looking at total system cost, not just raw CPU cost...or at the very least show both and give a few options for the price of the rest of the machine.

A system build costs a lot more than just the CPU. You've probably spent $3K on RAM, $1K on the chassis and PSU, $1K on the motherboard, $2K on drives, maybe upwards of $8K on GPUs, and then there's another $5-10K for software licensing.

When you factor in that it costs you $15K just to have something you can drop that CPU into, it really changes the P/$ a whole lot.

Suddenly a $5K CPU only has to be 25% faster than a $1K CPU in order to be tied for P/$...because you're not comparing a $5K CPU to a $1K CPU, you're comparing a $20,000 system to a $16,000 system, which is only a 25% increase in cost.

So I'd really love to see that factored into these kinds of reviews. Show us the P/$ graph for just the CPUs, then show us the P/$ graph when those CPUs are dropped into a $5,000 system, $10,000 system, $15,000 system, $20,000 system.
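The break-even arithmetic in this post generalises to any platform cost. Here is a minimal Python sketch, assuming "platform cost" is a single number covering everything except the CPU (RAM, chassis, drives, GPUs, licences) and that performance is one benchmark score; the function name is hypothetical.

```python
def break_even_speedup(cheap_cpu: float, pricey_cpu: float,
                       platform_cost: float) -> float:
    """Speedup the pricier CPU needs to tie the cheaper one on performance per dollar.

    Performance per dollar ties when perf_fast / (platform + pricey) equals
    perf_slow / (platform + cheap), i.e. when perf_fast / perf_slow equals
    (platform + pricey) / (platform + cheap).
    """
    return (platform_cost + pricey_cpu) / (platform_cost + cheap_cpu)


if __name__ == "__main__":
    # $1K vs $5K CPU, as in the example in this post, across several platform costs.
    for platform in (0, 5_000, 10_000, 15_000, 20_000):
        ratio = break_even_speedup(1_000, 5_000, platform)
        print(f"platform ${platform:,}: needs {ratio:.2f}x ({(ratio - 1) * 100:.0f}% faster)")
```

With a $15,000 platform the ratio is exactly 1.25, the 25% figure from the post; with no platform cost at all, the $5K CPU would need to be 5x faster to tie.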

But aside from that, thanks for the thorough and awesome article!

Sorry, just wanted to add an example to my previous post, using the VRay benchmark.

EPYC 7642: 51323

EPYC 7502: 35965

EPYC 7402: 32080

EPYC 7302: 22335

XEON 4216: 17061

XEON 5218: 17034

XEON 8253: 15092

Now let's assume a baseline pre-CPU cost of $10,000 for the system and licenses, add the cost of each CPU to that, and then look at performance per dollar (PPD):

EPYC 7642: 3.35ppd

EPYC 7502: 2.78ppd

EPYC 7402: 2.67ppd

EPYC 7302: 2.01ppd

XEON 4216: 1.54ppd

XEON 5218: 1.49ppd

XEON 8253: 1.078ppd

That paints a WAY more accurate picture of the actual performance per dollar of these CPUs...and in fact I'd say you wouldn't ever want to judge it any other way.
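The post does not list the CPU prices behind these PPD figures. The sketch below writes the calculation out as described (VRay score divided by $10,000 plus the CPU price) and, as an illustration, runs it backwards to estimate the CPU prices the posted figures imply. The helper names are hypothetical, and because the posted PPD values are rounded, the implied prices are rough estimates, not actual list prices.

```python
BASE_COST = 10_000  # pre-CPU system + licence cost assumed in the post ($)

# (VRay score, posted PPD) pairs copied from the post.
POSTED = {
    "EPYC 7642": (51323, 3.35),
    "EPYC 7502": (35965, 2.78),
    "EPYC 7402": (32080, 2.67),
    "EPYC 7302": (22335, 2.01),
    "XEON 4216": (17061, 1.54),
    "XEON 5218": (17034, 1.49),
    "XEON 8253": (15092, 1.078),
}


def system_ppd(score: float, cpu_price: float, base_cost: float = BASE_COST) -> float:
    """Benchmark points per dollar of total system cost (base + CPU)."""
    return score / (base_cost + cpu_price)


def implied_cpu_price(score: float, ppd: float, base_cost: float = BASE_COST) -> float:
    """Invert system_ppd: the CPU price that would produce the given PPD."""
    return score / ppd - base_cost


if __name__ == "__main__":
    for name, (score, ppd) in POSTED.items():
        # Approximate only: the posted PPD values are rounded.
        print(f"{name}: implied CPU price ~${implied_cpu_price(score, ppd):,.0f}")
```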

Originally posted by andrewjoy: This! In my opinion, this is everything that is wrong with the IT industry on the enterprise side. [...] Not having a go at you, mate, just venting at the state of the industry, which has been taken over by meetings, contracts and bean counters.

Often management doesn't even want to upgrade, since they see it as a tax, an extra expense, especially if it's just to stay on par rather than to expand.
