Originally posted by F.Ultra


Who says you can't hook it up to a 4K monitor or TV and watch a movie on it?

I honestly don't buy GPUs just for games.

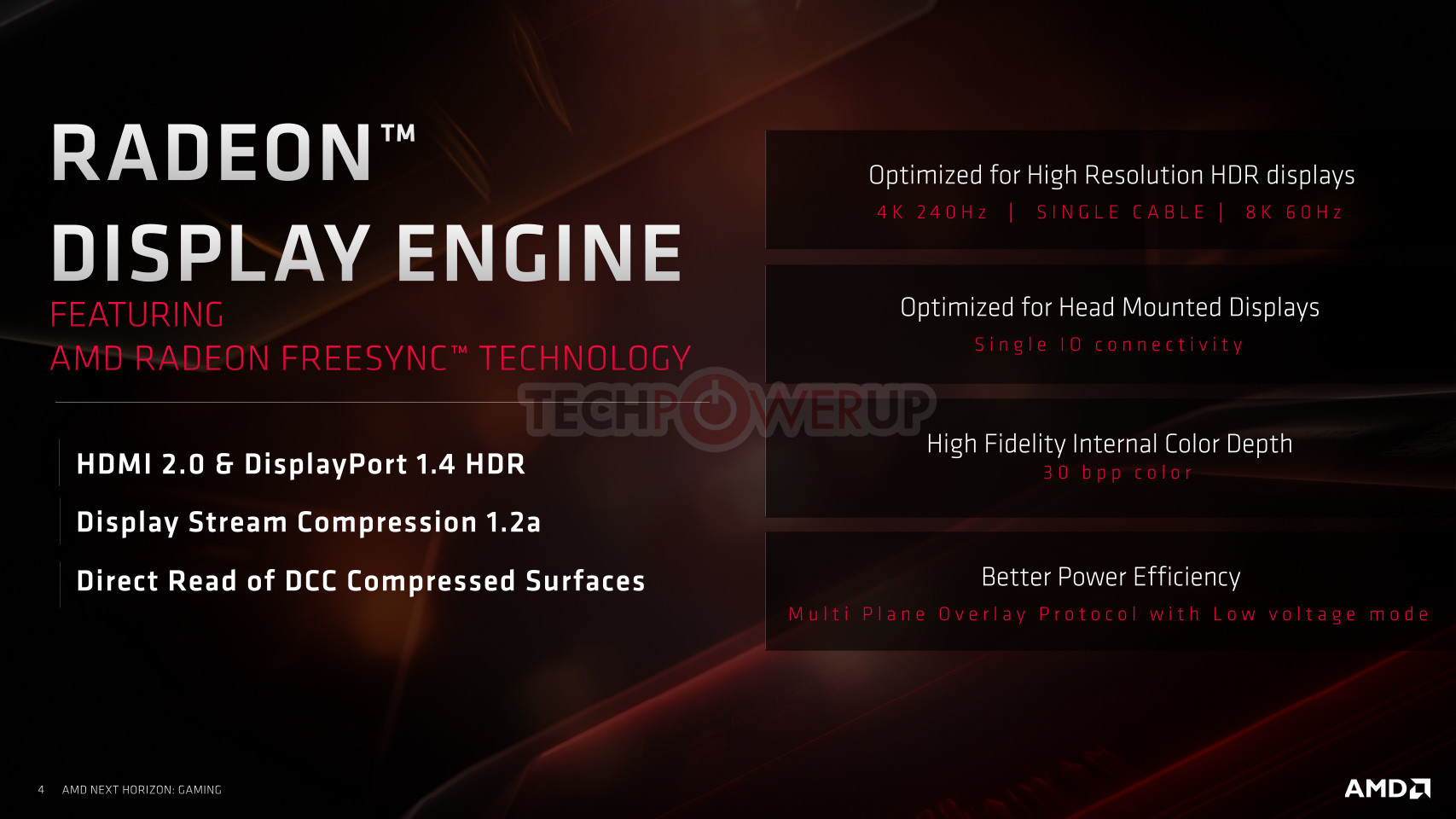

I want to be able to watch movies on them with the latest quality features turned on, like HDR (high dynamic range) and HFR (high frame rate).

I also want to buy a 4K@120Hz (or faster) display in the future, once they become available.

Why should I need a new GPU for this, just because they took the cheaper path of using a very old version of HDMI instead of a somewhat newer one?
