This makes sense. Google has stripped everything out of the Linux kernel, and it is hardly Linux anymore on their big 900,000-server cluster. If you want to do something highly specialized to reach maximum performance, then it makes sense to strip out everything else. For instance, you don't need fancy I/O, a complex scheduler, device drivers, etc.

I know that Google does a lot of customization, but I expect it's mostly going to be for their proprietary file systems and to cut some overhead out of certain key parts.

However, the IBM Blue Gene on the TOP500, for instance, is listed as running Linux but does not really run Linux.

On systems like Blue Gene, I believe they use Linux clusters to manage the execution of jobs for the rest of the cluster. So they will have something like a quarter of the cluster, or a separate small cluster of machines, that handles monitoring, job execution, scheduling, and load balancing for the rest of the cluster. That would effectively be the user interface for the rest of the system.

What I am trying to say is that something does not add up. Something is strange. Don't you agree?

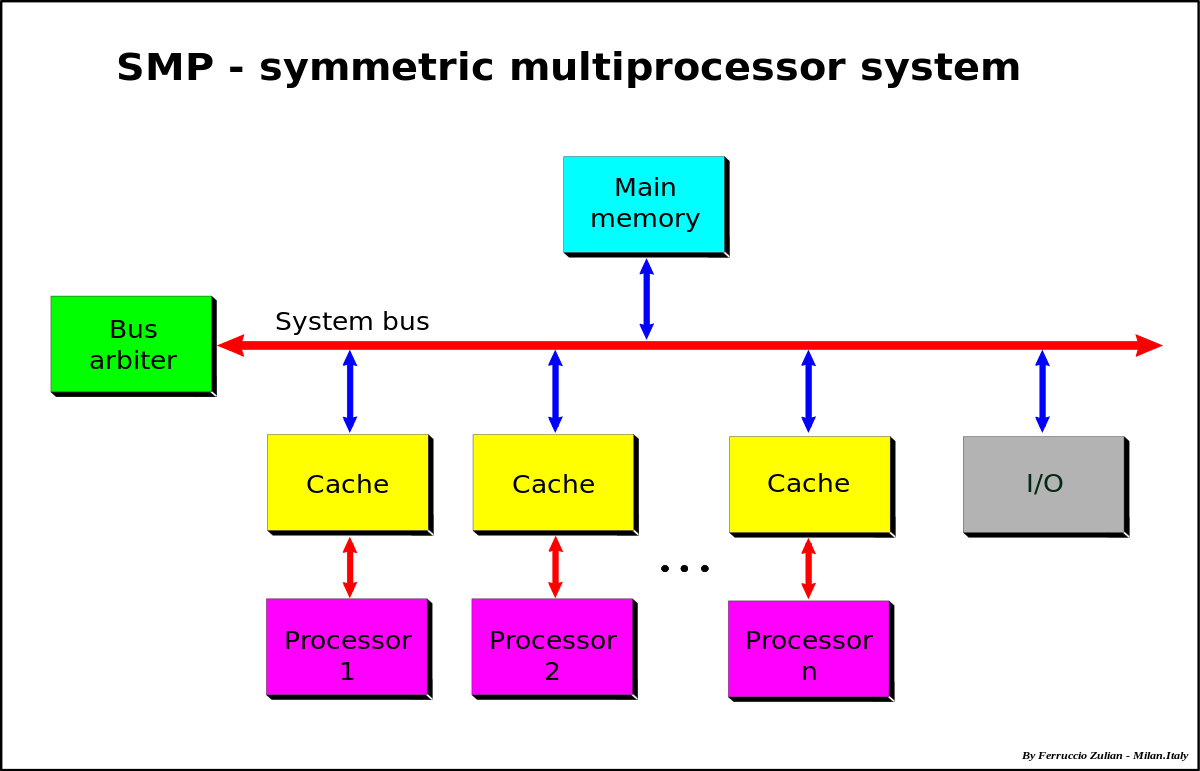

Linux supporters: Linux handles SMP just fine.

Me: Why doesn't Linux go for the big money then?

Linux supporters: because we are doing charity work.

Me: Say again?

The majority of people who work on the Linux kernel, and on most other systems, are professionals who are paid to do it for a living. Linux is big business now and makes a lot of people a lot of money as a platform to run other software and services.
